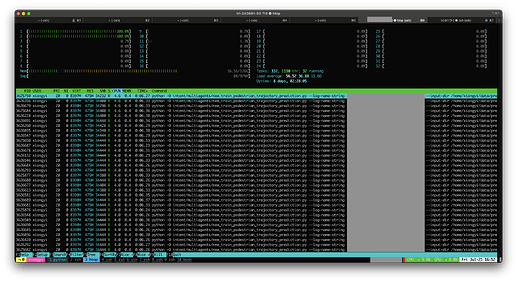

I’m having a weird issue where `torch.multiprocessing` only uses 2 or 3 CPU cores. It only seems to happen on our new machine with an i9-13900K CPU.

It works fine on our older Xeon machines, where all 50 cores run at 100% during the data preprocessing stage, as expected.

The code is the same.

I’m using PyTorch 2.0.1, and both machines run Ubuntu 20.04.
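One way to narrow this down is to check which CPUs each worker is actually allowed to run on. Below is a minimal diagnostic sketch, assuming a Linux host (`os.sched_getaffinity` is Linux-only). Because `torch.multiprocessing` is a wrapper around Python's standard `multiprocessing` module with the same API, the sketch uses the standard module directly. The helper names `worker_affinity` and `report_affinity` and the pool size of 4 are hypothetical, chosen only for illustration.

```python
import multiprocessing as mp
import os


def worker_affinity(_):
    """Return this worker's PID and the sorted list of CPUs it may run on."""
    return os.getpid(), sorted(os.sched_getaffinity(0))


def report_affinity(num_workers=4):
    """Return the parent's allowed CPUs plus (pid, allowed CPUs) for each worker."""
    parent = sorted(os.sched_getaffinity(0))
    with mp.Pool(num_workers) as pool:
        results = pool.map(worker_affinity, range(num_workers))
    return parent, results


if __name__ == "__main__":
    print("os.cpu_count():", os.cpu_count())
    parent, results = report_affinity()
    print(f"parent may use {len(parent)} CPUs: {parent}")
    for pid, cpus in results:
        print(f"worker {pid} may use {len(cpus)} CPUs: {cpus}")
```

If the parent and workers report the full core count but only 2 or 3 cores are busy, the affinity mask is probably not the cause. If they report only a few allowed CPUs, something likely narrowed the mask before the pool was created. Running the script once as-is and once with `import torch` added at the top might show whether an import changes the mask.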