I noticed strange behavior when using multiprocessing. My main process sends data to a queue. Two spawned processes read from the queue and create CUDA tensors, one process per GPU. If I print the tensors, nvidia-smi shows the process running on GPU 1 also appearing on GPU 0. So it looks as if I had three processes, two on GPU 0 and one on GPU 1. Two of these three entries have the same PID, namely the PID of the process running on GPU 1. If I instead print the tensor size or anything else, or print nothing at all, I end up with only two processes, each running on one GPU. The behavior is reproducible with multiprocessing as well as torch.multiprocessing. See the code below for a reproduction.

Does anyone have an idea why this is?

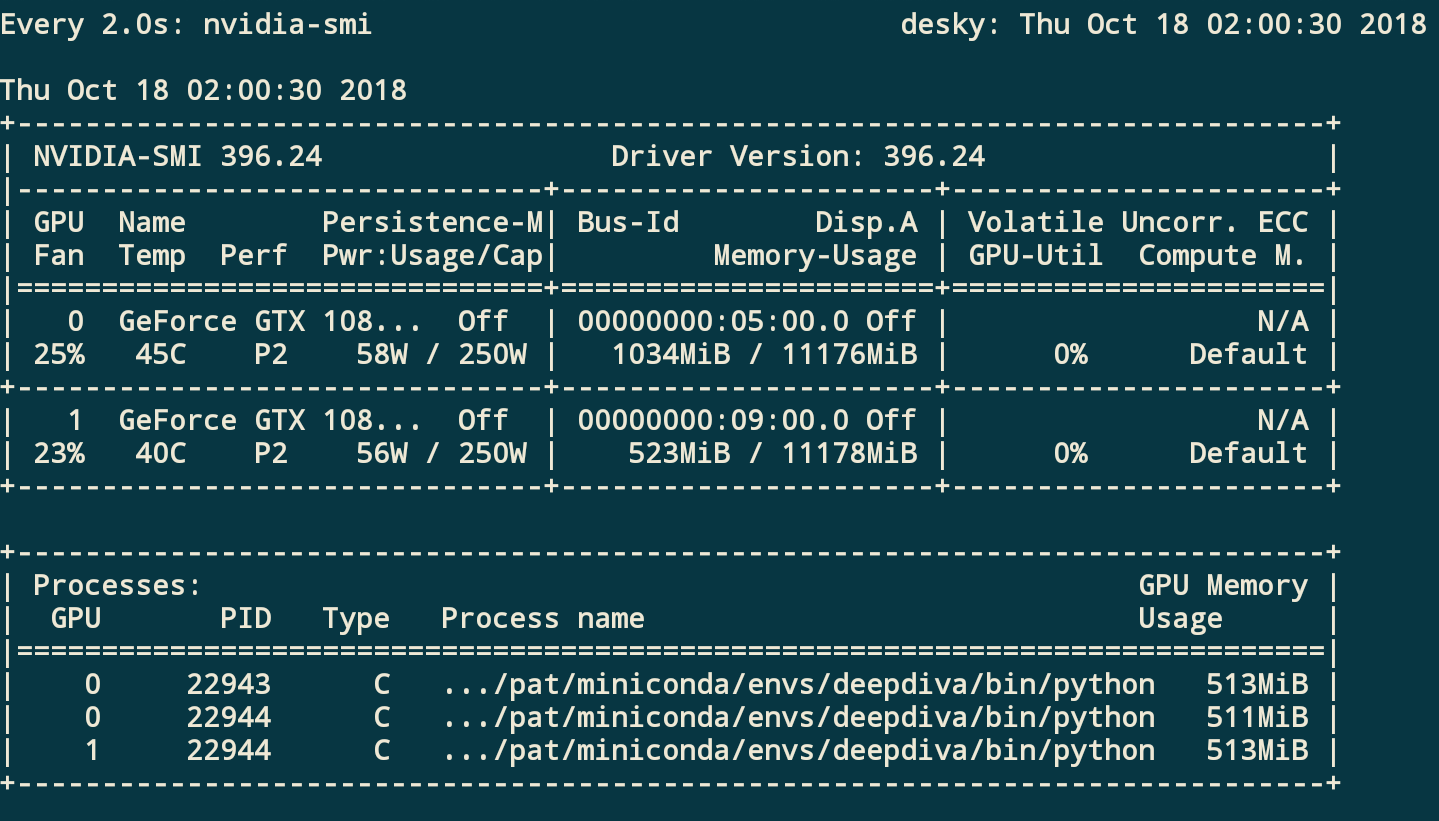

nvidia-smi output when printing tensor:

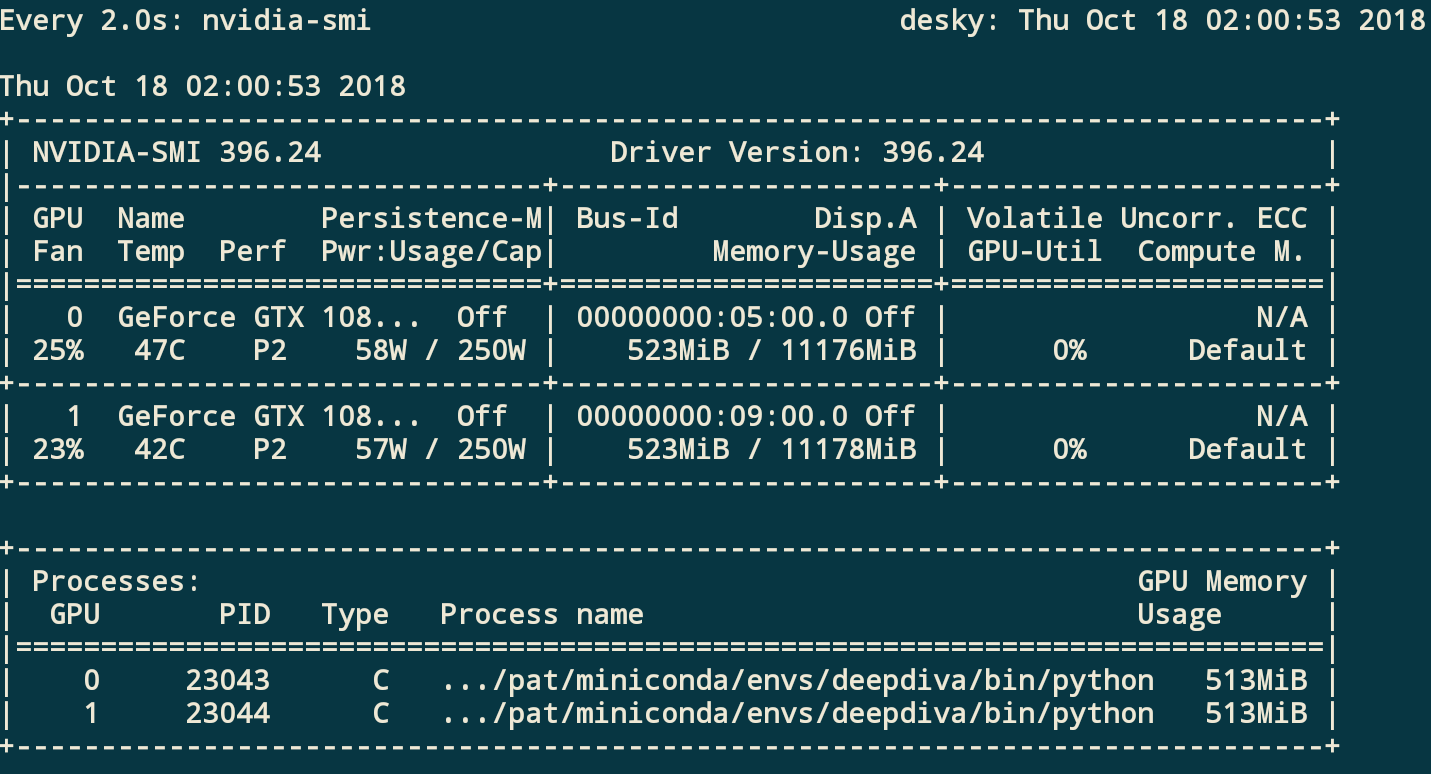

nvidia-smi output otherwise:

Code:

    import torch
    import torch.multiprocessing as mp


    def run(q, dev):
        t = torch.tensor([1], device=dev)
        # Read items until the None sentinel arrives
        for data in iter(q.get, None):
            new_t = torch.tensor([data], device=dev)
            t = torch.cat((t, new_t), dim=0)

            # Causes the copy
            print(t)

            # None of the following causes the copy
            # (use one of them instead of print(t))
            # print(t.size())
            # print('t')
            # continue

        # Put the sentinel back so the other worker also stops
        q.put(None)


    if __name__ == '__main__':
        ctx = mp.get_context('spawn')
        q = ctx.Queue()

        # One device, and one worker process, per visible GPU
        devices = [
            torch.device('cuda:{}'.format(i))
            for i in range(torch.cuda.device_count())
        ]
        processes = [
            ctx.Process(target=run, args=(q, dev))
            for dev in devices
        ]

        for pr in processes:
            pr.start()

        for d in range(1, 1000000):
            q.put(d)
        q.put(None)

        for pr in processes:
            pr.join()
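
For readers unfamiliar with the shutdown pattern used in `run`, here is a minimal CPU-only sketch of it. It does not reproduce the GPU issue. To keep it runnable anywhere, it swaps in `threading` and `queue.Queue` for the spawned processes and `ctx.Queue()`, and the names `worker` and `run_workers` are hypothetical. It only shows how `iter(q.get, None)` plus putting `None` back lets a single sentinel stop every consumer.

```python
import queue
import threading


def worker(q, results):
    # iter(q.get, None) keeps calling q.get() until it returns None.
    for item in iter(q.get, None):
        results.append(item)
    # Put the sentinel back so the next worker also sees it and stops.
    q.put(None)


def run_workers(n_workers, items):
    # Hypothetical helper: threads stand in for the spawned processes.
    q = queue.Queue()
    results = []
    threads = [
        threading.Thread(target=worker, args=(q, results))
        for _ in range(n_workers)
    ]
    for th in threads:
        th.start()
    for item in items:
        q.put(item)
    q.put(None)  # a single sentinel is enough for all workers
    for th in threads:
        th.join()
    # The last worker to exit leaves one sentinel behind in the queue.
    return sorted(results), q.qsize()


if __name__ == "__main__":
    consumed, leftover = run_workers(2, range(1, 6))
    print(consumed, leftover)
```

Because the queue is FIFO, the sentinel is only seen after all data items have been consumed, and each worker hands it on before exiting, so exactly one `None` remains in the queue at the end.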