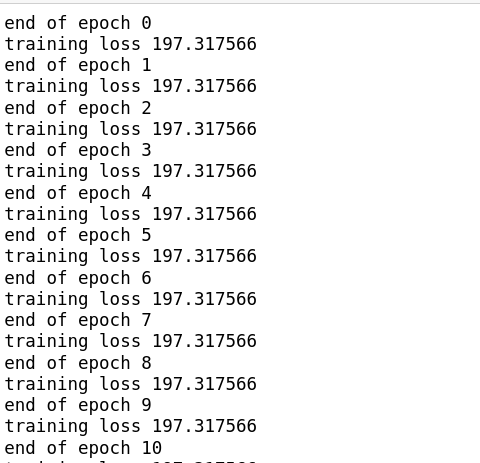

I'm implementing a simple autoencoder for the MNIST dataset, but the loss stays the same and shows no improvement.

This is the full code:

import torch
from torch import nn

class ModelCAE(nn.Module):
    def __init__(self):
        super().__init__()
        # encoder: conv layers
        self.encoder = nn.Sequential(
            nn.Conv2d(1, 16, 3, padding=1),
            nn.ReLU(True),
            nn.MaxPool2d(2, 2),
            nn.Conv2d(16, 4, 3, stride=2, padding=1),
            nn.ReLU(True),
        )
        # decoder: transposed conv layers
        self.decoder = nn.Sequential(
            nn.ConvTranspose2d(4, 16, 2, stride=2),
            nn.ReLU(True),
            nn.ConvTranspose2d(16, 1, 2, stride=2),
        )

    def forward(self, x):
        x = self.encoder(x)
        x = self.decoder(x)
        return x

model = ModelCAE().to("cuda")
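As a sanity check on the architecture, the spatial sizes can be worked out by hand with the standard output-size formulas for `Conv2d`, `MaxPool2d` and `ConvTranspose2d`. The sketch below is plain Python and assumes 28x28 MNIST inputs, dilation 1 and no output padding; the helper names `conv_out` and `conv_transpose_out` are made up for illustration.

```python
def conv_out(size, kernel, stride=1, padding=0):
    # Output size of Conv2d / MaxPool2d (dilation 1, floor mode)
    return (size + 2 * padding - kernel) // stride + 1

def conv_transpose_out(size, kernel, stride=1, padding=0):
    # Output size of ConvTranspose2d (dilation 1, output_padding 0)
    return (size - 1) * stride - 2 * padding + kernel

size = 28                                             # assumed MNIST input height/width
size = conv_out(size, kernel=3, padding=1)            # Conv2d(1, 16, 3, padding=1)
size = conv_out(size, kernel=2, stride=2)             # MaxPool2d(2, 2)
size = conv_out(size, kernel=3, stride=2, padding=1)  # Conv2d(16, 4, 3, stride=2, padding=1)
bottleneck = size
size = conv_transpose_out(size, kernel=2, stride=2)   # ConvTranspose2d(4, 16, 2, stride=2)
size = conv_transpose_out(size, kernel=2, stride=2)   # ConvTranspose2d(16, 1, 2, stride=2)
print(bottleneck, size)  # 7 28
```

Under these assumptions the output has the same 28x28 size as the input, so a shape mismatch is unlikely to be the reason the loss does not move.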

# loss function
def PSNR(img, target):
    img = torch.sigmoid(img)
    mse = torch.mean((img - target) ** 2)
    return 20 * torch.log10(255.0 / torch.sqrt(mse))
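A note on the direction of this metric: PSNR is defined as 20 * log10(peak / sqrt(MSE)), so it goes up as the reconstruction gets better. Minimizing it directly would therefore push the MSE up rather than down. The small worked example below uses plain Python and assumes pixel values scaled to [0, 1] (peak 1.0), which may not match the actual loader; the `psnr` helper is illustrative only.

```python
import math

def psnr(mse, peak=1.0):
    # PSNR in dB for a given mean squared error and peak pixel value
    return 20 * math.log10(peak / math.sqrt(mse))

print(f"{psnr(0.01):.1f}")    # 20.0 (larger error, lower PSNR)
print(f"{psnr(0.0001):.1f}")  # 40.0 (smaller error, higher PSNR)
```

If PSNR is wanted as a training objective, one option is to minimize its negative, or simply to minimize the MSE and report PSNR as a metric.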

# optimizer
optimizer = torch.optim.Adam(ModelCAE().parameters(), lr=1e-1, weight_decay=1e-8)

epochs = 20
outputs = []
losses = []

for epoch in range(epochs):
    train_loss = 0.0
    for index, batch in enumerate(train_loader):
        imgs, _ = batch
        imgs = imgs.to('cuda')
        optimizer.zero_grad()
        reconstructed = model(imgs)
        loss = PSNR(imgs, reconstructed)
        loss.backward()
        optimizer.step()
        losses.append(loss)
        train_loss += loss * imgs.size(0)
    train_loss = train_loss / len(train_loader)
    print(f"end of epoch {epoch}")
    print(f"training loss {train_loss:.6f}")
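One thing that stands out in the code above is that the optimizer is built from `ModelCAE().parameters()`, which is a fresh instance, so `optimizer.step()` never touches the weights of `model`. The sketch below shows a training step with the optimizer bound to the trained model. Several parts are assumptions rather than a confirmed fix: MSE replaces PSNR as the loss, the sigmoid is applied to the reconstruction instead of the input, the learning rate is lowered to 1e-3, and a random batch in [0, 1] stands in for MNIST.

```python
import torch
from torch import nn

torch.manual_seed(0)
device = "cuda" if torch.cuda.is_available() else "cpu"

# Same layers as ModelCAE, written inline to keep the sketch self-contained
model = nn.Sequential(
    nn.Conv2d(1, 16, 3, padding=1), nn.ReLU(True), nn.MaxPool2d(2, 2),
    nn.Conv2d(16, 4, 3, stride=2, padding=1), nn.ReLU(True),
    nn.ConvTranspose2d(4, 16, 2, stride=2), nn.ReLU(True),
    nn.ConvTranspose2d(16, 1, 2, stride=2),
).to(device)

# Optimize the parameters of the model that is actually being trained
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)  # lr is an assumed value
criterion = nn.MSELoss()  # assumed replacement for the PSNR loss

# Random stand-in for one MNIST batch in [0, 1] (assumption: no real data here)
imgs = torch.rand(8, 1, 28, 28, device=device)

before = [p.detach().clone() for p in model.parameters()]
for step in range(5):
    optimizer.zero_grad()
    reconstructed = torch.sigmoid(model(imgs))  # squash the output into [0, 1]
    loss = criterion(reconstructed, imgs)
    loss.backward()
    optimizer.step()
    print(step, loss.item())  # .item() gives a float and does not keep the graph

# Check that the optimizer really modified the trained model's weights
changed = any(not torch.equal(b, p.detach())
              for b, p in zip(before, model.parameters()))
print("weights updated:", changed)
```

When accumulating a per-sample loss as in the original loop (`loss * imgs.size(0)`), dividing by `len(train_loader.dataset)` rather than `len(train_loader)` would give the average per image.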