I am creating a layer that acts as both an embedding layer and a linear layer, so that the two share (tie) their weights.

    import torch
    import torch.nn as nn
    import torch.nn.functional as F


    class embedding_linear(nn.Module):
        # The default for dmodel refers to a global `dmodel` defined elsewhere in my script.
        def __init__(self, vocab_size, dmodel=dmodel, pad=True):
            '''
            Tied weights for decoder embedding layer and pre-softmax linear layer.

            vocab_size: size of the vocabulary; it may differ between source and target
            dmodel: dimension of the word vectors
            pad: if True, index 0 of the vocabulary is treated as the padding index
            '''
            super(embedding_linear, self).__init__()
            self.dmodel = dmodel
            self.weights = nn.Parameter(torch.Tensor(vocab_size, dmodel))
            self.bias = nn.Parameter(torch.Tensor(dmodel))
            # Initialise from a normal distribution with mean -1 and std 1.
            self.weights.data.normal_(-1, 1)
            if pad:
                self.pad_idx = 0
                # Zero out the embedding of the padding token.
                self.weights.data[0].fill_(0)
            else:
                self.pad_idx = -1

        def forward(self, inputs, emb=True):
            if emb:
                # Embedding lookup, scaled by sqrt(dmodel).
                outputs = F.embedding(inputs, self.weights * (self.dmodel ** 0.5), self.pad_idx, False, 2, False, False)
            else:
                # Linear projection with the same weights.
                outputs = F.linear(inputs, self.weights.t(), self.bias)
            return outputs
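
For comparison, here is a minimal sketch of the same weight tying using the built-in `nn.Embedding` and `nn.Linear` modules, with hypothetical sizes `vocab_size = 10` and `d_model = 4`. It relies on `nn.Linear(d_model, vocab_size)` storing its weight with shape `(vocab_size, d_model)`, the same shape as the embedding matrix, so one parameter can serve both layers without a transpose. This is not the code from the question, only a reference point for the shapes involved.

```python
import torch
import torch.nn as nn

# Hypothetical sizes, chosen only for illustration.
vocab_size, d_model = 10, 4

embedding = nn.Embedding(vocab_size, d_model, padding_idx=0)
projection = nn.Linear(d_model, vocab_size, bias=False)

# Both modules now hold the very same Parameter object.
projection.weight = embedding.weight

tokens = torch.tensor([[1, 2, 3]])            # (batch=1, seq_len=3)
hidden = embedding(tokens) * d_model ** 0.5   # (1, 3, d_model)
# The embeddings stand in for decoder output here, just to check shapes.
logits = projection(hidden)                   # (1, 3, vocab_size)

print(hidden.shape, logits.shape)
```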

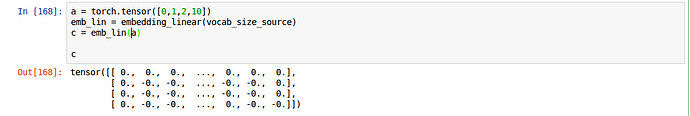

But when I test the layer with the following command, it always returns zeros, both negative and positive.

Initially I thought it must be a precision problem and tried casting the outputs to other types with `.type()`, but nothing changed.
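
On the precision guess: a negative zero is an exact IEEE 754 value rather than a rounding artifact, so casting to another float type would not be expected to change it. A minimal plain-Python sketch (the values are made up for illustration) shows signed zeros appearing when numbers of either sign are multiplied by zero:

```python
import math

# Hypothetical values standing in for one embedding row.
row = [1.5, -2.0, 0.25, -0.75]

# Multiplying by zero keeps the sign bit, so negative entries become -0.0.
zeroed = [value * 0.0 for value in row]

# -0.0 compares equal to 0.0; copysign exposes the sign bit.
signs = [math.copysign(1.0, value) for value in zeroed]

print(zeroed)
print(signs)
```

A mix of positive and negative zeros therefore hints that the values were scaled by zero somewhere, rather than lost to limited precision.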

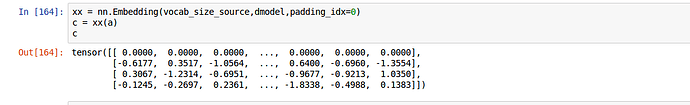

I then tried the official embedding layer and got this output, which was not all zeros.

I also compared my implementation with the one in the official repo, and they were almost the same.

What should I do, and why is it returning all zeros?
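
One thing that may be worth checking is the positional arguments in the `F.embedding` call. In the documented signature, `F.embedding(input, weight, padding_idx=None, max_norm=None, norm_type=2.0, scale_grad_by_freq=False, sparse=False)`, the fourth positional slot is `max_norm`, so the first `False` in the call is bound to `max_norm` rather than switching it off. Since `False == 0` in Python, this plausibly asks for every looked-up row to be renormalized to norm 0. The sketch below uses a made-up weight matrix and passes `max_norm=0.0` explicitly as a stand-in for that positional `False`; it is an illustration under that assumption, not a confirmed diagnosis.

```python
import torch
import torch.nn.functional as F

# Hypothetical 3 x 2 weight matrix; row 0 plays the role of the padding row.
weight = torch.tensor([[0.0, 0.0], [1.0, -2.0], [-3.0, 4.0]])
indices = torch.tensor([1, 2])

# max_norm=0.0 stands in for the positional False in the original call.
# Renormalization modifies the weight in place, so a clone is passed.
zeroed = F.embedding(indices, weight.clone(), padding_idx=0, max_norm=0.0, norm_type=2.0)

# Leaving max_norm at its default (None) skips renormalization.
kept = F.embedding(indices, weight, padding_idx=0)

print(zeroed)
print(kept)
```

If this is the cause, passing the options by keyword and leaving `max_norm` unset should give non-zero embeddings.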