Hello guys, I'm currently struggling with my research on deepfake detection. My dataset is DFCup, taken from last year's IEEE Signal Processing Cup, and its real and fake classes are imbalanced (approximately 1:5). I started by following a paper and implementing its method, and I handled the imbalance the same way the paper's authors did, using 100 batches of 256 images. The notebook I started from used 200 batches of 128 images. I have also tested 100 batches, but the result is just as bad.
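
For reference, here is a minimal sketch of one common way to weight a 1:5 split, using inverse class frequency. It is not necessarily the scheme the paper uses. The label encoding (0 = real, 1 = fake) and the function names are hypothetical. The "balanced" formula `N / (K * count_c)` is the same heuristic scikit-learn uses for `class_weight="balanced"`.

```python
import numpy as np

def inverse_frequency_weights(labels):
    """Per-class weights N / (K * count_c), so rare classes weigh more."""
    labels = np.asarray(labels)
    classes, counts = np.unique(labels, return_counts=True)
    weights = len(labels) / (len(classes) * counts)
    return dict(zip(classes.tolist(), weights.tolist()))

def bce_pos_weight(labels):
    """Ratio n_negative / n_positive, the usual value for a BCE positive-class weight."""
    labels = np.asarray(labels)
    n_pos = int((labels == 1).sum())
    n_neg = int((labels == 0).sum())
    return n_neg / n_pos

# Hypothetical label vector with a 1:5 real:fake ratio (0 = real, 1 = fake)
labels = np.array([0] * 100 + [1] * 500)
weights = inverse_frequency_weights(labels)  # {0: 3.0, 1: 0.6}
pos_weight = bce_pos_weight(labels)          # 0.2, because "fake" (label 1) is the majority
print(weights, pos_weight)
```

In PyTorch, these values would typically go to `weight` in `nn.CrossEntropyLoss` or `pos_weight` in `nn.BCEWithLogitsLoss`. Note that because fake is the majority class here, `pos_weight` comes out below 1. If fake is encoded as the positive class and the weight is instead set above 1, the model is pushed even further toward predicting Fake.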

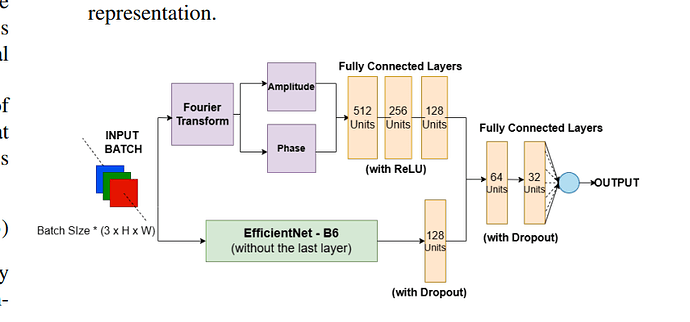

I am working on a deepfake detection model that fuses EfficientNet (Spatial) and a custom FFT branch (Frequency).

The Problem: Initially, I fed standard normalized inputs to both branches, but performance was poor. Suspecting that normalization (mean/std subtraction) distorts the frequency spectrum, I modified the forward pass to feed unnormalized (raw) images to the FFT branch while keeping normalized images for EfficientNet.
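
A quick check of that suspicion, assuming the normalization is the usual per-channel affine map `(x - mean) / std`. The 2D FFT is linear, so this map only changes the DC bin `[0, 0]` and rescales every other frequency bin by the constant `1 / std`. The random channel and the ImageNet red-channel statistics below are stand-ins, not values from the notebook.

```python
import numpy as np

rng = np.random.default_rng(0)
raw = rng.uniform(0.0, 1.0, size=(64, 64))  # stand-in for one image channel in [0, 1]
mean, std = 0.485, 0.229                    # assumed ImageNet red-channel statistics
normalized = (raw - mean) / std

F_raw = np.fft.fft2(raw)
F_norm = np.fft.fft2(normalized)

# Every non-DC bin of the normalized spectrum is the raw spectrum divided by std
non_dc = np.ones_like(F_raw, dtype=bool)
non_dc[0, 0] = False
print(np.allclose(F_norm[non_dc], F_raw[non_dc] / std))

# Only the DC bin picks up the extra -mean * H * W offset
print(np.isclose(F_norm[0, 0], (F_raw[0, 0] - mean * raw.size) / std))
```

If this holds for the actual preprocessing, the spatial-frequency pattern the FFT branch sees is the same up to a constant scale. On a log-magnitude spectrum, that scale becomes a constant offset, which a later normalization layer (e.g. BatchNorm) would largely absorb. So switching the FFT branch to raw inputs may not be expected to change much on its own.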

However, even with raw inputs to the FFT branch, the model's performance still does not improve. The result stays at around 50%, with the model predicting either all Fake or all Real :”<
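
A small diagnostic sketch for spotting this kind of collapse, assuming binary labels (0 = real, 1 = fake) and hard predictions. The helper name is hypothetical. With a 1:5 split, a model that outputs only Fake gets plain accuracy of about 0.83 but balanced accuracy of exactly 0.5. It is therefore worth checking which metric the "around 50%" refers to.

```python
import numpy as np

def collapse_report(y_true, y_pred):
    """Summarize hard predictions to detect a model that outputs a single class."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    tp = int(((y_pred == 1) & (y_true == 1)).sum())
    tn = int(((y_pred == 0) & (y_true == 0)).sum())
    fp = int(((y_pred == 1) & (y_true == 0)).sum())
    fn = int(((y_pred == 0) & (y_true == 1)).sum())
    recall_fake = tp / (tp + fn) if tp + fn else 0.0
    recall_real = tn / (tn + fp) if tn + fp else 0.0
    return {
        "accuracy": (tp + tn) / len(y_true),
        "balanced_accuracy": (recall_fake + recall_real) / 2,
        "fraction_predicted_fake": float((y_pred == 1).mean()),
        "confusion": {"tp": tp, "tn": tn, "fp": fp, "fn": fn},
    }

# Hypothetical 1:5 real:fake evaluation set and a collapsed "all Fake" model
y_true = np.array([0] * 100 + [1] * 500)
all_fake = np.ones_like(y_true)
report = collapse_report(y_true, all_fake)
print(report["accuracy"], report["balanced_accuracy"], report["fraction_predicted_fake"])
```

Logging `fraction_predicted_fake` each epoch shows quickly whether training is drifting toward a single class.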

I'm looking forward to any advice. Thank you all very much.

Last but not least, I apologize because I'm not very good at English :”<. I have been struggling with this problem all day and decided to post here.

Here is my notebook, and below is the combined model architecture.