Here is the relevant part of my code:

os.environ["KMP_DUPLICATE_LIB_OK"]="TRUE"

parser = argparse.ArgumentParser()

parser.add_argument('-j', '--workers', default=12, type=int, metavar='N', help='number of data loading workers')

parser.add_argument('--epochs', default=1000, type=int, metavar='N', help='number of total epochs to run')

parser.add_argument('--start-epoch', default=0, type=int, metavar='N', help='manual epoch number (useful on restarts)')

parser.add_argument('-b', '--batch-size', default=40, type=int, metavar='N')

parser.add_argument('--lr', '--learning-rate', default=0.001, type=float, metavar='LR', dest='lr')

parser.add_argument('--momentum', default=0.9, type=float, metavar='M')

parser.add_argument('--wd', '--weight-decay', default=1e-1, type=float, metavar='W', dest='weight_decay')

parser.add_argument('-p', '--print-freq', default=10, type=int, metavar='N', help='print frequency')

parser.add_argument('--resume', default=None, type=str, metavar='PATH', help='path to latest checkpoint')

parser.add_argument('--data_set', type=int, default=1)

parser.add_argument('--gpu', type=str, default='0')

args = parser.parse_args()

now = datetime.datetime.now()

time_str = now.strftime("[%m-%d]-[%H:%M]-")

project_path = './'

log_txt_path = project_path + 'log/' + time_str + 'set' + str(args.data_set) + '-log.txt'

log_curve_path = project_path + 'log/' + time_str + 'set' + str(args.data_set) + '-log.png'

checkpoint_path = project_path + 'checkpoint/' + time_str + 'set' + str(args.data_set) + '-model.pth'

best_checkpoint_path = project_path + 'checkpoint/' + time_str + 'set' + str(args.data_set) + '-model_best.pth'

os.environ["CUDA_VISIBLE_DEVICES"] = args.gpu

pretrained_checkpoint_path = './pretrain_DFEW.pth'

def SoftCrossEntropy(inputs, target, reduction='average'):
    log_likelihood = -torch.log_softmax(inputs, dim=1)
    batch = inputs.shape[0]
    if reduction == 'average':
        loss = torch.sum(torch.mul(log_likelihood, target)) / batch
    else:
        loss = torch.sum(torch.mul(log_likelihood, target))
    return loss

def main():
    best_acc = 0
    recorder = RecorderMeter(args.epochs)

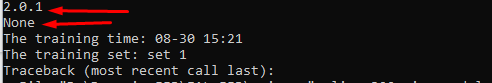

    print('The training time: ' + now.strftime("%m-%d %H:%M"))
    print('The training set: set ' + str(args.data_set))
    with open(log_txt_path, 'a') as f:
        f.write('The training set: set ' + str(args.data_set) + '\n')

    # create model and load pre_trained parameters
    model = GenerateModel()
    model = torch.nn.DataParallel(model).cuda()

    # CE_LOSS, keepdim
    criterion = nn.CrossEntropyLoss(reduction='none').cuda()
    optimizer = torch.optim.SGD(model.parameters(), args.lr, momentum=args.momentum, weight_decay=args.weight_decay)

    # lr_decay
    scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=7, gamma=0.5)
    # scheduler = torch.optim.lr_scheduler.CosineAnnealingWarmRestarts(optimizer, T_0=10)

Can anyone please tell me where the error is?

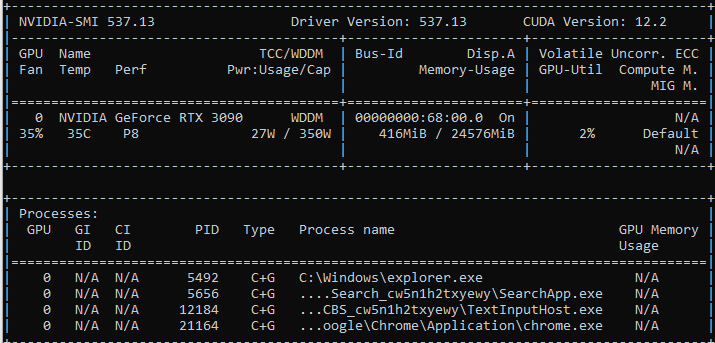

I also have an up-to-date CUDA driver installed.
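
For reference, here is a minimal sketch of a standalone check that reports what PyTorch itself sees, since an up-to-date driver alone does not guarantee that the installed PyTorch build can use the GPU. The function name `cuda_report` is hypothetical and not part of the training script; it only reads the `CUDA_VISIBLE_DEVICES` variable that the script sets from `--gpu` and calls standard `torch.cuda` queries.

```python
import os


def cuda_report():
    """Collect basic facts about the CUDA setup as PyTorch sees it.

    Hypothetical helper for diagnosis only; not part of the training script.
    """
    report = {"CUDA_VISIBLE_DEVICES": os.environ.get("CUDA_VISIBLE_DEVICES")}
    try:
        import torch
    except ImportError:
        # PyTorch is not importable in this environment at all.
        report["torch_installed"] = False
        return report

    report["torch_installed"] = True
    report["torch_version"] = torch.__version__
    # None here usually indicates a build without CUDA support,
    # regardless of which driver is installed.
    report["built_with_cuda"] = torch.version.cuda
    report["cuda_available"] = torch.cuda.is_available()
    report["device_count"] = torch.cuda.device_count()
    if report["cuda_available"]:
        report["device_names"] = [
            torch.cuda.get_device_name(i) for i in range(report["device_count"])
        ]
    return report


if __name__ == "__main__":
    for key, value in cuda_report().items():
        print(f"{key}: {value}")
```

If `cuda_available` comes back `False`, or `device_count` is `0` for the index passed to `--gpu`, the calls to `.cuda()` in `main()` would be expected to fail, so including this output alongside the screenshots may help narrow things down.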