Hi all. First, sorry for my English, I am still learning.

I am working on my first object detection project. For this purpose I am following an example from the official PyTorch website, but I decided to use my own images. Specifically, the dataset contains 2 images of airplanes:

https://pytorch.org/docs/stable/torchvision/models.html#faster-r-cnn

This is my code. As on the official site, I download a pretrained model:

model = torchvision.models.detection.fasterrcnn_resnet50_fpn(pretrained=True)

Then I prepared my dataset:

set_data = torchvision.datasets.ImageFolder(
    "/home/mjack3/Imágenes",
    transform=transforms.Compose([
        transforms.Resize([200, 200]),
        transforms.ToTensor(),
    ]),
)

testloader = torch.utils.data.DataLoader(set_data, batch_size=2)

img, label = next(iter(testloader))

And finally I feed the images to my model:

predictions = model(img)

predictions

Results for 2 images:

[{'boxes': tensor([[  2.1480,  41.3460, 200.0000, 157.1328],
        [ 16.6714, 144.5215,  36.9665, 152.5237],
        [178.3848, 143.8716, 198.9536, 150.1973],
        [ 15.4439, 145.8342,  28.8368, 152.8024],
        [  0.4650, 144.6138,  14.9021, 152.9334],
        [ 38.4139, 144.2619,  47.7382, 152.0511],
        [ 26.9428, 144.4103,  37.6303, 151.7255],
        [ 29.1579, 143.9854,  55.7968, 152.4163],
        [  0.6116, 144.8602,  15.0447, 152.9558],
        [ 31.8172, 145.3369,  45.8835, 151.8564],
        [179.8206, 143.0551, 191.4160, 149.7137],
        [ 15.6531, 144.1850,  41.4935, 152.6716],
        [  0.0000,  65.7286, 110.4369, 149.1068],
        [ 32.3294, 145.6019,  39.6292, 151.9699],
        [ 34.2337, 142.1913,  50.6896, 151.5474],
        [ 35.4431, 145.8370,  40.0628, 151.6249],
        [ 14.8859, 145.5617,  22.5705, 152.6457],
        [ 93.2531, 144.5989, 108.6908, 151.4838],
        [ 30.6879, 145.0558,  36.9574, 151.1745],
        [ 10.0094, 144.1053,  51.3472, 152.9482],
        [ 35.6446, 143.2554,  48.3307, 151.7106],
        [ 20.3258, 146.4695,  26.9378, 152.7105],
        [145.7043, 118.8403, 156.8973, 149.5791]], grad_fn=),
  'labels': tensor([5, 3, 3, 3, 3, 3, 3, 3, 8, 3, 3, 8, 5, 3, 3, 3, 3, 3, 3, 3, 8, 3, 1]),
  'scores': tensor([0.9896, 0.8164, 0.6273, 0.4471, 0.4334, 0.3455, 0.1999, 0.1908, 0.1772,
        0.1532, 0.1505, 0.1308, 0.1236, 0.1062, 0.0980, 0.0979, 0.0968, 0.0916,
        0.0915, 0.0851, 0.0832, 0.0646, 0.0511], grad_fn=)},
 {'boxes': tensor([[136.1270, 120.9056, 147.9458, 131.6058],
        [136.2942, 120.7577, 144.5860, 128.0632],
        [139.5699, 123.0861, 146.5174, 131.7614]], grad_fn=),
  'labels': tensor([5, 5, 5]),
  'scores': tensor([0.9813, 0.5450, 0.2220], grad_fn=)}]

So the idea is to take the index of the maximum score and use it to get the corresponding label and bounding box, like this:

_, index_pred = torch.max(predictions[0]["scores"].data, 0)

label_pred = predictions[0]["labels"][index_pred].item()

bb1 = predictions[0]["boxes"][index_pred].data.numpy()
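
As a side note, the scores in the output above appear to be sorted from highest to lowest, so the best detection is also the first entry. Here is a minimal sketch, assuming one wants every detection above a confidence threshold instead of only the best one. The helper name `filter_detections` and the 0.5 threshold are made up for illustration, and plain lists stand in for the tensors so it runs without PyTorch:

```python
def filter_detections(prediction, threshold=0.5):
    """Keep only detections whose score is at least `threshold`.

    `prediction` mimics one element of the model output: a dict with
    "boxes", "labels" and "scores" (plain lists here instead of tensors).
    """
    kept = {"boxes": [], "labels": [], "scores": []}
    for box, label, score in zip(
        prediction["boxes"], prediction["labels"], prediction["scores"]
    ):
        if score >= threshold:
            kept["boxes"].append(box)
            kept["labels"].append(label)
            kept["scores"].append(score)
    return kept


# Values copied from the prediction for the second image above.
second_image = {
    "boxes": [
        [136.1270, 120.9056, 147.9458, 131.6058],
        [136.2942, 120.7577, 144.5860, 128.0632],
        [139.5699, 123.0861, 146.5174, 131.7614],
    ],
    "labels": [5, 5, 5],
    "scores": [0.9813, 0.5450, 0.2220],
}

confident = filter_detections(second_image, threshold=0.5)
print(len(confident["boxes"]))  # number of boxes scoring at least 0.5
```

With real tensors, the same selection could probably be done with a boolean mask such as `scores >= 0.5` applied to each of the three entries.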

Now it is time to draw the results. This is what I tried:

rect = patches.Rectangle((bb1[0], bb1[1]), bb1[2], bb1[3], linewidth=2, edgecolor='r', facecolor='none')

This is what I get for each predicted image:

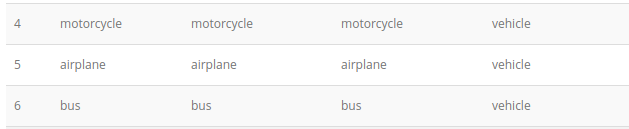

In all cases I get 5 as the label index, which is airplane in COCO, but the bounding boxes are not drawn correctly.

What am I doing wrong?
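
One thing I am not sure about: as far as I understand, the torchvision detection models return each box as `[x1, y1, x2, y2]` (two opposite corners), while `patches.Rectangle` expects an anchor point `(x, y)` followed by a width and a height. If that is right, the last two box values would need to be converted before drawing. A minimal sketch of that conversion, using a hypothetical helper `xyxy_to_xywh` and plain Python numbers so it runs without PyTorch:

```python
def xyxy_to_xywh(box):
    """Convert a box given as [x1, y1, x2, y2] into (x, y, width, height)."""
    x1, y1, x2, y2 = box
    return x1, y1, x2 - x1, y2 - y1


# Top-scoring box of the first image, copied from the output above.
bb1 = [2.1480, 41.3460, 200.0000, 157.1328]

x, y, width, height = xyxy_to_xywh(bb1)
print(x, y, width, height)

# Assumption: these values could then be passed to matplotlib as
# patches.Rectangle((x, y), width, height,
#                   linewidth=2, edgecolor="r", facecolor="none")
```

Since the images go through `Resize([200, 200])`, the boxes should be in the coordinates of the resized 200x200 image, so the rectangle would presumably have to be drawn on the resized image and not on the original file.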