I want an RNN model to predict a sequence like the one below:

[1,2] [2,3] [3,4] ...

t1 t2 t3

That is, if I give it time steps t1 and t2, it should predict the value at time step t3.

I used 3x5x2 mini-batches: each column is a sequence of 3 time steps, and the depth holds the values of each time step.

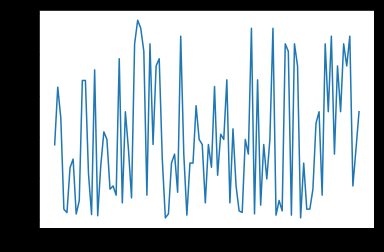
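To make the layout concrete, here is a minimal sketch of how one such 3x5x2 mini-batch could be built. It assumes time step t has the value [t, t + 1], as in the example sequence, and that consecutive columns start one time step apart; `make_batch` and its parameters are hypothetical names used only for illustration.

```python
import torch


def make_batch(start, seq_len=3, batch_size=5):
    """Return a (seq_len, batch_size, 2) tensor of sliding windows.

    Assumes time step t has the value [t, t + 1], as in the example sequence.
    """
    # Entry [s, b] is the time index of row s in column b: start + s + b.
    steps = (torch.arange(seq_len).unsqueeze(1)
             + torch.arange(batch_size).unsqueeze(0)
             + start)
    # The depth dimension holds the two values of each time step: [t, t + 1].
    return torch.stack([steps, steps + 1], dim=-1).float()


batch = make_batch(start=1)
print(batch.shape)     # (seq_len, batch, features) = (3, 5, 2)
print(batch[:, 0, :])  # first column: [1, 2], [2, 3], [3, 4]
```

This is the (seq_len, batch, input_size) order that `nn.LSTM` expects when `batch_first` is left at its default of `False`.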

The model ran, but when it finished, the MSELoss just looked like a mess (I logged the loss every 100 epochs):

Here is my model.

    import torch
    import torch.nn as nn


    class Model(nn.Module):
        def __init__(self, input_size, batch_size, hidden_size, num_layers=1, dropout=0.0):
            super(Model, self).__init__()
            self.hidden_size = hidden_size
            self.batch_size = batch_size
            self.num_layers = num_layers
            self.zero_hidden()
            # nn.LSTM applies dropout only between stacked layers; the original
            # default of dropout=1 would zero every inter-layer activation.
            self.lstm = nn.LSTM(input_size=input_size, hidden_size=hidden_size,
                                num_layers=num_layers, dropout=dropout)
            self.linear1 = nn.Linear(hidden_size, 20)
            self.linear2 = nn.Linear(20, 10)
            self.linear3 = nn.Linear(10, 3)
            self.relu = nn.ReLU()
            self.sigmoid = nn.Sigmoid()
            self.dropout = nn.Dropout(p=0.2)  # defined but not used in forward()

        def zero_hidden(self):
            # Fresh (h_0, c_0), each of shape (num_layers, batch_size, hidden_size).
            # Plain tensors replace the deprecated autograd.Variable wrapper.
            self.hidden = (torch.zeros(self.num_layers, self.batch_size, self.hidden_size),
                           torch.zeros(self.num_layers, self.batch_size, self.hidden_size))

        def forward(self, seq):
            # seq: (seq_len, batch_size, input_size), since batch_first defaults to False.
            lstm_out, self.hidden = self.lstm(seq, self.hidden)
            hidden1 = self.sigmoid(self.linear1(lstm_out))
            hidden2 = self.relu(self.linear2(hidden1))
            hidden3 = self.linear3(hidden2)
            return hidden3
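For reference, the kind of training loop described above (MSE loss, logged every 100 epochs) can be sketched as follows. To stay runnable on its own, it uses a small stand-in network, `TinyLSTM`, rather than the `Model` class. The optimizer, learning rate, hidden size, input scaling, and the choice to predict only from the last time step are all assumptions made for illustration, not details of the original run.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)


# Hypothetical stand-in for the model, kept small so the sketch is self-contained.
class TinyLSTM(nn.Module):
    def __init__(self, input_size=2, hidden_size=16):
        super().__init__()
        self.lstm = nn.LSTM(input_size, hidden_size)
        self.out = nn.Linear(hidden_size, input_size)

    def forward(self, seq):
        # No hidden state is passed in, so nn.LSTM starts from zeros on every call.
        lstm_out, _ = self.lstm(seq)
        return self.out(lstm_out[-1])  # predict from the last time step only


# Toy data: time step t has the value [t, t + 1]; inputs are t1, t2 and the target is t3.
starts = torch.arange(1, 6).float()                            # 5 sequences per batch
t = torch.stack([starts, starts + 1], dim=0)                   # (2, 5): indices of t1, t2
inputs = torch.stack([t, t + 1], dim=-1) / 10.0                # (2, 5, 2), scaled (assumption)
target = torch.stack([starts + 2, starts + 3], dim=-1) / 10.0  # (5, 2): values at t3

model = TinyLSTM()
criterion = nn.MSELoss()
optimizer = torch.optim.Adam(model.parameters(), lr=0.01)  # optimizer choice is an assumption

losses = []
for epoch in range(500):
    optimizer.zero_grad()
    loss = criterion(model(inputs), target)
    loss.backward()
    optimizer.step()
    if epoch % 100 == 0:
        # Log the loss every 100 epochs, as in the description above.
        losses.append(loss.item())
        print(f"epoch {epoch}: loss {loss.item():.6f}")
```

Because the stand-in never stores the hidden state between calls, each batch starts from zeros. A model that keeps `self.hidden` across calls, as the one above does, would need that state reset or detached before each backward pass.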