Epoch [1/5], Iter [1/51200] TotalLoss: 14.3430 Loss1: 3.4093 Loss2: 3.7797 Loss3: 3.4102 Loss3: 3.7438

Epoch [1/5], Iter [2/51200] TotalLoss: 9.1436 Loss1: 2.0375 Loss2: 2.9503 Loss3: 2.7624 Loss3: 1.3935

Epoch [1/5], Iter [3/51200] TotalLoss: 12.7019 Loss1: 3.1669 Loss2: 3.1706 Loss3: 3.1461 Loss3: 3.2183

Epoch [1/5], Iter [4/51200] TotalLoss: 199.0285 Loss1: 45.2067 Loss2: 60.3769 Loss3: 52.5529 Loss3: 40.8920

Epoch [1/5], Iter [5/51200] TotalLoss: 13.5216 Loss1: 3.3813 Loss2: 3.3809 Loss3: 3.3761 Loss3: 3.3833

Epoch [1/5], Iter [6/51200] TotalLoss: 12.3115 Loss1: 3.0906 Loss2: 3.0759 Loss3: 3.0606 Loss3: 3.0844

Epoch [1/5], Iter [7/51200] TotalLoss: 9.6911 Loss1: 2.4250 Loss2: 2.0567 Loss3: 2.1717 Loss3: 3.0377

Epoch [1/5], Iter [8/51200] TotalLoss: 11.4812 Loss1: 2.8772 Loss2: 2.8731 Loss3: 2.8619 Loss3: 2.8690

Epoch [1/5], Iter [9/51200] TotalLoss: 7.7518 Loss1: 1.9965 Loss2: 1.8900 Loss3: 1.9414 Loss3: 1.9240

Epoch [1/5], Iter [10/51200] TotalLoss: 5.8163 Loss1: 1.3725 Loss2: 1.4375 Loss3: 1.5680 Loss3: 1.4383

Epoch [1/5], Iter [11/51200] TotalLoss: 5.2633 Loss1: 1.3306 Loss2: 1.3322 Loss3: 1.2640 Loss3: 1.3365

Epoch [1/5], Iter [12/51200] TotalLoss: 3.2123 Loss1: 0.8192 Loss2: 0.7681 Loss3: 0.7401 Loss3: 0.8849

Epoch [1/5], Iter [13/51200] TotalLoss: 2.6675 Loss1: 0.6967 Loss2: 0.6703 Loss3: 0.6426 Loss3: 0.6579

Epoch [1/5], Iter [14/51200] TotalLoss: 2.8774 Loss1: 0.7332 Loss2: 0.7385 Loss3: 0.6811 Loss3: 0.7245

Epoch [1/5], Iter [15/51200] TotalLoss: 2.8546 Loss1: 0.7366 Loss2: 0.7264 Loss3: 0.7202 Loss3: 0.6713

Epoch [1/5], Iter [16/51200] TotalLoss: 2.7758 Loss1: 0.6806 Loss2: 0.6549 Loss3: 0.7345 Loss3: 0.7058

Epoch [1/5], Iter [17/51200] TotalLoss: 2.8139 Loss1: 0.7092 Loss2: 0.6574 Loss3: 0.7800 Loss3: 0.6674

Epoch [1/5], Iter [18/51200] TotalLoss: 2.7282 Loss1: 0.6225 Loss2: 0.6997 Loss3: 0.7237 Loss3: 0.6823

Epoch [1/5], Iter [19/51200] TotalLoss: 2.8259 Loss1: 0.7428 Loss2: 0.7204 Loss3: 0.6845 Loss3: 0.6783

Epoch [1/5], Iter [20/51200] TotalLoss: 2.5689 Loss1: 0.6701 Loss2: 0.6525 Loss3: 0.6837 Loss3: 0.5626

Epoch [1/5], Iter [21/51200] TotalLoss: 2.7335 Loss1: 0.6944 Loss2: 0.7051 Loss3: 0.6600 Loss3: 0.6740

Epoch [1/5], Iter [22/51200] TotalLoss: 2.8239 Loss1: 0.6977 Loss2: 0.7478 Loss3: 0.6584 Loss3: 0.7199

Epoch [1/5], Iter [23/51200] TotalLoss: 2.7279 Loss1: 0.7237 Loss2: 0.6032 Loss3: 0.6741 Loss3: 0.7270

Epoch [1/5], Iter [24/51200] TotalLoss: 2.6124 Loss1: 0.6750 Loss2: 0.6018 Loss3: 0.6210 Loss3: 0.7146

Epoch [1/5], Iter [25/51200] TotalLoss: 2.9644 Loss1: 0.7071 Loss2: 0.6792 Loss3: 0.7112 Loss3: 0.8669

Epoch [1/5], Iter [26/51200] TotalLoss: 3.0921 Loss1: 0.6923 Loss2: 0.7369 Loss3: 0.6726 Loss3: 0.9902

Epoch [1/5], Iter [27/51200] TotalLoss: 3.1947 Loss1: 0.7637 Loss2: 0.8234 Loss3: 0.7893 Loss3: 0.8183

Epoch [1/5], Iter [28/51200] TotalLoss: 2.9257 Loss1: 0.6981 Loss2: 0.7647 Loss3: 0.6602 Loss3: 0.8028

My network has a VGG-like architecture, and the task is to recognize CAPTCHAs.

It’s a multi-label task using a CNN.

But it didn’t work: it cannot even fit two images after many training steps…
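
The log above is consistent with TotalLoss being the plain sum of the four per-character losses (for iteration 1, 3.4093 + 3.7797 + 3.4102 + 3.7438 = 14.3430). A minimal sketch of that kind of setup and of the "fit two images" sanity check is below. It assumes one cross-entropy head per character position; the class count, the tiny backbone, and the names `MultiHeadCaptchaNet` and `total_loss` are illustrative assumptions, not the poster's actual code.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

NUM_CHARS = 4      # assumed: four character positions, matching the four logged losses
NUM_CLASSES = 36   # assumed: digits plus lowercase letters


class MultiHeadCaptchaNet(nn.Module):
    """Tiny stand-in for a VGG-like backbone with one classifier head per character."""

    def __init__(self):
        super().__init__()
        self.backbone = nn.Sequential(
            nn.Conv2d(1, 16, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(4),
            nn.Flatten(),
        )
        self.heads = nn.ModuleList(
            [nn.Linear(16 * 4 * 4, NUM_CLASSES) for _ in range(NUM_CHARS)]
        )

    def forward(self, x):
        features = self.backbone(x)
        # One logit tensor of shape (batch, NUM_CLASSES) per character position.
        return [head(features) for head in self.heads]


def total_loss(logits_per_char, targets):
    """targets: LongTensor of shape (batch, NUM_CHARS) holding class indices."""
    losses = [
        F.cross_entropy(logits, targets[:, k])
        for k, logits in enumerate(logits_per_char)
    ]
    return sum(losses), losses


torch.manual_seed(0)
model = MultiHeadCaptchaNet()
images = torch.randn(2, 1, 32, 96)                      # two fake images
labels = torch.randint(0, NUM_CLASSES, (2, NUM_CHARS))  # fake character labels
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)

# Sanity check: repeatedly train on the same two images and watch the loss fall.
first = None
for step in range(200):
    optimizer.zero_grad()
    loss, parts = total_loss(model(images), labels)
    loss.backward()
    optimizer.step()
    if first is None:
        first = loss.item()
last = loss.item()
print(f"first={first:.4f} last={last:.4f}")
```

One possible reading of the log, offered as a guess rather than a diagnosis: each loss levels off near 0.69, which is close to ln 2 ≈ 0.693. That is the cross-entropy a head would report if it gave the same 50/50 prediction for two images with different labels, so it may be worth checking whether the network produces different outputs for the two inputs at all.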

How is your loss function defined?

I have a similar problem where I have a simple model which won’t overfit despite not using regularising techniques like dropout.

My model looks as follows:

    Net(
      (feature_extractor): Sequential(
        (0): Conv2d(1, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
        (1): ReLU()
        (2): Conv2d(64, 128, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
        (3): ReLU()
        (4): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (5): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
        (6): Conv2d(128, 256, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
        (7): ReLU()
        (8): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (9): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
      )
      (classifier): Sequential(
        (0): Linear(in_features=65536, out_features=256, bias=True)
        (1): ReLU()
        (2): BatchNorm1d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (3): Linear(in_features=256, out_features=1, bias=True)
      )
    )

and my training loop looks as follows:

    import time

    import numpy as np
    import torch
    import torch.nn.functional as F
    import torch.optim as optim

    # model, train_loader and batch_size are defined elsewhere
    lr = np.linspace(0.01, 1./(1000**2), 25)  # one learning rate per epoch
    torch.backends.cudnn.benchmark = True
    torch.backends.cudnn.fastest = True

    start = time.time()
    model.train()
    train_loss = []
    train_accu = []
    i = 0
    for epoch in range(25):
        optimizer = optim.Adam(model.parameters(), lr=lr[epoch])
        for image, label in train_loader:
            data, target = image.float().cuda(), label.float().cuda()
            optimizer.zero_grad()
            output = model(data)
            loss = F.mse_loss(output, target.view(output.size()))
            loss.backward()  # calc gradients
            train_loss.append(loss.item())  # record the loss (loss.data[0] fails on 0-dim tensors)
            optimizer.step()  # update weights
            prediction = torch.floor(output).int()
            accuracy = (prediction.eq(target.view(output.size()).int()).sum().float()/batch_size)*100
            train_accu.append(accuracy)
            if i % 10 == 0:
                print('Epoch:',str(epoch),'Train Step: {}\tLoss: {:.3f}\tAccuracy: {:.3f}'.format(i, loss.item(), accuracy))
                #print('TARGET:',target)
                #print('OUTPUT:',output.view(target.size()))
            i += 1
    end = time.time()
    print('TRAIN TIME:')
    print('%.2gs'%(end-start))

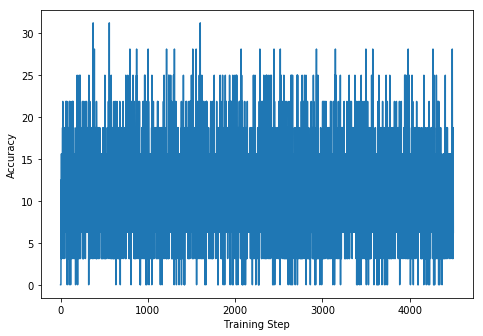

I would have thought that after 10 iterations the model would have at least started to increase in accuracy towards overfitting but so far I’m just getting a bouncing of accuracy below ~30%.

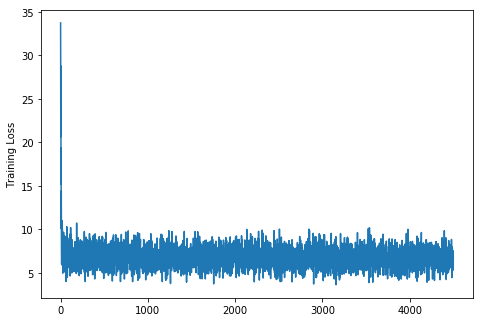

Loss:

Accuracy:

TL;DR:

- mse_loss
- Adam optimizer
- Learning rate decay
- No dropout (but batch norm is included)
- Not overfitting after 10 epochs

Thanks in advance for any guidance on this issue!
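
One thing that might be worth ruling out is the accuracy metric itself. The loop above turns the regression output into a prediction with torch.floor, so an output of 2.9 for a target of 3 counts as wrong. The sketch below compares floor against rounding on made-up values; it assumes the labels are integer-valued, which the original code suggests but does not confirm. It also averages over the actual number of samples instead of dividing by batch_size, which avoids undercounting on a short final batch.

```python
import torch

output = torch.tensor([[2.9], [3.1], [0.6], [-0.2]])  # made-up regression outputs
target = torch.tensor([3.0, 3.0, 1.0, 0.0])           # made-up integer-valued labels
target = target.view(output.size())

floor_pred = torch.floor(output).int()  # 2.9 -> 2, -0.2 -> -1
round_pred = torch.round(output).int()  # 2.9 -> 3, -0.2 -> 0

# mean() divides by the real number of samples in the batch.
floor_acc = floor_pred.eq(target.int()).float().mean().item() * 100
round_acc = round_pred.eq(target.int()).float().mean().item() * 100
print(floor_acc, round_acc)  # 25.0 100.0
```

If the two numbers differ a lot on the real data, the low reported accuracy may partly come from the metric and not only from the model.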

Could you try changing the learning rate on a single optimizer, either by setting its param_groups[0]['lr'] or by using torch.optim.lr_scheduler?

Currently you are re-initializing the optimizer in each epoch, so it loses its accumulated state (momentum etc.).

Maybe it won’t have a big effect, but it’s worth a first shot!
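
A minimal sketch of the param_groups variant, keeping the same linspace schedule as in the question. The nn.Linear model and the random batch are placeholders for illustration only; the real model and train_loader would go in their place.

```python
import numpy as np
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim

model = nn.Linear(10, 1)  # placeholder model for illustration
num_epochs = 25
lr_schedule = np.linspace(0.01, 1.0 / (1000 ** 2), num_epochs)

# Create the optimizer once so Adam's running moment estimates persist across epochs.
optimizer = optim.Adam(model.parameters(), lr=float(lr_schedule[0]))

for epoch in range(num_epochs):
    # Update the learning rate in place instead of building a new optimizer.
    for group in optimizer.param_groups:
        group["lr"] = float(lr_schedule[epoch])

    # Dummy batch standing in for one pass over the real train_loader.
    data, target = torch.randn(8, 10), torch.randn(8, 1)
    optimizer.zero_grad()
    loss = F.mse_loss(model(data), target)
    loss.backward()
    optimizer.step()

final_lr = optimizer.param_groups[0]["lr"]
# Adam keeps a per-parameter step counter; it keeps counting because the state was never reset.
state_steps = [int(state["step"]) for state in optimizer.state.values()]
print(final_lr, state_steps)
```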

Thanks for the reply, I’m now using:

optimizer = optim.Adam(model.parameters(), lr=0.01)

scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=1, gamma=0.1)

and for training I simply include the line:

scheduler.step()

under the for epoch in range(25): loop.

I will update once it has gone through all its iterations…

UPDATE:

Unfortunately, that hasn’t had an effect on training accuracy, but thanks for pointing out that bug.
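
It may help to print the learning rates this StepLR configuration actually produces, since step_size=1 with gamma=0.1 divides the rate by 10 every epoch. The sketch below uses a placeholder nn.Linear model and an empty optimizer.step() in place of a real epoch; only the scheduler arithmetic is the point.

```python
import torch.nn as nn
import torch.optim as optim

model = nn.Linear(10, 1)  # placeholder model for illustration
optimizer = optim.Adam(model.parameters(), lr=0.01)
scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=1, gamma=0.1)

lrs = []
for epoch in range(5):
    lrs.append(optimizer.param_groups[0]["lr"])
    optimizer.step()   # stands in for a full epoch of training steps
    scheduler.step()   # decay once per epoch
print(lrs)  # roughly 1e-2, 1e-3, 1e-4, 1e-5, 1e-6
```

So by the fifth epoch the rate is already around 1e-6, the value the original linspace schedule only reached in its last epoch, and it keeps shrinking by a factor of 10 per epoch after that. A larger gamma or step_size might be worth trying before concluding that the scheduler makes no difference.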

Have you solved the problem? I have the same problem; I tried changing the network structure, learning rate, and training strategy, but it still doesn’t work.

My loss function is shown in the following picture:

Thanks in advance!

I could be wrong, but I do not think using the term ‘overfit’ is legit, both in your question and the post.