Hi! I am working on a model that takes an RGB image containing garbage and outputs a segmented image (I am hoping to segment it according to the 60 categories I defined). I used Adam as the optimizer and cross-entropy as the loss function. During training (I tried 10 epochs at most), the lowest loss value I got was 0.14. This is my training script:

    BATCH_SIZE = 4
    EPOCHS = 10

    def train(model):
        model.train()
        for epoch in range(EPOCHS):
            for i in tqdm(range(0, len(img_train), BATCH_SIZE)):
                batch_img_train = img_train[i:i+BATCH_SIZE].view(-1, 3, 448, 448)
                batch_mask_train = mask_train[i:i+BATCH_SIZE].view(-1, 1, 448, 448)
                model.zero_grad()
                outputs = model(batch_img_train)
                loss = loss_function(outputs, batch_mask_train.squeeze(1).long())
                loss.backward()
                optimizer.step() # Does the update
            print(f"Epoch: {epoch}. Loss: {loss}")
        return batch_img_train, batch_mask_train, outputs

    train(model)

And this is my validation script (I also tried to display the predictions here):

def test(model):

model.eval

correct = 0

total = 0

with torch.no_grad():

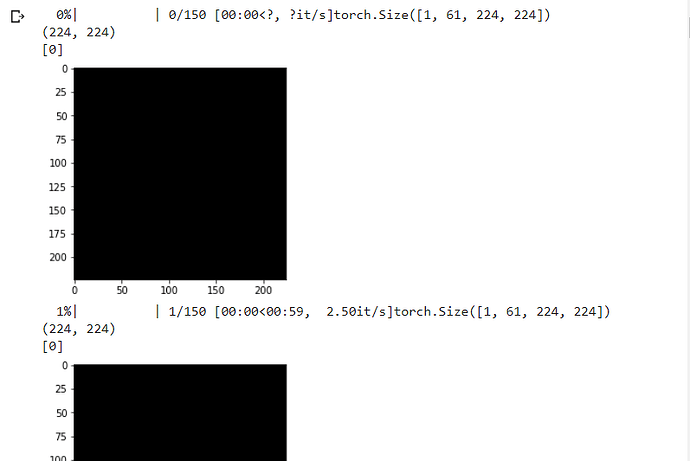

for i in tqdm(range(len(img_test))):

real_class = mask_test[20]

net_out = model(img_test[i].view(-1, 3, 224, 224))[0]

predicted_class = torch.argmax(net_out, 0)

prediction = predicted_class.eq(mask_test[20])

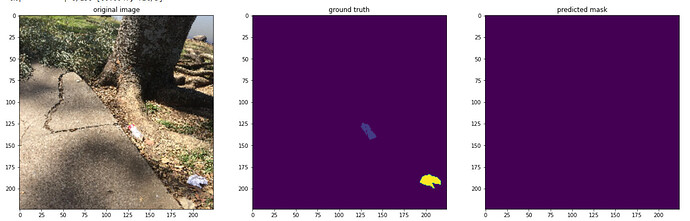

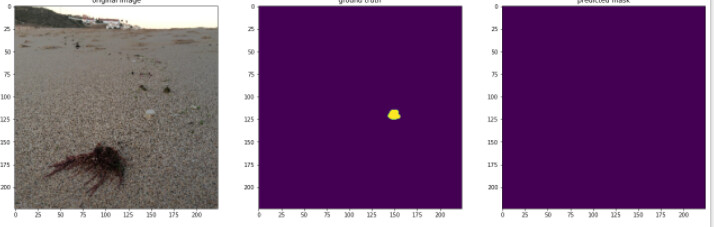

plt.figure(figsize=(22,8))

plt.subplot(1, 3, 1)

plt.imshow(img_test[20].view(-1, 224, 224, 3).detach().cpu().squeeze())

plt.title('original image')

plt.subplot(1, 3, 2)

plt.imshow(real_class.cpu().squeeze())

plt.title('ground truth')

plt.subplot(1, 3, 3)

plt.imshow(predicted_class.cpu().squeeze())

plt.title('predicted mask')

test(model)

But my predictions are way off: my model tends to classify every pixel as background despite the small loss value. I read about class imbalance and even tried implementing IoU in training, but I couldn't get it right. I am very confused right now; can someone please help me?

For binary segmentation with high class imbalance, I believe Dice loss or Intersection over Union (IoU) loss works better than cross-entropy.
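In case it helps, here is a minimal sketch of a soft Dice loss, extended from the binary case to multiple classes since the question has 60 categories. It assumes the model returns raw logits of shape (N, C, H, W) and the mask holds integer class indices of shape (N, H, W), which matches how the cross-entropy loss is called in the question. The names `dice_loss` and `eps` are my own placeholders, not part of any library, and this is only one of several common ways to write it.

```python
import torch
import torch.nn.functional as F


def dice_loss(logits, target, eps=1e-6):
    """Soft multi-class Dice loss (illustrative sketch, hypothetical helper).

    logits: (N, C, H, W) raw, unnormalised model outputs (assumed).
    target: (N, H, W) integer class indices, dtype long (assumed).
    """
    num_classes = logits.shape[1]
    probs = F.softmax(logits, dim=1)
    # One-hot encode the mask and move the class axis to dim 1: (N, C, H, W).
    one_hot = F.one_hot(target, num_classes).permute(0, 3, 1, 2).to(probs.dtype)
    dims = (0, 2, 3)  # sum over batch and spatial axes, keep the class axis
    intersection = (probs * one_hot).sum(dims)
    cardinality = probs.sum(dims) + one_hot.sum(dims)
    # eps keeps the ratio defined for classes absent from the batch.
    dice_per_class = (2.0 * intersection + eps) / (cardinality + eps)
    # Averaging over classes gives rare classes the same weight as background.
    return 1.0 - dice_per_class.mean()
```

In the training loop from the question, this could replace the cross-entropy term or be added to it, for example `loss = loss_function(outputs, target) + dice_loss(outputs, target)` with `target = batch_mask_train.squeeze(1).long()`. Whether that is enough to stop the all-background predictions depends on the data, so treat it as something to try rather than a guaranteed fix.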
