Hello, I have been working on a Fashion MNIST hackathon, using a CNN architecture and training in batches. I suspect that my network is overfitting the data. Can someone tell me whether it is overfitting, or whether it is okay to have 100% accuracy? What changes could I make to improve the model? Here is the code for the model architecture and the training loop, along with the training results.

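    import torch.nn as nn
    import torch.nn.functional as f

    class ConvolutionalNetwork(nn.Module):
        def __init__(self):
            super().__init__()
            # Conv2d(in_channels, out_channels, kernel_size, stride)
            # 3 input channels as posted (Fashion-MNIST itself is grayscale, i.e. 1 channel)
            self.conv1 = nn.Conv2d(3, 6, 3, 1)
            self.conv2 = nn.Conv2d(6, 16, 3, 1)
            # Flattened feature size must match the view() in forward()
            self.fc1 = nn.Linear(5*5*16, 120)
            self.fc2 = nn.Linear(120, 84)
            self.fc3 = nn.Linear(84, 10)

        def forward(self, X):
            X = f.relu(self.conv1(X))
            X = f.max_pool2d(X, 2, 2)
            X = f.relu(self.conv2(X))
            X = f.max_pool2d(X, 2, 2)
            # Flatten the 16 feature maps of size 5x5 for the fully connected layers
            X = X.view(-1, 5*5*16)
            X = f.relu(self.fc1(X))
            X = f.relu(self.fc2(X))
            X = self.fc3(X)
            return f.log_softmax(X, dim=1)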
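As a sanity check on the `5*5*16` used in `X.view`, here is a minimal sketch of the shape arithmetic, assuming 28x28 input images, no padding, and the kernel and stride values from the model above. `conv_out` is a hypothetical helper written only for this illustration; it is not part of PyTorch.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a conv or pooling layer (floor division)."""
    return (size + 2 * padding - kernel) // stride + 1

size = 28                     # assumed input height/width (Fashion-MNIST images)
size = conv_out(size, 3, 1)   # conv1: 3x3 kernel, stride 1
size = conv_out(size, 2, 2)   # max_pool2d with kernel 2, stride 2
size = conv_out(size, 3, 1)   # conv2: 3x3 kernel, stride 1
size = conv_out(size, 2, 2)   # max_pool2d with kernel 2, stride 2

# conv2 produces 16 channels, so the flattened vector has size * size * 16 entries
flat_features = size * size * 16
print(size, flat_features)
```

If the input resolution or any kernel size changes, the first argument of `fc1` would need to change to match the new `flat_features`.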

Code for Training Batches:

    import time
    import torch

    # model, criterion, optimizer, train_loader and test_loader are defined elsewhere
    start_time = time.time()

    epochs = 5
    train_losses = []
    test_losses = []
    train_correct = []
    test_correct = []

    for i in range(epochs):
        trn_corr = 0
        tst_corr = 0

        # Run the training batches
        for b, (X_train, y_train) in enumerate(train_loader):
            b += 1
            y_pred = model(X_train)
            loss = criterion(y_pred, y_train)

            # Tally the number of correct predictions
            predicted = torch.max(y_pred.data, 1)[1]
            batch_corr = (predicted == y_train).sum()
            trn_corr += batch_corr

            # Update the parameters
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

            # Print interim results (10*b assumes a batch size of 10)
            if b % 600 == 0:
                print(f'epoch: {i:2} batch: {b:4} [{10*b:6}/60000] loss: {loss.item():10.8f} '
                      f'accuracy: {trn_corr.item()*100/(10*b):7.3f}%')

        loss = loss.detach().numpy()
        train_losses.append(loss)
        train_correct.append(trn_corr)

        # Run the testing batches
        with torch.no_grad():
            for b, (X_test, y_test) in enumerate(test_loader):
                b += 1
                y_val = model(X_test)
                predicted = torch.max(y_val.data, 1)[1]
                tst_corr += (predicted == y_test).sum()

        loss = criterion(y_val, y_test)
        loss = loss.detach().numpy()
        test_losses.append(loss)
        test_correct.append(tst_corr)

    # Elapsed time is the end time minus the start time
    print(f"time_duration:{time.time() - start_time}")

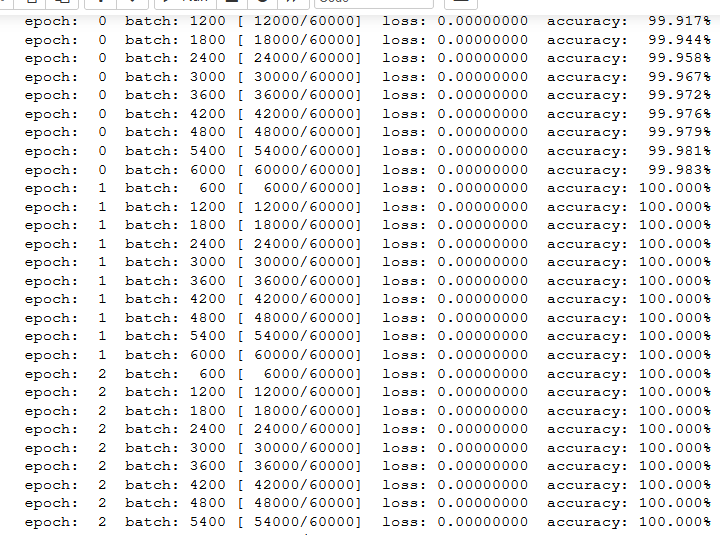

The results are in the form of images shown below:

.
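One way I could check for overfitting is to compare training and test accuracy per epoch, since a gap that keeps widening is a common sign of it. The sketch below assumes `train_correct` and `test_correct` hold per-epoch counts of correct predictions as in my loop, with 60000 training and 10000 test images. The helper names and the example counts are placeholders for illustration only, not my actual results.

```python
def accuracy_pct(correct_counts, n_samples):
    """Convert per-epoch correct-prediction counts into accuracy percentages."""
    # float() also accepts zero-dimensional tensors such as those returned by .sum()
    return [100.0 * float(c) / n_samples for c in correct_counts]

def generalization_gap(train_correct, test_correct, n_train=60000, n_test=10000):
    """Per-epoch difference between training and test accuracy, in percentage points."""
    train_acc = accuracy_pct(train_correct, n_train)
    test_acc = accuracy_pct(test_correct, n_test)
    return [tr - te for tr, te in zip(train_acc, test_acc)]

# Placeholder counts for illustration only (not results from the run above)
example_train = [51000, 54000, 57000]
example_test = [8500, 8800, 8900]

gaps = generalization_gap(example_train, example_test)
for epoch, gap in enumerate(gaps):
    print(f"epoch {epoch}: train-test accuracy gap = {gap:.2f} points")
```

A training accuracy near 100% is not a problem by itself; what matters in this check is whether test accuracy stays close to it or stalls while training accuracy keeps climbing.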