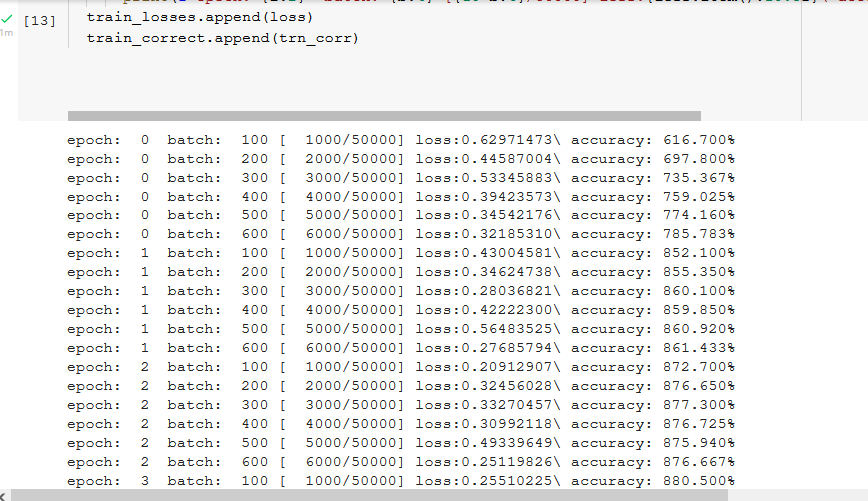

Hello, I hope everyone is fine. I am trying to train a simple neural network on the Fashion-MNIST dataset. However, although it is a simple network, it seems to overfit the dataset badly, reporting an accuracy of almost 630%. I don't know what the problem is; every time I train a simple neural net, I get an accuracy like this. The code is below. Please have a look and share your feedback.

I cannot see any sign of overfitting in your post. Overfitting means that the model performs clearly better on the training data than on the validation data, for example a higher training accuracy or a lower training loss.
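To make that comparison concrete, here is a minimal sketch in plain Python. The function name `generalization_gap` and the numbers in the usage lines are hypothetical, chosen only for illustration; they are not taken from the run in this thread.

```python
def generalization_gap(train_accuracy, val_accuracy):
    """Return how much better the model does on training data than on held-out data.

    Both inputs are fractions in [0, 1]. A large positive gap hints at
    overfitting; a single training accuracy on its own says nothing about it.
    """
    for name, value in (("train_accuracy", train_accuracy),
                        ("val_accuracy", val_accuracy)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must lie in [0, 1], got {value}")
    return train_accuracy - val_accuracy


# Hypothetical numbers, for illustration only.
gap = generalization_gap(train_accuracy=0.98, val_accuracy=0.85)
print(f"gap: {gap:.2f}")
```

A value such as 6.3 (630%) is rejected by the range check, which is a hint that the accuracy calculation itself needs fixing before any comparison makes sense.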

I cannot see your entire code in the screenshot, but an accuracy greater than 1 (that is, 100%) is not possible. A perfect classifier achieves an accuracy of 1, because every sample is classified correctly. Also take a look at this Wikipedia entry.
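As a minimal sketch of that definition, accuracy is the number of correct predictions divided by the number of samples, so it cannot exceed 1. The helper name `accuracy` and the sample values below are hypothetical and use plain Python lists rather than tensors.

```python
def accuracy(predicted, labels):
    """Fraction of predictions that match the labels, always between 0 and 1."""
    if len(predicted) != len(labels):
        raise ValueError("predicted and labels must have the same length")
    if not labels:
        raise ValueError("cannot compute accuracy on an empty batch")
    correct = sum(int(p == y) for p, y in zip(predicted, labels))
    return correct / len(labels)


# Hypothetical class indices for five samples.
predicted = [9, 2, 1, 1, 6]
labels = [9, 2, 1, 0, 6]
print(accuracy(predicted, labels))  # 4 of 5 correct -> 0.8
```

If a computed accuracy comes out above 1 (or above 100%), the denominator is probably smaller than the number of samples that were actually evaluated.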

Maybe you can look at other tutorials online to see how accuracy is calculated? Or you can try it yourself, based on the definition given in the Wikipedia article referenced above.

But with an accuracy of 697%, doesn't this mean my neural net overfits the dataset?

The line that prints the accuracy is as follows:

    print(f'epoch: {i:2} batch: {b:4} [{10*b:6}/50000] loss:{loss.item():10.8f}\
     accuracy: {trn_corr.item()*100/(10*b):7.3f}%')

Could you check whether there is an error in this code? Thanks.

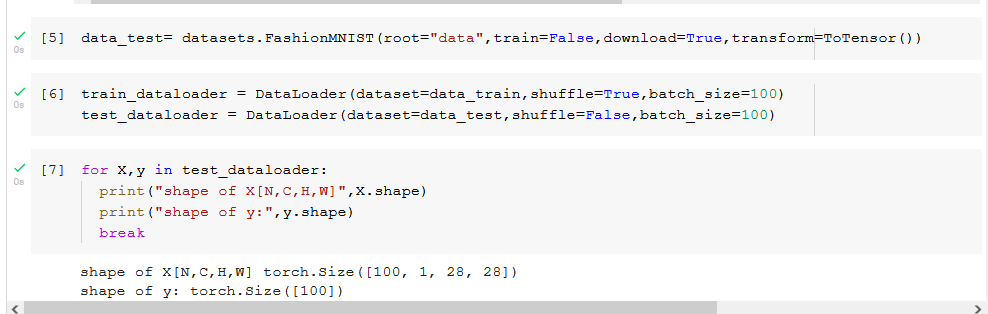

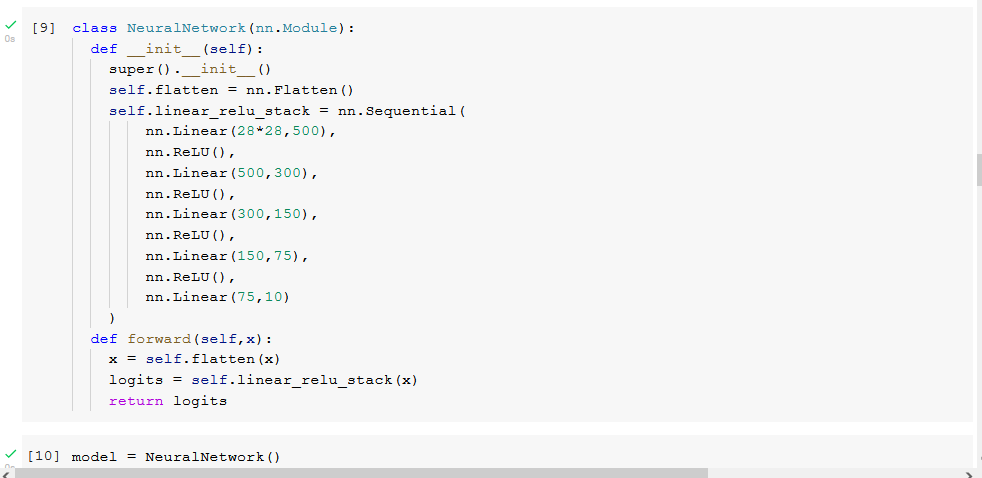

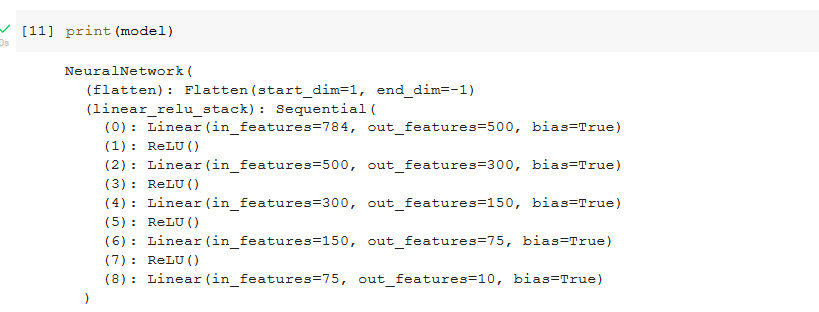

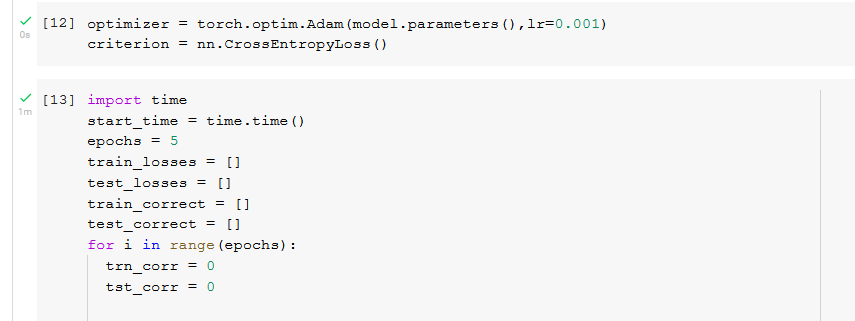

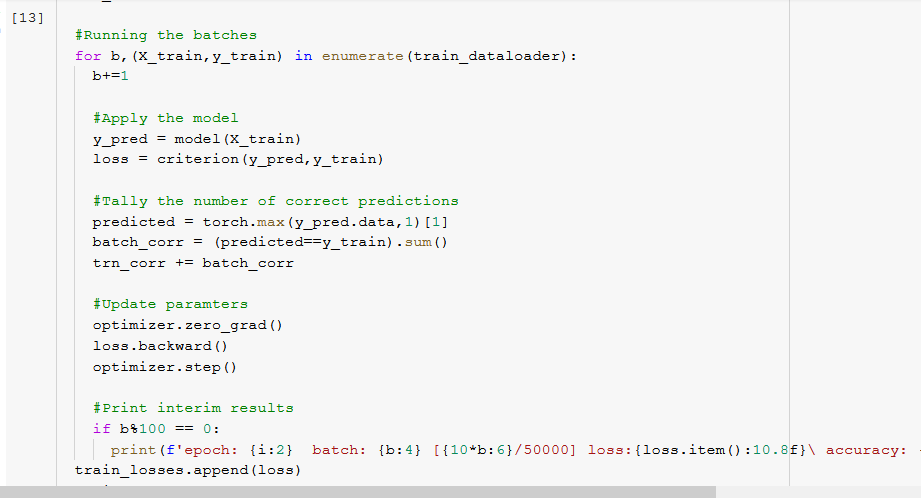

Here is the code for training the neural network, in case you cannot see it properly in the images above:

    import time

    start_time = time.time()

    epochs = 5
    train_losses = []
    test_losses = []
    train_correct = []
    test_correct = []

    for i in range(epochs):
        trn_corr = 0
        tst_corr = 0

        # Run the training batches
        for b, (X_train, y_train) in enumerate(train_dataloader):
            b += 1

            # Apply the model
            y_pred = model(X_train)
            loss = criterion(y_pred, y_train)

            # Tally the number of correct predictions
            predicted = torch.max(y_pred.data, 1)[1]
            batch_corr = (predicted == y_train).sum()
            trn_corr += batch_corr

            # Update parameters
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

            # Print interim results
            if b % 100 == 0:
                print(f'epoch: {i:2} batch: {b:4} [{10*b:6}/50000] loss:{loss.item():10.8f}\
     accuracy: {trn_corr.item()*100/(10*b):7.3f}%')

        train_losses.append(loss)
        train_correct.append(trn_corr)

You cannot conclude anything about overfitting from this value, which must be wrong because accuracy has an upper bound of 1. Please reread my last post and feel free to follow the references in it.

Could you please provide this print statement in another form? Instead of an f-string (I am unfamiliar with them), could you write it like this:

    print('Random float between 0 and 1: {:.2f}'.format(torch.rand(1).item()))

When you write `{:.2f}`, Python prints the value rounded to two decimal places.
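For reference, `str.format` and f-strings take the same format specification, so either form can be used for the print statement. The short sketch below uses a hypothetical value and the standard library only.

```python
value = 0.6789  # hypothetical value

# str.format and an f-string accept the same format specification.
with_format = 'Value: {:.2f}'.format(value)
with_fstring = f'Value: {value:.2f}'

print(with_format)   # Value: 0.68
print(with_fstring)  # Value: 0.68

# A width can precede the precision: 7 characters in total, 3 decimals.
padded = '{:7.3f}'.format(69.7)
print(repr(padded))  # ' 69.700'
```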

And just to make sure: what are the shapes of X_train, y_train and y_pred? So when you run

    print('Shapes of training samples, predictions and labels: {} {} {}'.format(X_train.shape, y_pred.shape, y_train.shape))

what is the output?

Thank you for your help. I found the error myself: it was a typo in the accuracy calculation, where I had to write 10 instead of 100.
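For anyone hitting the same symptom, here is a hedged sketch of one way to avoid this kind of mistake: count the samples actually seen instead of hard-coding a batch size in the denominator. The function name `running_accuracy_percent` and the batch size of 100 are assumptions for illustration; the thread does not state the real batch size, and plain lists stand in for tensors.

```python
def running_accuracy_percent(batches):
    """Yield the running accuracy in percent after each batch.

    `batches` is an iterable of (predicted, labels) pairs, standing in for
    what a training loop produces. The denominator is the number of samples
    actually seen, so no batch size is hard-coded.
    """
    correct = 0
    seen = 0
    for predicted, labels in batches:
        correct += sum(int(p == y) for p, y in zip(predicted, labels))
        seen += len(labels)
        yield 100.0 * correct / seen


# Hypothetical data: 3 batches of 100 samples each, 70 correct per batch.
batch = ([1] * 70 + [0] * 30, [1] * 100)
batches = [batch] * 3

print(list(running_accuracy_percent(batches)))  # [70.0, 70.0, 70.0]

# Dividing by a hard-coded 10 * b instead inflates the result tenfold.
b, correct = 3, 210
print(correct * 100 / (10 * b))  # 700.0
```

In a PyTorch loop the same idea would amount to accumulating `y_train.size(0)` per batch and dividing the running count of correct predictions by that total.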