jack_S

April 21, 2023, 4:49pm


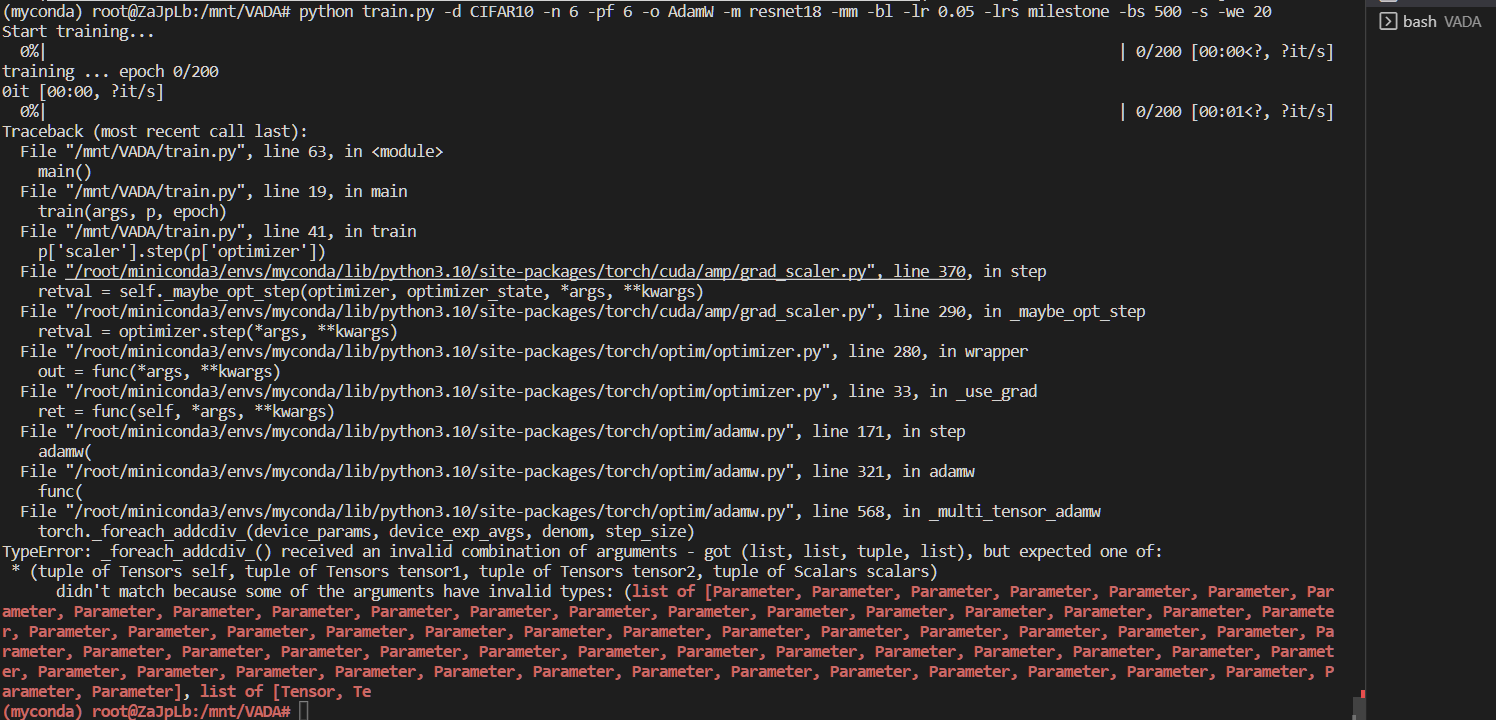

This happened when I trained resnet18 on CIFAR10. If I use SGD instead, the program runs normally. This is how I construct AdamW:

from torch import optim

parameters = {'params':model.parameters(), 'lr':learning_rate}
parameters['weight_decay'] = 1e-4
optimizer = optim.AdamW(**parameters)
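Unpacking the dict with `**` is equivalent to passing the same keywords directly, and at this stage `lr` is still a plain Python float. A small check, assuming a stand-in `torch.nn.Linear` model and a placeholder learning rate, since `model` and `learning_rate` are not defined in the post:

```python
import torch
from torch import optim

model = torch.nn.Linear(4, 2)   # hypothetical stand-in for resnet18
learning_rate = 1e-3            # placeholder value, not from the post

# Dict-based construction, as in the post.
parameters = {'params': model.parameters(), 'lr': learning_rate}
parameters['weight_decay'] = 1e-4
opt_from_dict = optim.AdamW(**parameters)

# Equivalent keyword-argument form.
opt_from_kwargs = optim.AdamW(model.parameters(), lr=learning_rate, weight_decay=1e-4)

group_a = opt_from_dict.param_groups[0]
group_b = opt_from_kwargs.param_groups[0]
print(group_a['lr'], group_a['weight_decay'])
```

Both optimizers end up with identical hyperparameters in their first param group.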

Could you post a minimal and executable code snippet to reproduce the issue, please?

jack_S

April 21, 2023, 6:58pm


Thank you! I found the crux: I had set AdamW's learning rate to a tensor. Here is the code:

import torch
from torch.optim import SGD, AdamW
from torch.cuda import amp
from torch.cuda.amp.grad_scaler import GradScaler
from torchvision import models
from torch.nn import functional as F

model = models.resnet18().cuda()
scaler = GradScaler()
L = torch.nn.CrossEntropyLoss(reduction = 'mean').cuda()

parameters = {'params':model.parameters(), 'lr':0.002}
parameters['weight_decay'] = 2e-5
optimizer = AdamW(**parameters) #SGD works fine

# Overwriting lr with a tensor is what breaks AdamW here
for param_group in optimizer.param_groups:
    param_group['lr'] = torch.tensor(0.)

optimizer.zero_grad(True)
images = torch.randn(128, 3, 32, 32).cuda(non_blocking = True)
labels = F.one_hot(torch.randint(0, 9, (128,)), 10).type(torch.float32).cuda(non_blocking = True)

# Mixed-precision forward pass
with amp.autocast():
    output = model(images)
    loss = L(output, labels)

scaler.scale(loss).backward()
scaler.step(optimizer)
scaler.update()

crcrpar

April 21, 2023, 8:09pm


Hi @jack_S,

it looks a bit surprising to me that SGD works with a Tensor learning rate, considering the type hint in pytorch/sgd.py at commit fc63d710fee323eee8b135fd193ee37e9f06ed55 (pytorch/pytorch on GitHub), where lr is annotated as float.

One way to make AdamW accept a Tensor lr would be to pass fused=False, foreach=False to the AdamW constructor.
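A minimal CPU sketch of that suggestion, assuming a recent PyTorch 2.x. The tiny `torch.nn.Linear` model, the dummy loss and the values are stand-ins, not from the thread. It builds AdamW with `foreach=False, fused=False` so the per-parameter (single-tensor) update path is used, then overwrites the group's `lr` with a 0-d tensor the same way the repro does. On CPU the default already avoids the foreach path, so the explicit flags here mainly mirror what would matter for CUDA parameters.

```python
import torch
from torch.optim import AdamW

torch.manual_seed(0)

# Hypothetical stand-in model; any nn.Module would do.
model = torch.nn.Linear(4, 2)

# Force the single-tensor implementation instead of the foreach/fused kernels.
optimizer = AdamW(model.parameters(), lr=1e-3, weight_decay=2e-5,
                  foreach=False, fused=False)

# Overwrite lr with a 0-d tensor after construction, as in the repro.
for param_group in optimizer.param_groups:
    param_group['lr'] = torch.tensor(0.1)

before = [p.detach().clone() for p in model.parameters()]

# One training step on random data with a placeholder loss.
loss = model(torch.randn(8, 4)).pow(2).mean()
optimizer.zero_grad()
loss.backward()
optimizer.step()

# The optimizer stores the tensor as-is; it does not convert it to a float.
lr_is_tensor = isinstance(optimizer.param_groups[0]['lr'], torch.Tensor)
params_changed = any(not torch.equal(b, p) for b, p in zip(before, model.parameters()))
print(lr_is_tensor, params_changed)
```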

Would you mind telling me why you want to have lr in Tensor?

jack_S

April 22, 2023, 4:56am


Well, assigning lr as a tensor isn't important to me; I can switch to a float in other experiments if necessary. I only did it to see what would happen if I set lr casually. For example, if I wanted a random learning-rate schedule for my training, I might write something like this:

total_epochs = 200
lr_schedule = torch.normal(mean = 0.05, std = 0.01, size = (total_epochs, )).abs()
optimizer = AdamW(model.parameters(), 0.001)

for i in range(0, total_epochs):
    for param_group in optimizer.param_groups:
        param_group['lr'] = lr_schedule[i]
    ............

So this is how a tensor ends up in lr.
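If a random schedule is the goal, a sketch of the float alternative mentioned above: sample the schedule as a tensor, but assign `float(lr_schedule[i])` (equivalently `.item()`) to each group, so the optimizer only ever sees a Python float and its default implementation choice is left alone. The stand-in model, the placeholder loss and the reduced epoch count are assumptions for illustration.

```python
import torch
from torch.optim import AdamW

torch.manual_seed(0)

total_epochs = 5  # reduced from 200 to keep the sketch quick
# Random per-epoch learning rates, sampled as in the snippet above.
lr_schedule = torch.normal(mean=0.05, std=0.01, size=(total_epochs,)).abs()

model = torch.nn.Linear(4, 2)  # hypothetical stand-in model
optimizer = AdamW(model.parameters(), 0.001)

used_lrs = []
for i in range(total_epochs):
    for param_group in optimizer.param_groups:
        # Convert the 0-d tensor to a Python float before assigning it.
        param_group['lr'] = float(lr_schedule[i])
    used_lrs.append(optimizer.param_groups[0]['lr'])

    # Placeholder training step on random data.
    loss = model(torch.randn(8, 4)).pow(2).mean()
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

print(used_lrs)
```

The schedule itself stays a tensor; only the value handed to each param group is converted.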