Dear @ptrblck, yes, you are right: another error occurred earlier in the build. Here is the output:

running egg_info

creating deform_conv.egg-info

writing deform_conv.egg-info/PKG-INFO

writing dependency_links to deform_conv.egg-info/dependency_links.txt

writing top-level names to deform_conv.egg-info/top_level.txt

writing manifest file 'deform_conv.egg-info/SOURCES.txt'

reading manifest file 'deform_conv.egg-info/SOURCES.txt'

writing manifest file 'deform_conv.egg-info/SOURCES.txt'

running build_ext

building 'deform_conv_cuda' extension

creating /media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/build

creating /media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/build/temp.linux-x86_64-3.7

creating /media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/build/temp.linux-x86_64-3.7/src

Emitting ninja build file /media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/build/temp.linux-x86_64-3.7/build.ninja...

Compiling objects...

Allowing ninja to set a default number of workers... (overridable by setting the environment variable MAX_JOBS=N)

[1/2] c++ -MMD -MF /media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/build/temp.linux-x86_64-3.7/src/deform_conv_cuda.o.d -pthread -B /home/ml/miniconda3/envs/nasrin/compiler_compat -Wl,--sysroot=/ -Wsign-compare -DNDEBUG -g -fwrapv -O3 -Wall -Wstrict-prototypes -fPIC -I/home/ml/miniconda3/envs/nasrin/lib/python3.7/site-packages/torch/include -I/home/ml/miniconda3/envs/nasrin/lib/python3.7/site-packages/torch/include/torch/csrc/api/include -I/home/ml/miniconda3/envs/nasrin/lib/python3.7/site-packages/torch/include/TH -I/home/ml/miniconda3/envs/nasrin/lib/python3.7/site-packages/torch/include/THC -I/usr/local/cuda/include -I/home/ml/miniconda3/envs/nasrin/include/python3.7m -c -c /media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/src/deform_conv_cuda.cpp -o /media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/build/temp.linux-x86_64-3.7/src/deform_conv_cuda.o -DTORCH_API_INCLUDE_EXTENSION_H -DTORCH_EXTENSION_NAME=deform_conv_cuda -D_GLIBCXX_USE_CXX11_ABI=0 -std=c++14

FAILED: /media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/build/temp.linux-x86_64-3.7/src/deform_conv_cuda.o

c++ -MMD -MF /media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/build/temp.linux-x86_64-3.7/src/deform_conv_cuda.o.d -pthread -B /home/ml/miniconda3/envs/nasrin/compiler_compat -Wl,--sysroot=/ -Wsign-compare -DNDEBUG -g -fwrapv -O3 -Wall -Wstrict-prototypes -fPIC -I/home/ml/miniconda3/envs/nasrin/lib/python3.7/site-packages/torch/include -I/home/ml/miniconda3/envs/nasrin/lib/python3.7/site-packages/torch/include/torch/csrc/api/include -I/home/ml/miniconda3/envs/nasrin/lib/python3.7/site-packages/torch/include/TH -I/home/ml/miniconda3/envs/nasrin/lib/python3.7/site-packages/torch/include/THC -I/usr/local/cuda/include -I/home/ml/miniconda3/envs/nasrin/include/python3.7m -c -c /media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/src/deform_conv_cuda.cpp -o /media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/build/temp.linux-x86_64-3.7/src/deform_conv_cuda.o -DTORCH_API_INCLUDE_EXTENSION_H -DTORCH_EXTENSION_NAME=deform_conv_cuda -D_GLIBCXX_USE_CXX11_ABI=0 -std=c++14

cc1plus: warning: command line option ‘-Wstrict-prototypes’ is valid for C/ObjC but not for C++

/media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/src/deform_conv_cuda.cpp: In function ‘void shape_check(at::Tensor, at::Tensor, at::Tensor*, at::Tensor, int, int, int, int, int, int, int, int, int, int)’:

/media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/src/deform_conv_cuda.cpp:68:31: error: ‘AT_CHECK’ was not declared in this scope

weight.ndimension());

^

/media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/src/deform_conv_cuda.cpp: In function ‘int deform_conv_forward_cuda(at::Tensor, at::Tensor, at::Tensor, at::Tensor, at::Tensor, at::Tensor, int, int, int, int, int, int, int, int, int, int, int)’:

/media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/src/deform_conv_cuda.cpp:192:73: error: ‘AT_CHECK’ was not declared in this scope

AT_CHECK((offset.size(0) == batchSize), "invalid batch size of offset");

^

/media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/src/deform_conv_cuda.cpp: In function ‘int deform_conv_backward_input_cuda(at::Tensor, at::Tensor, at::Tensor, at::Tensor, at::Tensor, at::Tensor, at::Tensor, int, int, int, int, int, int, int, int, int, int, int)’:

/media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/src/deform_conv_cuda.cpp:298:76: error: ‘AT_CHECK’ was not declared in this scope

AT_CHECK((offset.size(0) == batchSize), 3, "invalid batch size of offset");

^

/media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/src/deform_conv_cuda.cpp: In function ‘int deform_conv_backward_parameters_cuda(at::Tensor, at::Tensor, at::Tensor, at::Tensor, at::Tensor, at::Tensor, int, int, int, int, int, int, int, int, int, int, float, int)’:

/media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/src/deform_conv_cuda.cpp:413:73: error: ‘AT_CHECK’ was not declared in this scope

AT_CHECK((offset.size(0) == batchSize), "invalid batch size of offset");

^

/media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/src/deform_conv_cuda.cpp: In function ‘void modulated_deform_conv_cuda_forward(at::Tensor, at::Tensor, at::Tensor, at::Tensor, at::Tensor, at::Tensor, at::Tensor, at::Tensor, int, int, int, int, int, int, int, int, int, int, bool)’:

/media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/src/deform_conv_cuda.cpp:493:70: error: ‘AT_CHECK’ was not declared in this scope

AT_CHECK(input.is_contiguous(), "input tensor has to be contiguous");

^

/media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/src/deform_conv_cuda.cpp: In function ‘void modulated_deform_conv_cuda_backward(at::Tensor, at::Tensor, at::Tensor, at::Tensor, at::Tensor, at::Tensor, at::Tensor, at::Tensor, at::Tensor, at::Tensor, at::Tensor, at::Tensor, at::Tensor, int, int, int, int, int, int, int, int, int, int, bool)’:

/media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/src/deform_conv_cuda.cpp:574:70: error: ‘AT_CHECK’ was not declared in this scope

AT_CHECK(input.is_contiguous(), "input tensor has to be contiguous");

^

[2/2] /usr/local/cuda/bin/nvcc -I/home/ml/miniconda3/envs/nasrin/lib/python3.7/site-packages/torch/include -I/home/ml/miniconda3/envs/nasrin/lib/python3.7/site-packages/torch/include/torch/csrc/api/include -I/home/ml/miniconda3/envs/nasrin/lib/python3.7/site-packages/torch/include/TH -I/home/ml/miniconda3/envs/nasrin/lib/python3.7/site-packages/torch/include/THC -I/usr/local/cuda/include -I/home/ml/miniconda3/envs/nasrin/include/python3.7m -c -c /media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/src/deform_conv_cuda_kernel.cu -o /media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/build/temp.linux-x86_64-3.7/src/deform_conv_cuda_kernel.o -D__CUDA_NO_HALF_OPERATORS__ -D__CUDA_NO_HALF_CONVERSIONS__ -D__CUDA_NO_HALF2_OPERATORS__ --expt-relaxed-constexpr --compiler-options '-fPIC' -D__CUDA_NO_HALF_OPERATORS__ -D__CUDA_NO_HALF_CONVERSIONS__ -D__CUDA_NO_HALF2_OPERATORS__ -DTORCH_API_INCLUDE_EXTENSION_H -DTORCH_EXTENSION_NAME=deform_conv_cuda -D_GLIBCXX_USE_CXX11_ABI=0 -gencode=arch=compute_61,code=sm_61 -std=c++14

/media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/src/deform_conv_cuda_kernel.cu: In lambda function:

/media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/src/deform_conv_cuda_kernel.cu:258:247: warning: ‘T* at::Tensor::data() const [with T = double]’ is deprecated: Tensor.data<T>() is deprecated. Please use Tensor.data_ptr<T>() instead. [-Wdeprecated-declarations]

/home/ml/miniconda3/envs/nasrin/lib/python3.7/site-packages/torch/include/ATen/core/TensorBody.h:341:1: note: declared here

T * data() const {

^

/media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/src/deform_conv_cuda_kernel.cu:258:310: warning: ‘T* at::Tensor::data() const [with T = double]’ is deprecated: Tensor.data<T>() is deprecated. Please use Tensor.data_ptr<T>() instead. [-Wdeprecated-declarations]

/home/ml/miniconda3/envs/nasrin/lib/python3.7/site-packages/torch/include/ATen/core/TensorBody.h:341:1: note: declared here

T * data() const {

^

/media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/src/deform_conv_cuda_kernel.cu:258:361: warning: ‘T* at::Tensor::data() const [with T = double]’ is deprecated: Tensor.data<T>() is deprecated. Please use Tensor.data_ptr<T>() instead. [-Wdeprecated-declarations]

/home/ml/miniconda3/envs/nasrin/lib/python3.7/site-packages/torch/include/ATen/core/TensorBody.h:341:1: note: declared here

T * data() const {

.

.

.

/media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/src/deform_conv_cuda_kernel.cu:845:2264: warning: ‘T* at::Tensor::data() const [with T = c10::Half]’ is deprecated: Tensor.data<T>() is deprecated. Please use Tensor.data_ptr<T>() instead. [-Wdeprecated-declarations]

/home/ml/miniconda3/envs/nasrin/lib/python3.7/site-packages/torch/include/ATen/core/TensorBody.h:341:1: note: declared here

T * data() const {

^

/media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/src/deform_conv_cuda_kernel.cu:845:2320: warning: ‘T* at::Tensor::data() const [with T = c10::Half]’ is deprecated: Tensor.data<T>() is deprecated. Please use Tensor.data_ptr<T>() instead. [-Wdeprecated-declarations]

/home/ml/miniconda3/envs/nasrin/lib/python3.7/site-packages/torch/include/ATen/core/TensorBody.h:341:1: note: declared here

T * data() const {

^

ninja: build stopped: subcommand failed.

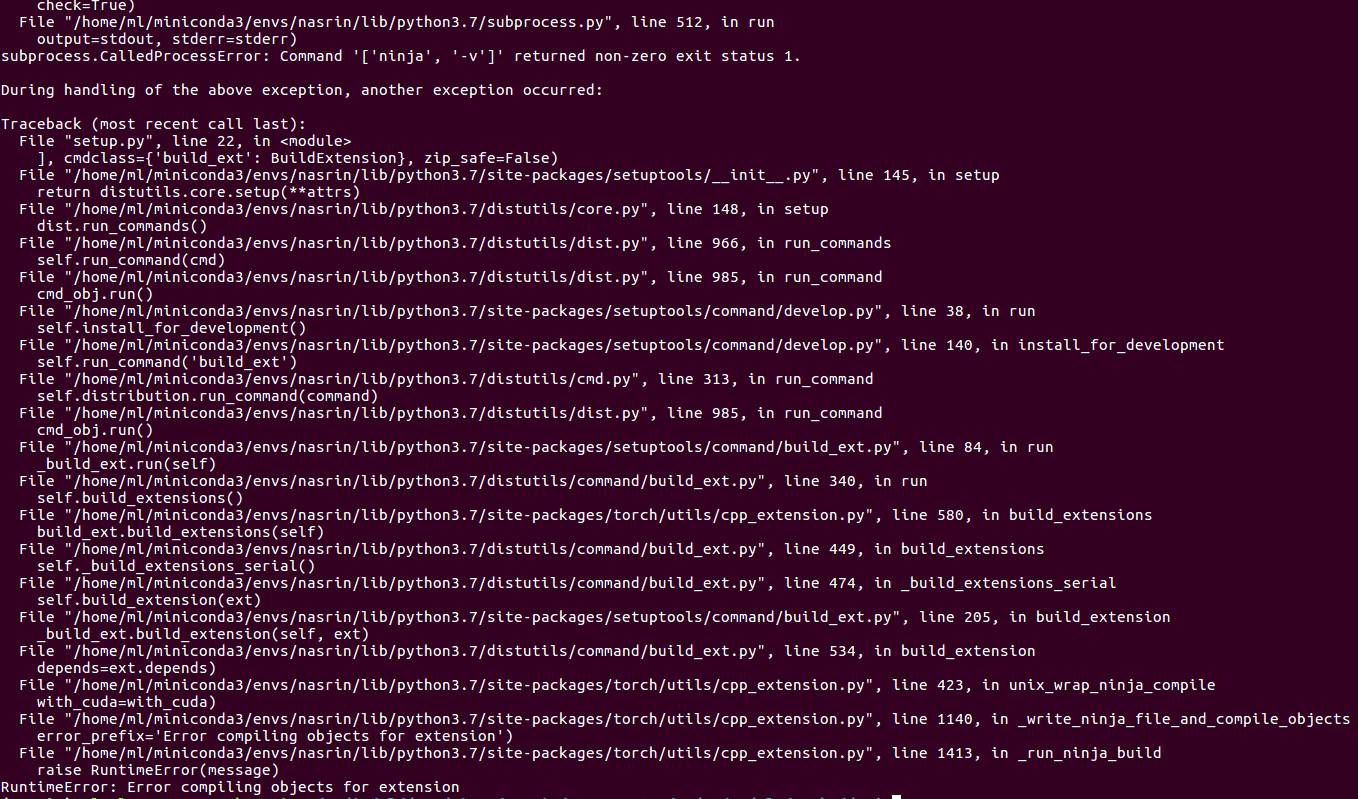

Traceback (most recent call last):

File "/home/ml/miniconda3/envs/nasrin/lib/python3.7/site-packages/torch/utils/cpp_extension.py", line 1400, in _run_ninja_build

check=True)

File "/home/ml/miniconda3/envs/nasrin/lib/python3.7/subprocess.py", line 512, in run

output=stdout, stderr=stderr)

subprocess.CalledProcessError: Command '['ninja', '-v']' returned non-zero exit status 1.

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "setup.py", line 22, in <module>

], cmdclass={'build_ext': BuildExtension}, zip_safe=False)

File "/home/ml/miniconda3/envs/nasrin/lib/python3.7/site-packages/setuptools/__init__.py", line 145, in setup

return distutils.core.setup(**attrs)

File "/home/ml/miniconda3/envs/nasrin/lib/python3.7/distutils/core.py", line 148, in setup

dist.run_commands()

File "/home/ml/miniconda3/envs/nasrin/lib/python3.7/distutils/dist.py", line 966, in run_commands

self.run_command(cmd)

File "/home/ml/miniconda3/envs/nasrin/lib/python3.7/distutils/dist.py", line 985, in run_command

cmd_obj.run()

File "/home/ml/miniconda3/envs/nasrin/lib/python3.7/site-packages/setuptools/command/develop.py", line 38, in run

self.install_for_development()

File "/home/ml/miniconda3/envs/nasrin/lib/python3.7/site-packages/setuptools/command/develop.py", line 140, in install_for_development

self.run_command('build_ext')

File "/home/ml/miniconda3/envs/nasrin/lib/python3.7/distutils/cmd.py", line 313, in run_command

self.distribution.run_command(command)

File "/home/ml/miniconda3/envs/nasrin/lib/python3.7/distutils/dist.py", line 985, in run_command

cmd_obj.run()

File "/home/ml/miniconda3/envs/nasrin/lib/python3.7/site-packages/setuptools/command/build_ext.py", line 84, in run

_build_ext.run(self)

File "/home/ml/miniconda3/envs/nasrin/lib/python3.7/distutils/command/build_ext.py", line 340, in run

self.build_extensions()

File "/home/ml/miniconda3/envs/nasrin/lib/python3.7/site-packages/torch/utils/cpp_extension.py", line 580, in build_extensions

build_ext.build_extensions(self)

File "/home/ml/miniconda3/envs/nasrin/lib/python3.7/distutils/command/build_ext.py", line 449, in build_extensions

self._build_extensions_serial()

File "/home/ml/miniconda3/envs/nasrin/lib/python3.7/distutils/command/build_ext.py", line 474, in _build_extensions_serial

self.build_extension(ext)

File "/home/ml/miniconda3/envs/nasrin/lib/python3.7/site-packages/setuptools/command/build_ext.py", line 205, in build_extension

_build_ext.build_extension(self, ext)

File "/home/ml/miniconda3/envs/nasrin/lib/python3.7/distutils/command/build_ext.py", line 534, in build_extension

depends=ext.depends)

File "/home/ml/miniconda3/envs/nasrin/lib/python3.7/site-packages/torch/utils/cpp_extension.py", line 423, in unix_wrap_ninja_compile

with_cuda=with_cuda)

File "/home/ml/miniconda3/envs/nasrin/lib/python3.7/site-packages/torch/utils/cpp_extension.py", line 1140, in _write_ninja_file_and_compile_objects

error_prefix='Error compiling objects for extension')

File "/home/ml/miniconda3/envs/nasrin/lib/python3.7/site-packages/torch/utils/cpp_extension.py", line 1413, in _run_ninja_build

raise RuntimeError(message)

RuntimeError: Error compiling objects for extension
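
The final `RuntimeError` only says that ninja failed, so the compiler errors that actually stopped the build are buried further up among the deprecation warnings. As a minimal sketch, a small filter like the one below could pull just the `error:` diagnostics out of a saved build log. The helper name `extract_compiler_errors` and the regular expression are my own illustration and are not part of PyTorch or EDVR. The sample text reuses three lines from the log above.

```python
import re

# Matches GCC/NVCC-style diagnostics of the form
# "<path>:<line>:<col>: error: <message>". Warnings and notes are ignored.
ERROR_RE = re.compile(
    r"^(?P<path>.+?):(?P<line>\d+):(?P<col>\d+): error: (?P<msg>.*)$"
)


def extract_compiler_errors(log_text):
    """Return a list of (path, line, col, message) for each compiler error."""
    errors = []
    for raw in log_text.splitlines():
        match = ERROR_RE.match(raw.strip())
        if match:
            errors.append(
                (
                    match.group("path"),
                    int(match.group("line")),
                    int(match.group("col")),
                    match.group("msg"),
                )
            )
    return errors


# Three lines copied from the build output above: one warning, two errors.
sample = (
    "cc1plus: warning: command line option ‘-Wstrict-prototypes’ is valid for C/ObjC but not for C++\n"
    "/media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/src/deform_conv_cuda.cpp:68:31: error: ‘AT_CHECK’ was not declared in this scope\n"
    "/media/ml/datadrive2/Nasrin/EDVR-master/codes/models/archs/dcn/src/deform_conv_cuda.cpp:192:73: error: ‘AT_CHECK’ was not declared in this scope\n"
)

for path, line, col, msg in extract_compiler_errors(sample):
    # Print only the file name, position, and message of each error.
    print(f"{path.rsplit('/', 1)[-1]}:{line}:{col}: {msg}")
```

Run against the full log, this would list only the `AT_CHECK` errors from `deform_conv_cuda.cpp` and skip the `Tensor.data<T>()` deprecation warnings from the `.cu` file.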