I’m working on binary classification of 446x2048 images, but my test accuracy stays at 6% for about 1100 epochs and then jumps to 93%. I’ve tried a few different fixes, but none have worked.

6% of my test images are defects and 93% are good, so I think that around epoch 1100 the model simply switches from predicting "defect" for every image to predicting "no defect" for every image. My data set is very limited, and I cannot obtain more defect images.
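One way to check this suspicion is to count how often each class is predicted and compare the accuracy with the majority-class baseline. The sketch below is only an illustration: `summarize_predictions` is a hypothetical helper, the label lists are made up to mirror a roughly 6% / 94% split, and class index 0 is assumed to mean Defect (the order `ImageFolder` assigns alphabetically).

```python
from collections import Counter


def summarize_predictions(predicted, actual):
    """Return prediction counts, label counts, accuracy, and majority-class baseline."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("predicted and actual must be non-empty and the same length")
    pred_counts = Counter(predicted)
    label_counts = Counter(actual)
    accuracy = sum(p == a for p, a in zip(predicted, actual)) / len(actual)
    # Accuracy of a model that always outputs the most common label.
    baseline = max(label_counts.values()) / len(actual)
    return pred_counts, label_counts, accuracy, baseline


# Made-up labels for illustration only: 6 defects (0) and 94 good plates (1).
actual = [0] * 6 + [1] * 94
all_defect = [0] * 100  # a model that calls everything a defect
all_good = [1] * 100    # a model that calls everything good

print(summarize_predictions(all_defect, actual)[2])  # 0.06
print(summarize_predictions(all_good, actual)[2])    # 0.94
```

If the real prediction counts show only one class at every epoch, the accuracy is just tracking the class ratio rather than anything the model has learned.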

Here’s the code:

import os
import torch
import torch.nn as nn
import torch.nn.functional as F
import torchvision
from torchvision import datasets, transforms
from torch.utils.data import DataLoader
from torch.optim import Adam
import matplotlib.pyplot as plt
import numpy as np

os.environ["PYTORCH_CUDA_ALLOC_CONF"] = "max_split_size_mb:64"
torch.cuda.empty_cache()

# Load and normalize the data.
# Define transformations for the training and test sets
transformations = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))
])

batch_size = 10
number_of_labels = 2
data_dir = "D:/Cropped Copper Plates/Edge Strips"

# Load training data
train_dir = os.path.join(data_dir, "Training")
train_set = datasets.ImageFolder(train_dir, transform=transformations)
train_loader = torch.utils.data.DataLoader(train_set, batch_size=batch_size, shuffle=True, num_workers=0)

# Load testing data
test_dir = os.path.join(data_dir, "Testing")
test_set = datasets.ImageFolder(test_dir, transform=transformations)
test_loader = torch.utils.data.DataLoader(test_set, batch_size=batch_size, shuffle=False, num_workers=0)

# len(dataset) is the exact image count; len(loader) * batch_size overcounts when the last batch is partial
print("The number of images in a training set is: ", len(train_set))
print("The number of images in a test set is: ", len(test_set))
print("The number of batches per epoch is: ", len(train_loader))

# ImageFolder assigns class indices alphabetically: Defect = 0, No_Defect = 1
classes = ('Defect', 'No_Defect')

class Network(nn.Module):
    def __init__(self):
        super(Network, self).__init__()
        self.conv1 = nn.Conv2d(in_channels=3, out_channels=16, kernel_size=3)
        self.bn1 = nn.BatchNorm2d(16)
        self.pool = nn.MaxPool2d(2, 2)
        self.conv2 = nn.Conv2d(16, 32, kernel_size=3)
        self.drop = nn.Dropout2d()
        self.bn2 = nn.BatchNorm2d(32)
        # 32 channels * 220 * 1021 = 7187840 features for a 446x2048 input
        self.fc1 = nn.Linear(7187840, 1)
        # fc2 is currently unused (see the commented-out line in forward)
        self.fc2 = nn.Linear(512, 2)

    def forward(self, x):
        x = F.relu(self.bn1(self.conv1(x)))
        x = self.pool(x)
        x = F.relu(self.bn2(self.drop(self.conv2(x))))
        x = self.drop(x)
        x = x.view(x.size(0), -1)
        #print(x.shape)
        x = F.relu(self.fc1(x))
        x = F.dropout(x, training = self.training)
        #x = self.fc2(x)
        return x

# Instantiate a neural network model

model = Network()

# Define the loss function (binary cross-entropy with logits) and the optimizer (SGD)

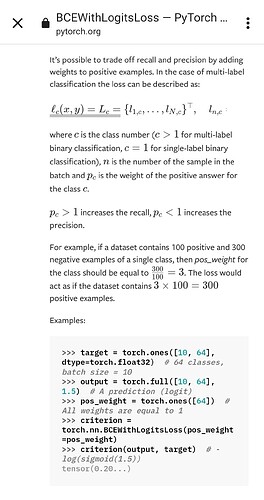

loss_fn = nn.BCEWithLogitsLoss()

optimizer = torch.optim.SGD(model.parameters(), lr=0.1, weight_decay=0.0001)

from torch.autograd import Variable

# Function to save the model
def saveModel():
    path = "./myFirstModel.pth"
    torch.save(model.state_dict(), path)

# Function to test the model with the test dataset and print the accuracy for the test images
def testAccuracy():
    model.eval()
    accuracy = 0.0
    total = 0.0

    with torch.no_grad():
        for data in test_loader:
            images, labels = data
            device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
            images, labels = images.to(device), labels.to(device)
            images = images.float()
            labels = labels.float()

            # run the model on the test set to predict labels
            outputs = model(images)

            # the label with the highest energy will be our prediction
            _, predicted = torch.max(outputs.data, 1)
            total += labels.size(0)
            accuracy += (predicted == labels).sum().item()

    # compute the accuracy over all test images
    accuracy = (100 * accuracy / total)
    return accuracy

# Training function. We simply have to loop over our data iterator and feed the inputs to the network and optimize.
def train(num_epochs):
    best_accuracy = 0.0

    # Define your execution device
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    print("The model will be running on", device, "device")

    # Convert model parameters and buffers to CPU or Cuda
    model.to(device)

    for epoch in range(num_epochs):  # loop over the dataset multiple times
        running_loss = 0.0
        running_acc = 0.0

        for i, (images, labels) in enumerate(train_loader, 0):
            # get the inputs
            images = Variable(images.to(device))
            labels = Variable(labels.to(device))

            # zero the parameter gradients
            optimizer.zero_grad()

            # predict classes using images from the training set
            outputs = model(images)

            #labels = labels.type(torch.FloatTensor)
            #labels = labels.reshape((labels.shape[0], 2))
            # BCEWithLogitsLoss expects float targets with the same shape as the outputs: (batch, 1)
            labels = labels.unsqueeze(1)
            labels = labels.float()
            outputs = outputs.float()

            # compute the loss based on model output and real labels
            loss = loss_fn(outputs, labels)

            # backpropagate the loss
            loss.backward()

            # adjust parameters based on the calculated gradients
            optimizer.step()

            # Let's print statistics for every 1,000 images (100 batches of 10)
            running_loss += loss.item()  # extract the loss value
            if i % 100 == 99:
                # print every 100 batches (twice per epoch)
                print('[%d, %5d] loss: %.3f' %
                      (epoch + 1, i + 1, running_loss / 100))
                # zero the loss
                running_loss = 0.0

        # Compute and print the accuracy for this epoch over the whole test set
        accuracy = testAccuracy()
        print('For epoch', epoch+1,'the test accuracy over the whole test set is %d %%' % (accuracy))

        # we want to save the model if the accuracy is the best
        if accuracy > best_accuracy:
            saveModel()
            best_accuracy = accuracy

# Function to show the images
def imageshow(img):
    img = img / 2 + 0.5  # unnormalize
    npimg = img.numpy()
    plt.imshow(np.transpose(npimg, (1, 2, 0)))
    plt.show()

# Function to test the model with a batch of images and show the predicted labels
def testBatch():
    # get batch of images from the test DataLoader
    images, labels = next(iter(test_loader))

    # show all images as one image grid
    #imageshow(torchvision.utils.make_grid(images))

    # Show the real labels on the screen
    print('Real labels: ', ' '.join('%5s' % classes[labels[j]]
                                    for j in range(batch_size)))

    # Let's see whether the model identifies the labels of those examples
    outputs = model(images)

    # Take the index of the highest output as the predicted label
    _, predicted = torch.max(outputs, 1)

    # Let's show the predicted labels on the screen to compare with the real ones
    print('Predicted: ', ' '.join('%5s' % classes[predicted[j]]
                                  for j in range(batch_size)))

if __name__ == "__main__":
    # Let's build our model
    train(2000)
    print('Finished Training')

    # Test which classes performed well
    testAccuracy()

    # Let's load the model we just created and test the accuracy per label
    model = Network()
    path = "myFirstModel.pth"
    model.load_state_dict(torch.load(path))

    # Test with batch of images
    testBatch()
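
A side note on how the single output is turned into a label. `fc1` produces one value per image, so `torch.max(outputs, 1)` in `testAccuracy` and `testBatch` can only return index 0, which is Defect. The sketch below uses made-up logits to compare that with thresholding the sigmoid. Treating the output as a logit for class index 1 (No_Defect) and using 0.5 as the cutoff are assumptions, not something the code above does. It also shows that a ReLU on the final output, as in `forward`, keeps the logit at or above zero, so a 0.5 threshold would then always give class 1.

```python
import torch

# Stand-in for the network output: one made-up logit per image, shape (batch, 1).
outputs = torch.tensor([[-2.0], [0.3], [4.1], [-0.7]])

# Argmax over a dimension of size 1 has only one possible answer: index 0.
_, argmax_pred = torch.max(outputs, 1)

# Thresholding the sigmoid of the logit depends on the actual output value.
threshold_pred = (torch.sigmoid(outputs) >= 0.5).long().squeeze(1)

# With a ReLU applied first, logits are >= 0, so sigmoid(logit) >= 0.5 for every image.
relu_pred = (torch.sigmoid(torch.relu(outputs)) >= 0.5).long().squeeze(1)

print(argmax_pred.tolist())     # [0, 0, 0, 0]
print(threshold_pred.tolist())  # [0, 1, 1, 0]
print(relu_pred.tolist())       # [1, 1, 1, 1]
```

Whether this explains the 6% and 93% figures would still need to be confirmed against the real outputs, for example by printing a few raw `outputs` values during evaluation.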