I am trying to build a neural network based on this article, http://nlp.seas.harvard.edu/2018/04/03/attention.html, or on the standard `nn.Transformer` module.

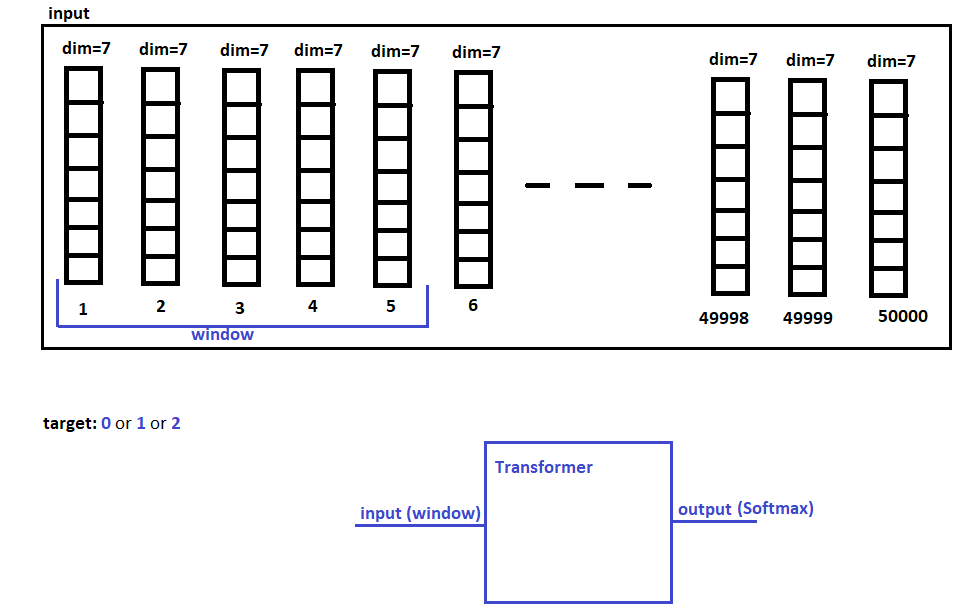

I have numbers instead of words, and each sequence has length 5. The output layer has three neurons, and I need to predict one of three values (0, 1, 2). That is, at each step I take a window of 5 numbers and predict 0, 1, or 2; in effect, this is a 3-class classification problem. Can I use the standard `nn.Transformer` module and feed it my data instead of words? Or what would you advise?
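A minimal sketch of one common option (not the only one): because the task is classifying a whole window, an encoder-only model can be enough, and the decoder part of `nn.Transformer` can be skipped. The class name `WindowClassifier`, the sizes `d_model=32`, `nhead=4`, `num_layers=2`, the learned positional embedding and the mean pooling over the 5 steps are all illustrative assumptions; `n_features` is the number of values per step (1 for a plain number).

```python
import torch
import torch.nn as nn


class WindowClassifier(nn.Module):
    """Hypothetical encoder-only classifier: a window of 5 steps -> 3 class scores."""

    def __init__(self, n_features=1, d_model=32, nhead=4, num_layers=2,
                 seq_len=5, n_classes=3):
        super().__init__()
        # Project each step's raw numbers up to the model width d_model (the "E" size).
        self.input_proj = nn.Linear(n_features, d_model)
        # Learned positional embedding (an assumption), one vector per window position.
        self.pos_emb = nn.Parameter(torch.zeros(seq_len, 1, d_model))
        layer = nn.TransformerEncoderLayer(d_model=d_model, nhead=nhead)
        self.encoder = nn.TransformerEncoder(layer, num_layers=num_layers)
        # Map the pooled window representation to one logit per class.
        self.head = nn.Linear(d_model, n_classes)

    def forward(self, x):
        # x: (S, N, n_features), the default (sequence, batch, feature) layout.
        h = self.input_proj(x) + self.pos_emb  # (S, N, d_model)
        h = self.encoder(h)                    # (S, N, d_model)
        h = h.mean(dim=0)                      # average over the 5 steps -> (N, d_model)
        return self.head(h)                    # (N, n_classes) logits


model = WindowClassifier(n_features=1)
x = torch.randn(5, 8, 1)        # 8 windows of 5 numbers each
y = torch.randint(0, 3, (8,))   # class labels 0, 1 or 2
logits = model(x)
loss = nn.CrossEntropyLoss()(logits, y)
loss.backward()
print(logits.shape)  # torch.Size([8, 3])
```

The model returns one raw logit per class, which `nn.CrossEntropyLoss` takes directly together with integer labels 0, 1, 2, so no softmax layer is needed before the loss.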

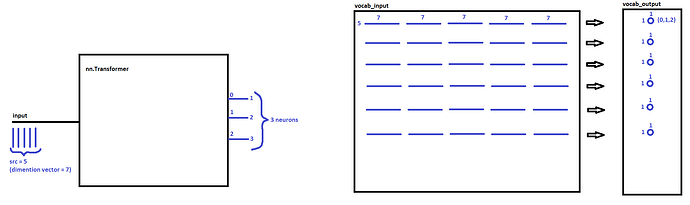

Consider my example based on the `nn.Transformer` module.

Instead of a word, each element of my sequence is a vector of 7 numbers. I want to feed these vectors directly to the `nn.Transformer` module in place of word embeddings.

The length of my sequence is always 5.

How can I write a simple example to check that the code works? I do not understand what `E` is.

```python
import torch
import torch.nn as nn


class Trans(nn.Module):
    def __init__(self, src_vocab, tgt_vocab):
        super(Trans, self).__init__()
        self.tr = nn.Transformer(src_vocab, tgt_vocab)

    def forward(self, src, tgt):
        out = self.tr(src, tgt)
        return out


net = Trans(5, 1)  # src=5 (window), tgt=1 ('0' or '1' or '2')
sr = torch.randn(5, 1, 7)  # src: (S, N, E)  E - ??
tg = torch.tensor([0], dtype=torch.long)  # tgt: (T, N, E)
outputs = net(sr, tg)  # output: (T, N, E)
```
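For reference, in the PyTorch documentation `E` in the shapes `(S, N, E)` and `(T, N, E)` is the feature (embedding) size of each sequence element, i.e. the `d_model` argument of `nn.Transformer`. The first two positional arguments of `nn.Transformer` are `d_model` and `nhead`, not vocabulary sizes, so `Trans(5, 1)` builds a model that expects 5 features per step while `sr` has 7, and `tgt` also has to be a float tensor of shape `(T, N, E)` rather than a tensor of class ids. A minimal shape check under those assumptions, with `E = 7` and small, purely illustrative layer sizes:

```python
import torch
import torch.nn as nn

E = 7  # feature size of each sequence element; nn.Transformer calls this d_model

# nhead must divide d_model, so with E = 7 only nhead = 1 or nhead = 7 are valid.
tr = nn.Transformer(d_model=E, nhead=1, num_encoder_layers=2,
                    num_decoder_layers=2, dim_feedforward=32)

src = torch.randn(5, 1, E)  # (S=5 window steps, N=1 batch, E=7 features)
tgt = torch.randn(1, 1, E)  # (T=1 target step, N=1, E=7): float vectors, not class ids
out = tr(src, tgt)
print(out.shape)            # torch.Size([1, 1, 7]): still E features, not 3 class scores

# Hypothetical extra layer: map the decoder output to 3 class logits.
to_classes = nn.Linear(E, 3)
logits = to_classes(out.squeeze(0))  # (N=1, 3)
print(logits.shape)         # torch.Size([1, 3])
```

Because the output keeps `E` features per target step, a final linear layer like `to_classes` (an addition, not part of `nn.Transformer`) is one way to get the 3 class scores; what to feed as `tgt` for a pure classification task is a design choice the decoder forces on you.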