I am working through the object detection fine-tuning tutorial from the PyTorch tutorials, and the loss becomes NaN during training.

This is my code:

anno={'image':image,'bboxes':[box],'id':[1]}

aug=self.transform(**anno)

image=torch.from_numpy(aug['image']).permute(2,0,1).float()

box=torch.as_tensor(np.array(aug['bboxes']),dtype=torch.float32)

labels=torch.ones(1,dtype=torch.int64)

image_id=torch.tensor([index])

area=(box[:,3]-box[:,1])*(box[:,2]-box[:,0])

iscrowd=torch.zeros(1,dtype=torch.uint8)

target={}

target['boxes']=box

target['labels']=labels

target['image_id']=image_id

target['area']=area

target['iscrowd']=iscrowd

print(box)

return image,target

from torchvision.models.detection.faster_rcnn import FastRCNNPredictor

model=torchvision.models.detection.fasterrcnn_resnet50_fpn(pretrained=True)

num_class=2

in_features=model.roi_heads.box_predictor.cls_score.in_features

model.roi_heads.box_predictor=FastRCNNPredictor(in_features,num_class)

print(model)

num_epoch=20

params=[p for p in model.parameters() if p.requires_grad]

optimizer=torch.optim.SGD(params,lr=0.005,momentum=0.9,weight_decay=0.0005)

lr_scheduler=torch.optim.lr_scheduler.StepLR(optimizer,step_size=3,gamma=0.1)

device = torch.device('cuda') if torch.cuda.is_available() else torch.device('cpu')

model.to(device)

for epoch in range(num_epoch):

    train_one_epoch(model,optimizer,train_dl,device,epoch,print_freq=10)

    lr_scheduler.step()

    evaluate(model,valid_dl,device)

model_path=path/'box.pt'

torch.save(model.state_dict(),model_path)

Basically, every step follows the tutorial.

While looking for a solution, I read that the cause might be a box with xmin > xmax.

So I printed the boxes, but none of them had that problem.
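Checking printed boxes by eye can miss cases such as zero-width boxes, NaN coordinates, or boxes that fall outside the image after augmentation. A minimal sketch of an automated check follows, assuming the boxes are in `[xmin, ymin, xmax, ymax]` pixel format. The helper name `find_invalid_boxes` and its parameters are hypothetical and not part of torchvision or the tutorial, and a degenerate box is only one possible cause of a NaN loss.

```python
import math


def find_invalid_boxes(boxes, image_width=None, image_height=None):
    """Return (index, reason) pairs for boxes that are not valid
    [xmin, ymin, xmax, ymax] rows. Hypothetical helper for debugging."""
    problems = []
    for i, row in enumerate(boxes):
        values = [float(v) for v in row]
        if len(values) != 4:
            problems.append((i, "expected 4 coordinates"))
            continue
        if not all(math.isfinite(v) for v in values):
            problems.append((i, "non-finite coordinate"))
            continue
        xmin, ymin, xmax, ymax = values
        # A box needs strictly positive width and height.
        if xmax <= xmin or ymax <= ymin:
            problems.append((i, "zero or negative width/height"))
        if min(values) < 0:
            problems.append((i, "negative coordinate"))
        # Bounds are only checked when the image size is supplied.
        too_wide = image_width is not None and xmax > image_width
        too_tall = image_height is not None and ymax > image_height
        if too_wide or too_tall:
            problems.append((i, "outside image bounds"))
    return problems
```

Inside `__getitem__`, something like `find_invalid_boxes(box.tolist(), image.shape[2], image.shape[1])` could be called before returning the target, raising or logging if the result is non-empty.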

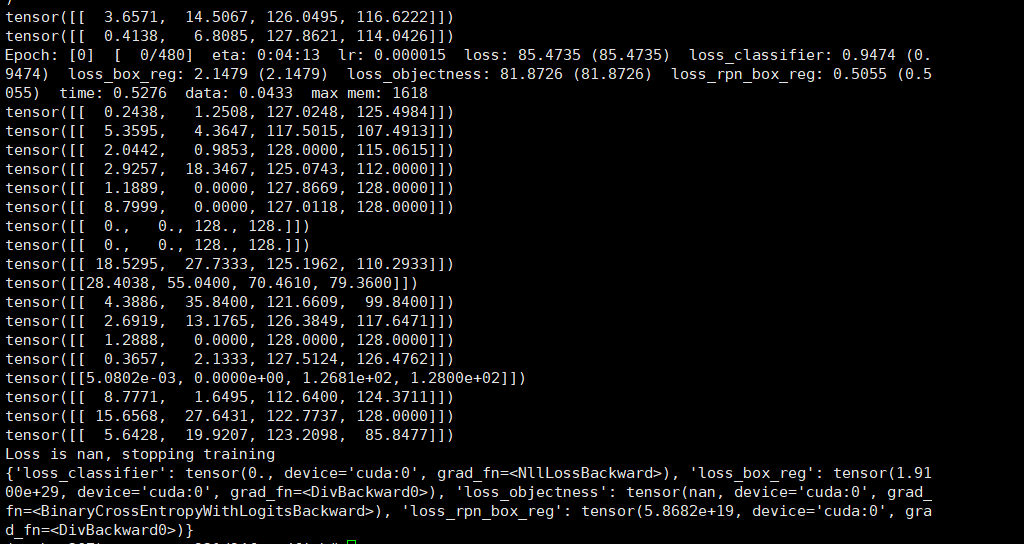

Here’s what’s wrong:

What should I do?