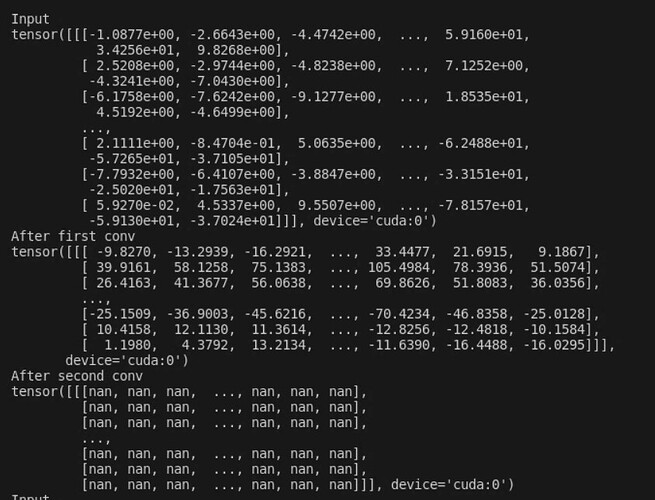

I have been working on a voice-cloning model, but I can't get it to train: partway through training, the values suddenly become NaN. Please help! Strangely, when I print the L1 loss (the loss that turns NaN because of its input), training runs for more epochs, but the progress spectrogram still doesn't look good.
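While tracking down where the NaN comes from, a common safeguard is to check the loss before updating the weights and to clip gradients, so that a single bad batch cannot corrupt the model. The following is a minimal sketch, assuming a standard PyTorch training loop with an L1 loss. `train_step`, the toy `nn.Linear` model, and `max_norm=1.0` are hypothetical placeholders and are not part of the original code.

```python
import torch
from torch import nn


def train_step(model, optimizer, batch, target, max_norm=1.0):
    """Run one optimization step; return False if the loss was not finite.

    Hypothetical helper: it skips the update instead of letting NaN/Inf
    reach the weights and the optimizer state.
    """
    optimizer.zero_grad()
    loss = nn.functional.l1_loss(model(batch), target)
    if not torch.isfinite(loss):
        # Skip this batch entirely; no backward pass, no optimizer step.
        return False
    loss.backward()
    # Bound the total gradient norm; exploding gradients often precede NaN.
    torch.nn.utils.clip_grad_norm_(model.parameters(), max_norm)
    optimizer.step()
    return True


model = nn.Linear(4, 4)  # toy stand-in for the real network
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)
x = torch.randn(8, 4)
ok = train_step(model, optimizer, x, torch.randn(8, 4))
bad = train_step(model, optimizer, x, torch.full((8, 4), float("nan")))
print(ok, bad)
```

If the skip triggers often, wrapping the run in `torch.autograd.set_detect_anomaly(True)` can point to the backward operation that first produced NaN. Expect a significant slowdown while it is enabled.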

These are the layers that produce the NaN values:

    import torch
    from torch import nn


    class DecConvBlock(nn.Module):
        def __init__(self,
                     input_size,
                     in_hidden_size,
                     dec_kernel_size,
                     gen_kernel_size,
                     **kwargs):
            super(DecConvBlock, self).__init__(**kwargs)
            self.dec_conv = ConvModule(
                input_size,
                in_hidden_size,
                dec_kernel_size)
            self.gen_conv = ConvModule(
                input_size,
                in_hidden_size,
                gen_kernel_size)

        def forward(self, x):
            print("Input")
            print(x)
            y = self.dec_conv(x)
            print("After first conv")
            print(y)
            y = y + self.gen_conv(y)
            print("After second conv")
            print(y)
            return x + y

    class ConvModule(nn.Module):
        def __init__(self, input_size, filter_size, kernel_size, **kwargs):
            super(ConvModule, self).__init__(**kwargs)
            self.seq = nn.Sequential(
                ConvNorm(input_size, filter_size, kernel_size),
                nn.BatchNorm1d(filter_size),
                nn.LeakyReLU(),
                ConvNorm(filter_size, input_size, kernel_size)
            )

        def forward(self, x):
            return self.seq(x)

    class ConvNorm(nn.Module):
        def __init__(self, in_channels, out_channels, kernel_size=1, stride=1,
                     padding='same', dilation=1, groups=1, bias=True,
                     initializer_range=0.02, padding_mode='zeros'):
            super(ConvNorm, self).__init__()
            self.conv = nn.Conv1d(in_channels, out_channels,
                                  kernel_size=kernel_size, stride=stride,
                                  padding=padding, dilation=dilation,
                                  groups=groups,
                                  bias=bias, padding_mode=padding_mode)
            nn.init.trunc_normal_(
                self.conv.weight, std=initializer_range)

        def forward(self, signal):
            conv_signal = self.conv(signal)
            return conv_signal
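Instead of printing whole tensors, forward hooks can report which layer first turns finite inputs into NaN or Inf. The following is a minimal debugging sketch, assuming the model is an ordinary `nn.Module`. `attach_nan_hooks` is a hypothetical helper, and the demo deliberately corrupts a weight with `inf` only to trigger the check.

```python
import torch
from torch import nn


def attach_nan_hooks(model):
    """Record leaf modules whose inputs are finite but whose output is not.

    Hypothetical debugging helper. Returns the offending module names (in
    call order) and the hook handles so the hooks can be removed afterwards.
    """
    bad_modules = []
    handles = []
    for name, module in model.named_modules():
        if list(module.children()):
            continue  # skip containers; their leaf modules are hooked individually

        def hook(mod, inputs, output, name=name):
            inputs_ok = all(
                torch.isfinite(t).all() for t in inputs if isinstance(t, torch.Tensor)
            )
            if (isinstance(output, torch.Tensor) and inputs_ok
                    and not torch.isfinite(output).all()):
                bad_modules.append(name)  # this layer introduced the NaN/Inf

        handles.append(module.register_forward_hook(hook))
    return bad_modules, handles


torch.manual_seed(0)
model = nn.Sequential(
    nn.Conv1d(2, 4, kernel_size=3, padding="same"),
    nn.BatchNorm1d(4),
    nn.LeakyReLU(),
)
with torch.no_grad():
    model[0].weight.fill_(float("inf"))  # simulate a weight corrupted by a bad update

bad, handles = attach_nan_hooks(model)
model(torch.randn(8, 2, 16))
for h in handles:
    h.remove()
print(bad)
```

Only the convolution is reported, because the later layers already receive non-finite inputs. When the hooks are attached to a `DecConvBlock`, the reported names are dotted submodule paths such as `dec_conv.seq.0.conv`.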