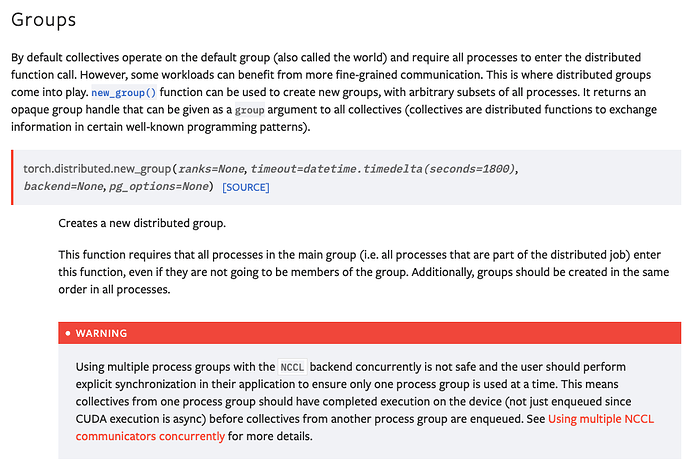

On this page, there is a warning that “Using multiple process groups with the NCCL backend concurrently is not safe”

Does this mean that assigning one specific task to multiple process groups is unsafe? If so, then would switching from NCCL to Gloo be a good solution?

Or does it mean that having multiple ProcessGroups is unsafe, even if all the process groups are disjoint sets with no overlapping elements?

You can use multiple ProcessGroups in NCCL (and it’s also done in some LLM training scripts), but would need to make sure to properly synchronize these allowing them to finish their execution to avoid deadlocks.

I don’t know enough about the Gloo backend and its limitations.

Thanks so much for your response and your help!

To be clear, the synchronization is necessary even if the process groups are completely disjoint sets of ranks, right?

Does that mean that the code in this answer on the pytorch forum should be modified to add explicit synchronization?

In that answer, there are multiple process groups that are simultaneously communicating, but there isn’t explicit synchronization to ensure that one process group finishes before the next one starts.

If I understand correctly, the code should be modified to

import torch

import torch.multiprocessing as mp

import os

def run(rank, world_size):

os.environ['MASTER_ADDR'] = 'localhost'

os.environ['MASTER_PORT'] = '29500'

torch.cuda.set_device(rank)

# global group

torch.distributed.init_process_group(backend='nccl', rank=rank, world_size=world_size)

g0 = torch.distributed.new_group(ranks=[0,1,2,3])

g1 = torch.distributed.new_group(ranks=[4,5,6,7])

# tensor to bcast over group

t = torch.tensor([1]).float().cuda().fill_(rank)

if rank < 4:

torch.distributed.all_reduce(t, group=g0)

torch.distributed.barrier()

if rank > 4:

torch.distributed.all_reduce(t, group=g1)

print('rank: {} - val: {}'.format(rank, t.item()))

def main():

world_size = 8

mp.spawn(run,

args=(world_size,),

nprocs=world_size,

join=True)

if __name__=="__main__":

main()

The only change I made is adding the torch.distributed.barrier() after the first process group is done. Then I changed the else to another if statement indicating the opposite of the first.

Your use case looks alright as something like this could deadlock:

# rank 0

dist.all_reduce(x, group1)

dist.all_reduce(y, group2)

#rank 1

dist.all_reduce(x, group2)

dist.all_reduce(y, group1)

More information can be found in the NCCL docs.