I am trying to reproduce in PyTorch the credit card fraud detection autoencoder from this git repo (https://github.com/curiousily/Credit-Card-Fraud-Detection-using-Autoencoders-in-Keras), and my network looks like this:

    import torch
    import torch.nn as nn

    class Autoencoder(nn.Module):
        def __init__(self):
            super(Autoencoder, self).__init__()
            self.encoder = nn.Sequential(
                nn.Linear(29, 14),
                nn.Tanh(),
                nn.Linear(14, 7),
                nn.ReLU())
            self.decoder = nn.Sequential(
                nn.Linear(7, 14),
                nn.Tanh(),
                nn.Linear(14, 29),
                nn.ReLU())

        def forward(self, x):
            x = self.encoder(x)
            x = self.decoder(x)
            return x

My training loop looks like this:

    for epoch in range(num_epochs):
        for data in train_loader:
            #print(type(data))
            #data = Variable(data).cpu()
            #print(type(data))
            # ===================forward=====================
            output = model(data)
            loss = criterion(output, data)
            # ===================backward====================
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
        # ===================log========================
        print('epoch [{}/{}], loss:{:.4f}'
              .format(epoch + 1, num_epochs, loss.item()))
        history['train_loss'].append(loss.item())

    torch.save(model.state_dict(), './credit_card_model.pth')

Yet my training loss behaves completely differently from what the Keras version achieves. In my case it looks like the model is not learning at all, whereas with Keras the training loss decreases smoothly. Can somebody take a look at what I am doing wrong? All other steps, such as data preparation and preprocessing, are exactly the same.

Thx

Vimal

Based on this Keras model definition:

    input_layer = Input(shape=(input_dim, ))
    encoder = Dense(encoding_dim, activation="tanh",
                    activity_regularizer=regularizers.l1(10e-5))(input_layer)
    encoder = Dense(int(encoding_dim / 2), activation="relu")(encoder)
    decoder = Dense(int(encoding_dim / 2), activation='tanh')(encoder)
    decoder = Dense(input_dim, activation='relu')(decoder)
    autoencoder = Model(inputs=input_layer, outputs=decoder)

it looks like your decoder is wrong and should be:

    self.decoder = nn.Sequential(
        nn.Linear(7, 7),
        nn.Tanh(),
        nn.Linear(7, 29),
        nn.ReLU()
    )

Could you check that and let me know if I’m misunderstanding the Keras code?

The trouble is that even with that change I see a lot of divergence in the first few epochs:

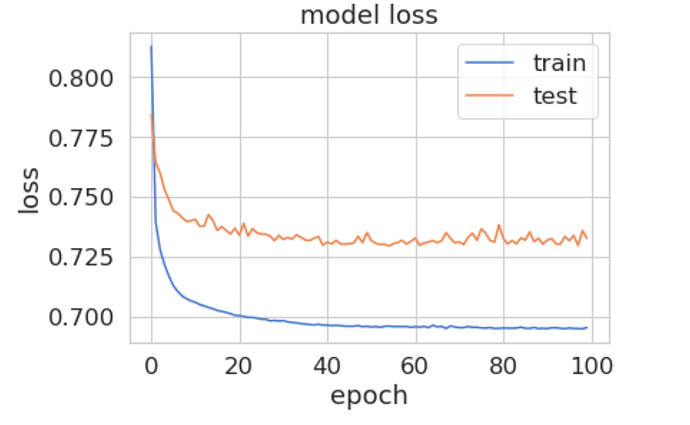

whereas with Keras I get this:

    Epoch 1/100
    227451/227451 [==============================] - 12s 53us/step - loss: 0.8129 - acc: 0.5749 - val_loss: 0.7844 - val_acc: 0.6528
    Epoch 2/100
    227451/227451 [==============================] - 11s 50us/step - loss: 0.7395 - acc: 0.6744 - val_loss: 0.7644 - val_acc: 0.6766
    Epoch 3/100
    227451/227451 [==============================] - 11s 50us/step - loss: 0.7279 - acc: 0.6842 - val_loss: 0.7599 - val_acc: 0.6891
    Epoch 4/100
    227451/227451 [==============================] - 11s 50us/step - loss: 0.7217 - acc: 0.6943 - val_loss: 0.7530 - val_acc: 0.6989
    Epoch 5/100
    227451/227451 [==============================] - 11s 50us/step - loss: 0.7169 - acc: 0.7050 - val_loss: 0.7488 - val_acc: 0.7073
    Epoch 6/100
    227451/227451 [==============================] - 11s 50us/step - loss: 0.7129 - acc: 0.7113 - val_loss: 0.7442 - val_acc: 0.7064
    Epoch 7/100
    227451/227451 [==============================] - 11s 50us/step - loss: 0.7105 - acc: 0.7130 - val_loss: 0.7432 - val_acc: 0.7029
    Epoch 8/100
    227451/227451 [==============================] - 11s 50us/step - loss: 0.7086 - acc: 0.7162 - val_loss: 0.7413 - val_acc: 0.7142
    Epoch 9/100
    227451/227451 [==============================] - 12s 51us/step - loss: 0.7074 - acc: 0.7172 - val_loss: 0.7398 - val_acc: 0.7151
    Epoch 10/100
    227451/227451 [==============================] - 11s 50us/step - loss: 0.7065 - acc: 0.7165 - val_loss: 0.7400 - val_acc: 0.7114

So with Keras, I am seeing a nice smooth fall in training loss.

Training is about to finish; I will post the loss curves for both.

Is Keras printing the training loss for the current batch or is the average taken?

The loss in your PyTorch model also seems to go down, but note that you are only printing the loss of the last seen training batch.

Using an AverageMeter from the ImageNet example might smooth the values out a bit.
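To make the averaging suggestion concrete, here is a minimal sketch of such a meter. It is a simplified stand-in written for illustration, not a copy of the class from the ImageNet example, and the batch losses and sizes fed into it are made-up numbers.

```python
class AverageMeter:
    """Keeps a sample-weighted running average of a scalar such as the loss."""

    def __init__(self):
        self.reset()

    def reset(self):
        # Call this at the start of every epoch
        self.sum = 0.0
        self.count = 0

    def update(self, value, n=1):
        # `value` is the mean loss over a batch containing `n` samples
        self.sum += value * n
        self.count += n

    @property
    def avg(self):
        return self.sum / self.count if self.count else 0.0


# Hypothetical (batch_loss, batch_size) pairs standing in for one epoch
meter = AverageMeter()
for batch_loss, batch_size in [(0.9, 32), (0.7, 32), (0.5, 16)]:
    meter.update(batch_loss, batch_size)

epoch_loss = meter.avg  # average over all samples seen, not just the last batch
print(epoch_loss)
```

In a training loop you would call `meter.update(loss.item(), data.size(0))` after each batch and log `meter.avg` once per epoch.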

Curve from Keras training (look at the blue one):

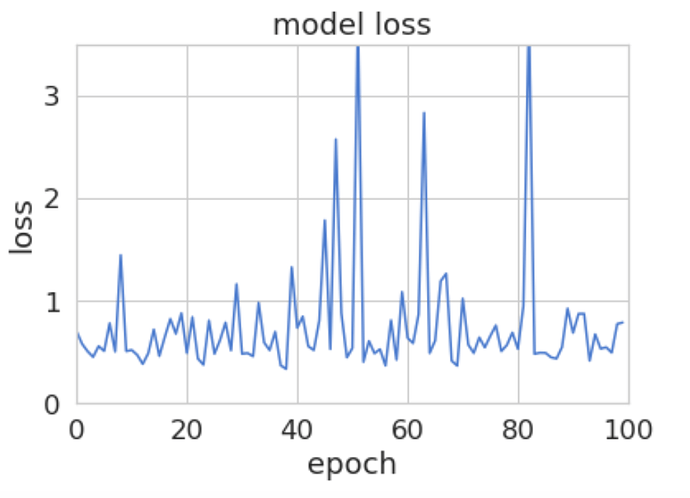

And the one from PyTorch:

The Keras documentation says:

"history object records training loss values at successive epochs". So given this definition, I believe it is not the average. I am now really wondering why the behaviour of two simple networks implemented in two different frameworks should be so different.

I think maybe you are right. Keras does seem to do some funky stuff in its model.fit method; I only had a cursory glance. I will dig into it tomorrow morning. In the meantime,

The differences might come from a lot of sources:

- different model architecture

- different parameter initialization

- regularization

- optimizer

I would start by comparing the initialization methods and write a custom init method for your PyTorch model if necessary.

Also, I’m not sure what activity_regularizer=regularizers.l1(10e-5) means in your Keras model.

Does it take the L1-norm of the output activations and add it to the loss?
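If the answer is yes, a rough PyTorch counterpart could look like the sketch below. Everything here is an assumption made for illustration: the random input batch, the use of `nn.MSELoss`, the corrected 7-unit decoder, and especially the normalization of the penalty (sum of absolute activations divided by the batch size), which may not match what Keras does exactly.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# First encoder layer: the one that carries the activity regularizer in Keras
first = nn.Sequential(nn.Linear(29, 14), nn.Tanh())
# Remaining layers of the autoencoder
rest = nn.Sequential(
    nn.Linear(14, 7), nn.ReLU(),
    nn.Linear(7, 7), nn.Tanh(),
    nn.Linear(7, 29), nn.ReLU(),
)

criterion = nn.MSELoss()   # assumed reconstruction loss
l1_factor = 10e-5          # same literal as in the Keras code, i.e. 1e-4

x = torch.randn(32, 29)    # random stand-in for one batch of features

hidden = first(x)          # activations that get penalized
output = rest(hidden)

mse = criterion(output, x)
# Assumed form of the penalty: factor * sum(|activations|), averaged per sample
penalty = l1_factor * hidden.abs().sum() / x.size(0)
loss = mse + penalty
loss.backward()

print(mse.item(), penalty.item(), loss.item())
```

Setting `l1_factor = 0.0` recovers the plain reconstruction loss, which is a quick way to check whether the penalty explains any difference between the two frameworks.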

Actually, in the Keras code I set activity_regularizer to None, just to make it equivalent to the PyTorch implementation. I didn’t see much difference in behaviour, i.e. the Keras implementation still gave me a smoothly decaying loss graph compared to PyTorch.

That’s a good start.

Did you also change the initialization?

Apparently Keras’ Dense layer uses Xavier init for the weights and zeros for the bias, while PyTorch uses [these] init methods.
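A custom init that mimics those Keras defaults could be sketched as follows. The helper name `keras_like_init` is made up for this example, and the model is written as a flat `nn.Sequential` with the corrected decoder only to keep the snippet short.

```python
import torch
import torch.nn as nn


def keras_like_init(module):
    # Xavier (Glorot) uniform weights and zero biases for every linear layer
    if isinstance(module, nn.Linear):
        nn.init.xavier_uniform_(module.weight)
        nn.init.zeros_(module.bias)


model = nn.Sequential(
    nn.Linear(29, 14), nn.Tanh(),
    nn.Linear(14, 7), nn.ReLU(),
    nn.Linear(7, 7), nn.Tanh(),
    nn.Linear(7, 29), nn.ReLU(),
)

# apply() visits every submodule, so each nn.Linear gets re-initialized
model.apply(keras_like_init)
```

The same `model.apply(keras_like_init)` call works on an `nn.Module` subclass such as the `Autoencoder` class above, since `apply` recurses into the encoder and decoder.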

The model architecture is exactly the same now, after you pointed out the decoder issue.

I have also kept the parameter initialization the same.

I removed regularization from the Keras implementation.

The optimizer is the same: Adam.

Could you post your current PyTorch code so that we could also have a look and debug it?

Since yesterday I have made some great progress. It now compares very well with the Keras version.

Good to hear!

What changes did you make to get similar results?

Very similar results! After changing the model I was still getting the same weird loss graph. Then I realised that within an epoch I was overwriting the loss with the loss of every batch, so at the end of the epoch the loop retained only the loss of the last batch. I now keep all the batch losses during an epoch and take their mean as the loss for the epoch.

Thanks a lot, man, for being a great help. I wouldn’t have figured out that decoder layer issue.
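For anyone landing here later, the logging fix described above can be sketched roughly like this. The random tensor standing in for the dataset, the batch size, the learning rate, and the use of `nn.MSELoss` are all assumptions for illustration and are not taken from the actual training script.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader

torch.manual_seed(0)

# Random stand-in for the preprocessed features (29 columns)
features = torch.randn(256, 29)
train_loader = DataLoader(features, batch_size=32, shuffle=True)

model = nn.Sequential(
    nn.Linear(29, 14), nn.Tanh(),
    nn.Linear(14, 7), nn.ReLU(),
    nn.Linear(7, 7), nn.Tanh(),
    nn.Linear(7, 29), nn.ReLU(),
)
criterion = nn.MSELoss()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)

num_epochs = 3
history = {'train_loss': []}

for epoch in range(num_epochs):
    batch_losses = []                    # collect every batch loss of this epoch
    for data in train_loader:
        output = model(data)
        loss = criterion(output, data)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        batch_losses.append(loss.item())

    # Log the mean over all batches instead of the last batch only
    epoch_loss = sum(batch_losses) / len(batch_losses)
    history['train_loss'].append(epoch_loss)
    print('epoch [{}/{}], loss:{:.4f}'.format(epoch + 1, num_epochs, epoch_loss))
```

A plain mean is fine here because all batches have the same size; with a smaller final batch, weighting each batch loss by its size gives the exact per-sample average.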


Now I have the issue that my reconstruction error histograms (fraud vs. non-fraud), ROC curve and precision-recall curve look totally different from what I saw in the Keras implementation. My source code is at https://github.com/vimalkansal/credit_card_fraud_detection