I’m trying to set up a dataset to train maskrcnn_resnet50_fpn and then calculate loss, IoU, Dice, etc. for a class project.

I’m new to segmentation, and I’m having trouble setting up a DataLoader for a dataset with multiple classes (5 total, including the background class). The dataset consists of 4 classes of brain tumor scans; each class has a set of base images and mask images, both as .tif files.

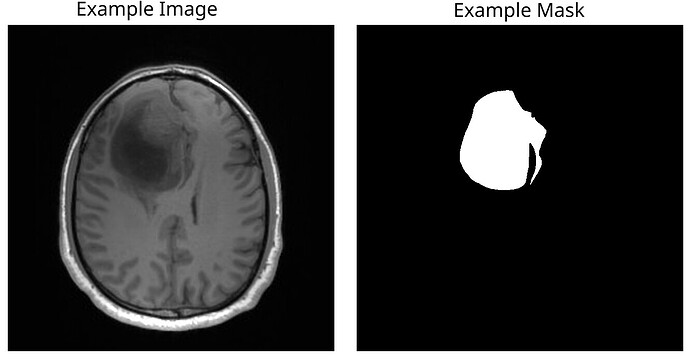

The mask is just a black and white image where the white patch indicates the location/mask of the tumor in the corresponding base image. They are not otherwise annotated.

I’m not sure how to load data where both the images and the masks are just image files, and I’m not sure how to use the resulting multi-class DataLoader to train the Mask R-CNN model.

First, you will have to know the pixel values of each class.

Then you should load the data according to these values, so the model can distinguish the different values in each mask and learn from them.
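Since the masks here are plain black-and-white images, the class would have to come from the folder name rather than from a pixel value. A minimal sketch of how one binary mask plus a class id could be turned into the target dict that torchvision's Mask R-CNN expects (`boxes`, `labels`, `masks`). The helper name `make_target` is hypothetical, and it assumes a single tumor region per image with class id 0 reserved for background:

```python
import torch


def make_target(mask: torch.Tensor, class_id: int) -> dict:
    """Build a Mask R-CNN style target from one binary mask of shape (H, W).

    Assumption: one tumor region per image; class_id is the tumor type
    (1..4 here, with 0 reserved for background).
    """
    binary = mask > 0                      # white pixels -> True
    ys, xs = torch.where(binary)           # row/column indices of tumor pixels
    if ys.numel() == 0:
        raise ValueError("mask contains no foreground pixels")
    # Box in (x_min, y_min, x_max, y_max) order; +1 keeps width/height positive.
    box = torch.stack([xs.min(), ys.min(), xs.max() + 1, ys.max() + 1]).float()
    return {
        "boxes": box.unsqueeze(0),                              # (1, 4)
        "labels": torch.tensor([class_id], dtype=torch.int64),  # (1,)
        "masks": binary.unsqueeze(0).to(torch.uint8),           # (1, H, W)
    }


# Toy example: a 6x6 mask with a 2x3 white patch, labelled as class 4.
mask = torch.zeros(6, 6, dtype=torch.uint8)
mask[1:3, 2:5] = 255
target = make_target(mask, class_id=4)
```

In a real `__getitem__` the mask would come from the `.tif` file and the class id from the dict that maps tumor type to label.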

I think I have my Dataset file (BrainTumorDataset) set up correctly, but there may be an issue with it or with my DataLoader, since the image and target tensors are currently mismatched at the start of the training loop:

RuntimeError: stack expects each tensor to be equal size, but got [512, 512, 3] at entry 0 and [630, 571, 3] at entry 1

Dataset and DataLoader usage in my main file so far:

    dataset = btd.BrainTumorDataset(
        root_dir='/<path>/Brain_Tumor_2D_Dataset',
        #transforms=get_transforms
    )

    data_loader = DataLoader(dataset, batch_size=32, shuffle=True)

    num_epochs = 10

    for epoch in range(num_epochs):
        model.train()
        for images, targets in data_loader:
            # Loop body

I have a transforms helper, but I kept getting issues when I tried applying it to both the image and the target in the Dataset file:

    def get_transforms():
        transforms = []
        transforms.append(v2.ConvertImageDtype(torch.float))
        transforms.append(v2.Resize((100, 100)))
        return transforms

Update on the error: it’s a bit more confusing.

RuntimeError: stack expects each tensor to be equal size, but got [512, 512, 3] at entry 0 and [256, 256, 3] at entry 2

Since this model is meant to be multiclass, I have 4 different types of tumor images/masks loaded in, and each of those images is a different size.

In the case of this error, it seems to have grabbed an image for Meningioma (512x512), but grabbed a target for Glioma (256x256)

In the Dataset file, I managed to sort it so the image paths and mask paths were corresponding for each type, but it looks like they’re not matching up in the training loop

for the same idx:

/<path>/Brain_Tumor_2D_Dataset/Meningioma/images/Meningioma_583.tif

/<path>/Brain_Tumor_2D_Dataset/Meningioma/masks/Meningioma_583_mask.tif

label: 4 which corresponds to Meningioma from the dict I set up in the DataSet file

I’m also not sure why the channel dimension appears at the end of the size when it should be first

Well, judging by the errors you posted, the problem is that your images are not all the same size, so they cannot be stacked together into a batch.
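Besides resizing everything to one size, another option is to stop the DataLoader from stacking at all. torchvision's detection models take a list of image tensors, and the images in that list may have different sizes. A minimal sketch, where `ToyDataset` is a hypothetical stand-in for BrainTumorDataset and the target only carries a dummy label:

```python
import torch
from torch.utils.data import DataLoader, Dataset


class ToyDataset(Dataset):
    """Hypothetical stand-in for BrainTumorDataset: images of varying size."""

    def __init__(self):
        self.sizes = [(512, 512), (256, 256), (630, 571)]

    def __len__(self):
        return len(self.sizes)

    def __getitem__(self, idx):
        h, w = self.sizes[idx]
        image = torch.rand(3, h, w)              # (C, H, W) float image
        target = {"labels": torch.tensor([1])}   # dummy target for illustration
        return image, target


def collate_fn(batch):
    # Regroup [(img, tgt), ...] into ((img, ...), (tgt, ...)) without stacking,
    # so images of different sizes can share a batch.
    return tuple(zip(*batch))


loader = DataLoader(ToyDataset(), batch_size=3, shuffle=False, collate_fn=collate_fn)
images, targets = next(iter(loader))
shapes = [tuple(img.shape) for img in images]
```

With this collate function, `images` is a tuple of tensors and `targets` a tuple of dicts, which can be converted to lists before being passed to the model.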

About your transforms, maybe you should use v2.Compose like this:

    import torch
    from torchvision.transforms import v2

    def get_transforms():
        # Compose chains the transforms into a single callable
        transforms = v2.Compose([
            v2.Resize((100, 100)),
            v2.ConvertImageDtype(torch.float)
        ])
        return transforms

And after that, don't forget to call it when you create your dataset:

    transforms = get_transforms()

    dataset = BrainTumorDataset(
        root_dir='/<path>/Brain_Tumor_2D_Dataset',
        transforms=transforms
    )

So the error I’m seeing is not for the image and target; it’s saying that the different images I’m loading in are not the same size, i.e. the first image is of a Meningioma (512x512) and the next one is of a Glioma (256x256)

After making these changes, the transforms apply to both my image and target, but I’m still getting a size error

RuntimeError: stack expects each tensor to be equal size, but got [512, 100, 100] at entry 0 and [240, 100, 100] at entry 1

It seems like the order of the dimensions is flipped. There should be 3 channels, since I opened the images as RGB in the Dataset, and the next two dimensions should be 100x100 after the transforms are applied.

So the shapes for images after transform should be (3, 100, 100)
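One plausible explanation, assuming the images reach the transform as (H, W, C) tensors (which is what converting a PIL image through a NumPy array gives): a resize that acts on the last two dimensions would treat a [512, 512, 3] tensor as 512 channels of size 512x3 and return [512, 100, 100]. A minimal sketch of moving the channel dimension first before resizing. The helper `to_chw` is hypothetical, and `F.interpolate` is used here only as a stand-in for `v2.Resize`:

```python
import torch
import torch.nn.functional as F


def to_chw(image_hwc: torch.Tensor) -> torch.Tensor:
    """Convert an (H, W, C) uint8 image tensor to float (C, H, W) in [0, 1]."""
    return image_hwc.permute(2, 0, 1).float() / 255.0


# Dummy RGB image laid out as (H, W, C), like the shapes in the first error.
hwc = torch.zeros(512, 512, 3, dtype=torch.uint8)
chw = to_chw(hwc)

# interpolate expects a batch dimension, so add one and remove it afterwards.
resized = F.interpolate(
    chw.unsqueeze(0), size=(100, 100), mode="bilinear", align_corners=False
).squeeze(0)

# Resizing the un-permuted tensor reproduces the shape seen in the error.
wrong = F.interpolate(
    hwc.float().unsqueeze(0), size=(100, 100), mode="bilinear", align_corners=False
).squeeze(0)
```

Here `resized` has shape (3, 100, 100), while `wrong` has shape (512, 100, 100), matching the first entry in the error message.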

Honestly, it’s been difficult to find multiclass segmentation tutorials for Mask R-CNN where the images and masks are both image files

The PennFudan fine-tuning tutorial goes over it with a single class, but I’m not sure how much of that translates to multiple classes