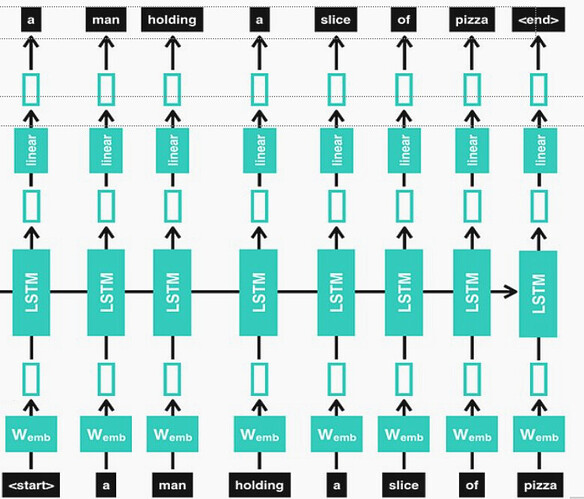

I am facing a problem. I am trying to build a recurrent LSTM model that predicts the next words of a sentence. My goal is a model like the one shown in the image above.

For that, I am using a dataset of 60,000 sentences.

Here is what I have written so far:

```
import random
import re

import numpy as np
import torch
from nltk.corpus import stopwords
from nltk.stem import WordNetLemmatizer


class CFG:
    debug = False
    epochs = 500
    learning_rate = 1.0e-3
    batch_size = 64
    target_cols = ['label']  # target columns
    seed = 42
    num_layers = 20  # Number of LSTM layers (recurrences)

    # Model parameters
    vocab_size = None
    vocabFound_size = None
    embedding_dim = 300
    hidden_dim = 128
    dropout = 0.25
    lstm_dropout_rate = 0.2
    num_lstm_layers = 1
    num_class = None
    device = 'cuda' if torch.cuda.is_available() else 'cpu'  # Use GPU if available


def seed_everything(seed=42):
    random.seed(seed)
    np.random.seed(seed)
    torch.manual_seed(seed)
    torch.cuda.manual_seed(seed)
    torch.backends.cudnn.deterministic = True


seed_everything(CFG.seed)


def preprocess_text(text):
    # Compile regex patterns
    REPLACE_BY_SPACE_RE = re.compile(r'[/(){}\[\]\|@,;]')
    SYMBOLS_RE = re.compile(r'[^0-9a-z #+_]')
    NUMBERS = re.compile(r'\d+')
    STOPWORDS = set(stopwords.words('english')) - {'i'}
    lemmatizer = WordNetLemmatizer()

    # Clean the text
    text = text.lower()  # Lowercase
    text = REPLACE_BY_SPACE_RE.sub(' ', text)  # Replace specific punctuation with spaces
    text = SYMBOLS_RE.sub('', text)  # Remove unnecessary symbols
    text = NUMBERS.sub('', text)  # Remove numbers

    # Tokenize, remove stopwords, and lemmatize
    tokens = [word for word in text.split() if word not in STOPWORDS]
    tokens = [lemmatizer.lemmatize(token) for token in tokens]
    return tokens
```

```
# Define the preprocessing function for sentences
def preprocess_sentences(sentences, max_len=20):
    processed_sentences = []
    for sentence in sentences:
        tokens = preprocess_text(sentence)  # Clean and tokenize the text
        if len(tokens) > max_len:
            tokens = tokens[:max_len]  # Trim to max_len
        else:
            tokens += ["EOL"] * (max_len - len(tokens))  # Pad with EOL
        processed_sentences.append(tokens)
    return processed_sentences


train_processed = preprocess_sentences(train['text'].tolist())
valid_processed = preprocess_sentences(valid['text'].tolist())
test_processed = preprocess_sentences(test['text'].tolist())
```

```
def make_vocabulary_from_tokens(tokenized_sentences, min_doc_freq=3, max_doc_freq=100):
    # Count frequency of each token in dataset
    document_freq = {}
    for tokenized_sentence in tokenized_sentences:
        for token in tokenized_sentence:
            document_freq[token] = document_freq.get(token, 0) + 1

    # Keep only tokens with min_doc_freq < freq < max_doc_freq
    qualified_tokens = {
        token: freq for token, freq in document_freq.items()
        if min_doc_freq < freq < max_doc_freq
    }

    # Add in token_ids for each token (0 and 1 are reserved for special tokens)
    vocab = {token: token_id + 2 for token_id, token in enumerate(qualified_tokens.keys())}

    # Add special tokens
    vocab['EOL'] = 0
    vocab['[UNK]'] = 1
    return vocab, qualified_tokens


vocab, doc_freq = make_vocabulary_from_tokens(train_processed, 0)  # use only train set for this
print(f'{len(vocab)=}')
```
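Since the sample predictions further down contain many `[UNK]` tokens, it may be worth measuring how much of the training text this frequency window maps to `[UNK]`. The following is only a minimal sketch, assuming the same `min_freq < freq < max_freq` rule as `make_vocabulary_from_tokens`; the helper name `unk_rate` and the toy corpus are made up for illustration.

```python
from collections import Counter


def unk_rate(tokenized_sentences, min_freq=0, max_freq=100, pad_token="EOL"):
    """Fraction of non-padding tokens whose frequency falls outside the window."""
    counts = Counter(
        tok for sent in tokenized_sentences for tok in sent if tok != pad_token
    )
    # Same strict inequalities as the vocabulary builder in the post
    kept = {tok for tok, freq in counts.items() if min_freq < freq < max_freq}
    total = sum(counts.values())
    unknown = sum(freq for tok, freq in counts.items() if tok not in kept)
    return unknown / total if total else 0.0


# Toy corpus (hypothetical): "good" occurs 3 times, every other word once.
toy = [["good", "day", "EOL"], ["good", "film", "EOL"], ["good", "plot", "EOL"]]
rate = unk_rate(toy, min_freq=0, max_freq=3)
print(f"share of tokens mapped to [UNK]: {rate:.2f}")
```

On the real data this would be called as `unk_rate(train_processed, 0, 100)`. With 60,000 sentences, an upper bound of 100 occurrences probably removes the most common words, so a high value here would be consistent with the `[UNK]`-heavy output.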

```
from gensim.models import KeyedVectors

CFG.vocab_size = len(vocab)  # Number of unique tokens in the vocabulary
CFG.embedding_dim = 300  # Dimension of Word2Vec embeddings

pretrained_w2v = KeyedVectors.load_word2vec_format('GoogleNews-vectors-negative300.bin', binary=True)

embedding_matrix = np.zeros((CFG.vocab_size, CFG.embedding_dim))

# Initialize embeddings for tokens in the vocabulary
for token, idx in vocab.items():
    if token == 'EOL':  # Padding token
        embedding_matrix[idx] = np.zeros(CFG.embedding_dim)  # Zero vector for padding
    elif token == '[UNK]':  # Unknown token
        embedding_matrix[idx] = np.random.normal(0, 0.1, CFG.embedding_dim)  # Random initialization for unknown tokens
    else:
        # For all other tokens in vocab, use pretrained embeddings if available
        if token in pretrained_w2v:
            embedding_matrix[idx] = pretrained_w2v[token]
        else:
            embedding_matrix[idx] = np.random.normal(0, 0.1, CFG.embedding_dim)  # Random initialization for out-of-vocab tokens

# The embedding matrix is now ready to be used
```
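To see how many vocabulary tokens actually receive a pretrained vector (presumably what `CFG.vocabFound_size` is meant to hold), a count along these lines could be added. This is only a sketch: a plain dict stands in for the loaded `KeyedVectors` object, and `count_pretrained_hits` is a hypothetical helper name.

```python
def count_pretrained_hits(vocab, pretrained, special_tokens=("EOL", "[UNK]")):
    """Return (found, missing) counts over the non-special vocabulary tokens."""
    regular = [tok for tok in vocab if tok not in special_tokens]
    # `tok in pretrained` is the same membership test the loop in the post uses
    found = sum(1 for tok in regular if tok in pretrained)
    return found, len(regular) - found


# Toy stand-ins (hypothetical); with gensim, `pretrained` would be pretrained_w2v.
toy_vocab = {"EOL": 0, "[UNK]": 1, "movie": 2, "gr8": 3, "actor": 4}
toy_pretrained = {"movie": [0.1, 0.2], "actor": [0.3, 0.4]}

found, missing = count_pretrained_hits(toy_vocab, toy_pretrained)
print(f"found={found}, missing={missing}")
```

A large `missing` count would mean that many rows of the embedding matrix start from random noise rather than from Word2Vec.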

```
from torch.utils.data import DataLoader, TensorDataset


def tokens_to_ids(tokenized_sentences, vocab):
    token_ids = []
    for sentence in tokenized_sentences:
        ids = [vocab.get(token, vocab['[UNK]']) for token in sentence]
        token_ids.append(ids)
    return token_ids


train_ids = tokens_to_ids(train_processed, vocab)
valid_ids = tokens_to_ids(valid_processed, vocab)
test_ids = tokens_to_ids(test_processed, vocab)

# Convert to PyTorch tensors
train_tensor = torch.tensor(train_ids, dtype=torch.long)
valid_tensor = torch.tensor(valid_ids, dtype=torch.long)
test_tensor = torch.tensor(test_ids, dtype=torch.long)

train_dataset = TensorDataset(train_tensor)
valid_dataset = TensorDataset(valid_tensor)
test_dataset = TensorDataset(test_tensor)

train_loader = DataLoader(train_dataset, batch_size=CFG.batch_size, shuffle=True)
valid_loader = DataLoader(valid_dataset, batch_size=CFG.batch_size)
test_loader = DataLoader(test_dataset, batch_size=CFG.batch_size)
```

```
import torch
import torch.nn as nn
import torch.nn.functional as F


class RecurrentLSTM(nn.Module):
    def __init__(self, vocab_size, embedding_dim, hidden_dim, pretrained_embeddings):
        super(RecurrentLSTM, self).__init__()
        self.embedding = nn.Embedding(vocab_size, embedding_dim)
        self.embedding.weight = nn.Parameter(torch.tensor(pretrained_embeddings, dtype=torch.float32))
        self.embedding.weight.requires_grad = True
        self.lstm = nn.LSTM(embedding_dim, hidden_dim, batch_first=True)
        self.fc = nn.Linear(hidden_dim, vocab_size)

    def forward(self, inputs, hidden=None):
        embedded = self.embedding(inputs)  # Shape: (batch_size, seq_length, embedding_dim)
        batch_size, seq_length, _ = embedded.shape
        outputs = []
        for t in range(seq_length):
            inp = embedded[:, t, :].unsqueeze(1)  # Shape: (batch_size, 1, embedding_dim)
            out, hidden = self.lstm(inp, hidden)  # out: (batch_size, 1, hidden_dim)
            next_word_logits = self.fc(out.squeeze(1))  # Shape: (batch_size, vocab_size)
            outputs.append(next_word_logits)
        outputs = torch.stack(outputs, dim=1)  # Shape: (batch_size, seq_length, vocab_size)
        return outputs, hidden
```

```
def predict_sentence(model, sentence, vocab, max_len=20):
    """
    Predicts the next 20 words for a given input sentence using the model.

    Args:
        model: Trained LSTM model.
        sentence: Input sentence (str).
        vocab: Vocabulary mapping (token to index).
        max_len: Fixed length for both input and output sequences.

    Returns:
        Predicted sentence (str) of exactly 20 words.
    """
    model.eval()  # Set the model to evaluation mode

    # Preprocess the input sentence
    tokens = preprocess_text(sentence)[:max_len]  # Trim to max_len
    tokens += ["EOL"] * (max_len - len(tokens))  # Pad with "EOL" to max_len

    # Convert tokens to IDs
    input_ids = torch.tensor([[vocab.get(token, vocab['[UNK]']) for token in tokens]], device=CFG.device)

    predictions = []  # List to store predicted token IDs
    hidden = None  # Initialize LSTM hidden states

    # Generate 20 output words
    for _ in range(max_len):
        # Forward pass through the model
        outputs, hidden = model(input_ids, hidden)
        next_word_logits = outputs[:, -1, :]  # Get logits for the last predicted word
        predicted_id = next_word_logits.argmax(1).item()  # Get the most probable word ID
        predictions.append(predicted_id)  # Save the predicted word ID

        # Prepare input for the next prediction step
        input_ids = torch.tensor([[predicted_id]], device=CFG.device)

    # Convert predicted IDs back to tokens
    predicted_tokens = [list(vocab.keys())[list(vocab.values()).index(idx)] for idx in predictions]

    # Return the joined predicted tokens as a single sentence
    return " ".join(predicted_tokens)
```
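As a side note on the last step of `predict_sentence`: the ID-to-token conversion rebuilds two lists from `vocab` for every predicted word. An inverse dictionary built once should give the same tokens. A minimal sketch with a made-up four-entry vocabulary (`id_to_token` is a name introduced here, not part of the code above):

```python
# Hypothetical toy vocabulary in the same token -> id layout as `vocab`
vocab = {"EOL": 0, "[UNK]": 1, "hello": 2, "world": 3}
predictions = [2, 3, 1, 0]

# Lookup as written in predict_sentence: two list rebuilds per predicted ID
slow = [list(vocab.keys())[list(vocab.values()).index(idx)] for idx in predictions]

# Inverse mapping built once, then constant-time lookups
id_to_token = {idx: token for token, idx in vocab.items()}
fast = [id_to_token[idx] for idx in predictions]

print(" ".join(fast))
```

This only affects speed and readability, not the predictions themselves.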

```
import torch.optim as optim
from sklearn.metrics import accuracy_score

# Move the embedding matrix to the device
embedding_matrix_tensor = torch.tensor(embedding_matrix, dtype=torch.float32).to(CFG.device)

# Define the model and set its embedding weights
model = RecurrentLSTM(CFG.vocab_size, CFG.embedding_dim, CFG.hidden_dim, pretrained_embeddings=embedding_matrix).to(CFG.device)
model.embedding.weight.data.copy_(embedding_matrix_tensor)
model.embedding.weight.requires_grad = True  # Allow fine-tuning of embeddings

# Define optimizer and loss function
criterion = nn.CrossEntropyLoss(ignore_index=vocab['EOL'])  # Ignore padding in loss calculation
optimizer = optim.Adam(model.parameters(), lr=CFG.learning_rate)
```

```
for epoch in range(CFG.epochs):
    model.train()
    total_loss = 0
    num_batches = 0

    for batch in train_loader:
        inputs = batch[0].to(CFG.device)
        targets = inputs[:, 1:]  # Next-token targets
        inputs = inputs[:, :-1]

        optimizer.zero_grad()
        outputs, _ = model(inputs)
        outputs = outputs.reshape(-1, CFG.vocab_size)
        targets = targets.reshape(-1)
        loss = criterion(outputs, targets)
        loss.backward()

        # Gradient clipping
        torch.nn.utils.clip_grad_norm_(model.parameters(), max_norm=5)
        optimizer.step()

        total_loss += loss.item()
        num_batches += 1

    avg_train_loss = total_loss / num_batches
    print(f"Epoch {epoch + 1}: Average Training Loss: {avg_train_loss:.4f}")

    # Validation
    model.eval()
    all_targets, all_predictions = [], []
    with torch.no_grad():
        for batch in valid_loader:
            inputs = batch[0].to(CFG.device)
            targets = inputs[:, 1:]
            inputs = inputs[:, :-1]
            outputs, _ = model(inputs)
            predictions = outputs.argmax(dim=-1)

            # Exclude [UNK] and EOL (padding) targets from the accuracy
            mask = (targets != vocab['[UNK]']) & (targets != vocab['EOL'])
            filtered_targets = targets[mask]
            filtered_predictions = predictions[mask]
            all_targets.extend(filtered_targets.cpu().numpy())
            all_predictions.extend(filtered_predictions.cpu().numpy())

    val_accuracy = accuracy_score(all_targets, all_predictions)
    print(f"Epoch {epoch + 1}: Validation Accuracy: {val_accuracy:.4f}")

    # Example prediction
    if epoch == 0 or (epoch + 1) % 5 == 0:
        example_sentence = "A"
        predicted_sentence = predict_sentence(model, example_sentence, vocab)
        print(f"Example Prediction after Epoch {epoch + 1}:")
        print(f"Input Sentence: {example_sentence}")
        print(f"Predicted Sentence: {predicted_sentence}")
```

But it is giving very bad results, like:

Input Sentence: A

Predicted Sentence: recover [UNK] [UNK] [UNK] surprise set gift real [UNK] strongly daily basis big drug [UNK] chance told [UNK] [UNK] [UNK]

Epoch 496: Average Training Loss: 1.0183

Epoch 496: Validation Accuracy: 0.0039

Epoch 497: Average Training Loss: 1.0294

Epoch 497: Validation Accuracy: 0.0043

Epoch 498: Average Training Loss: 1.0322

Epoch 498: Validation Accuracy: 0.0045

Epoch 499: Average Training Loss: 1.0224

Epoch 499: Validation Accuracy: 0.0036

Epoch 500: Average Training Loss: 1.0140

Epoch 500: Validation Accuracy: 0.0040

Example Prediction after Epoch 500:

Input Sentence: A

Predicted Sentence: given daily accumulation [UNK] surprise across top tool real [UNK] shut [UNK] brought stealing hand gentle hand chaos [UNK] [UNK]
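For reading these numbers: `nn.CrossEntropyLoss` reports the mean negative log-likelihood in nats, so its exponential is the perplexity over the targets that are not ignored. In the code above the loss ignores only `EOL` targets, while the accuracy mask also drops `[UNK]` targets, so the two metrics are computed over different sets of tokens (and on different splits). A small sketch of the conversion, using the training losses copied from the log above:

```python
import math

# Average training losses from the log above (epochs 496-500)
train_losses = [1.0183, 1.0294, 1.0322, 1.0224, 1.0140]

mean_loss = sum(train_losses) / len(train_losses)
perplexity = math.exp(mean_loss)  # exp of mean cross-entropy in nats

print(f"mean loss: {mean_loss:.4f}, perplexity: {perplexity:.2f}")
```

A perplexity this low next to an accuracy below 1% may indicate that most of the training loss comes from `[UNK]` targets, which the accuracy deliberately excludes. That is only a guess from the numbers, not something I have verified.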