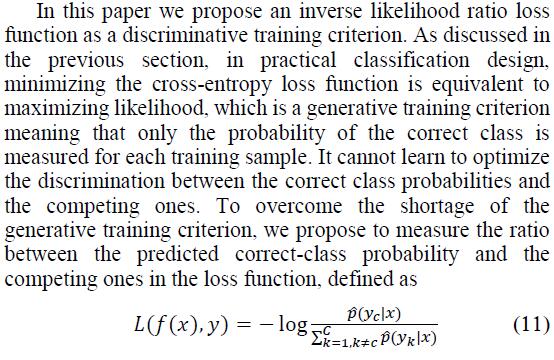

Thanks a lot in advance! The image above shows the loss function.

I wrote the following code for it:

```python
# -*- coding: utf-8 -*-
import torch
import torch.nn as nn
import torch.nn.functional as F


def one_hot(index, classes):
    # index: int64 labels of shape (N,); returns a float mask of shape (N, classes)
    size = index.size() + (classes,)
    view = index.size() + (1,)
    mask = torch.zeros(*size, device=index.device)
    index = index.view(*view)
    # Put 1.0 in column index[i] of row i (plain tensors replace Variable since PyTorch 0.4)
    return mask.scatter_(1, index, 1.0)


class focal_revised_ce(nn.Module):
    def __init__(self, eps=1e-7):
        super(focal_revised_ce, self).__init__()
        self.eps = eps  # stored, but not used in forward()

    def forward(self, input, target):
        y = one_hot(target, input.size(-1))
        # Class probabilities along the class dimension
        Psoft = F.softmax(input, dim=-1)
        Loss = 0.0
        # Loop over all N samples (range(0, N - 1) would skip the last one)
        for i in range(target.size(0)):
            p_t = torch.sum(y[i] * Psoft[i])  # probability of the true class
            Loss += torch.log(p_t / (1.0 - p_t))
        Loss = Loss / target.size(0)
        return Loss
```
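As a side note, PyTorch 1.1 and later ship `torch.nn.functional.one_hot`, which builds the same matrix as the hand-written `one_hot` helper. A minimal check, assuming integer labels of dtype `torch.long` (the labels and class count here are made up for illustration):

```python
import torch
import torch.nn.functional as F

# Illustrative integer labels for 4 samples and 3 classes
target = torch.tensor([0, 2, 1, 2])
num_classes = 3

# Hand-rolled version: scatter 1.0 into a zero matrix, same idea as one_hot above
manual = torch.zeros(target.size(0), num_classes).scatter_(1, target.view(-1, 1), 1.0)

# Built-in version returns int64, so cast to float32 to compare
builtin = F.one_hot(target, num_classes=num_classes).float()

print(torch.equal(manual, builtin))
```

The built-in returns integers, so the `.float()` cast is needed before multiplying with softmax probabilities.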

When I use this loss, it sometimes becomes `inf`. What is happening? Thanks a lot!
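One likely cause, sketched under assumptions: the code computes the mean of `log(p_t / (1 - p_t))` from softmax probabilities. In float32, when the true-class logit dominates the others, `p_t` rounds to exactly `1.0`, so `1 - p_t` is `0` and the ratio becomes `inf`; when `p_t` underflows to `0`, `log(0)` gives `-inf`. Since `p_t = exp(z_t) / sum_j exp(z_j)` for logits `z`, the same quantity equals `z_t - logsumexp_{j != t}(z_j)`, which never forms `p_t` explicitly. `LogOddsLoss` and `naive_log_odds` are hypothetical names; the sketch assumes at least two classes and `int64` targets of shape `(N,)`, and keeps the sign exactly as in the code above (the image may define it differently).

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class LogOddsLoss(nn.Module):
    """Hypothetical stable version: mean over the batch of log(p_t / (1 - p_t)).

    Uses log(p_t / (1 - p_t)) = z_t - logsumexp_{j != t} z_j on the raw logits z.
    Assumes num_classes >= 2 and int64 targets of shape (N,).
    """

    def forward(self, input, target):
        # z_t: logit of the true class for each sample, shape (N,)
        z_t = input.gather(1, target.view(-1, 1)).squeeze(1)
        # Exclude the true class by setting its logit to -inf
        true_mask = F.one_hot(target, num_classes=input.size(1)).bool()
        others = input.masked_fill(true_mask, float("-inf"))
        # logsumexp over the remaining classes stays finite with >= 2 classes
        log_odds = z_t - torch.logsumexp(others, dim=1)
        return log_odds.mean()


def naive_log_odds(input, target):
    # Same formula computed from probabilities, as in the loop in the question
    p = F.softmax(input, dim=1)
    p_t = p.gather(1, target.view(-1, 1)).squeeze(1)
    return torch.log(p_t / (1.0 - p_t)).mean()


logits = torch.tensor([[0.0, 20.0]])  # very confident prediction for class 1
target = torch.tensor([1])
print(naive_log_odds(logits, target))  # -> inf: p_t rounds to 1.0 in float32
print(LogOddsLoss()(logits, target))   # -> 20.0, i.e. 20 - log(exp(0))
```

Working in log space avoids both the division by zero and `log(0)`, and the gradients flow through `gather` and `logsumexp` as usual.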