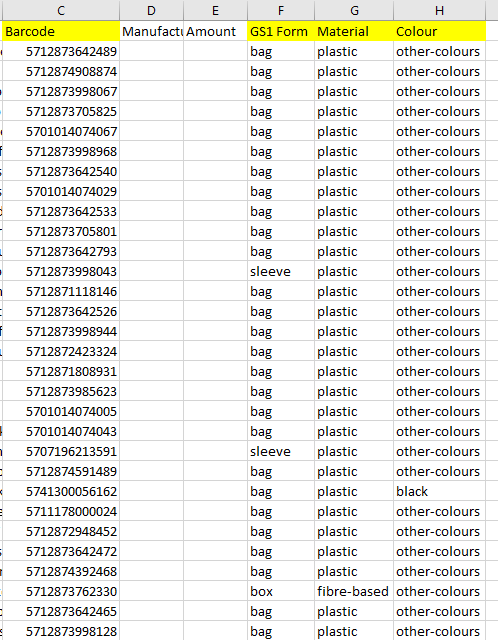

Hello everybody. I got data stored in a csv file and the given barcodes and the pictures are labelled with the barcodes. The csv file also contains three columns with categories where the product barcode is labelled with the three categories. I’ve got a picture of the csv for better understanding.

The script runs right now but the loss function is getting more and more negative. And I’m not sure if the approach is correct, I built also my own dataset loader but ended up using the pytorch dataloader.

I would appreciate it if you can give me some tips and advice

Greetings Jonas

import os

abspath = os.path.abspath(__file__)

print(abspath)

import torch

print(f'Do I have GPU? : {torch.cuda.is_available()}') # if True you have gpu which can be used, otherwise not!

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import matplotlib.image as mpimg

import os

import torch

import torchvision

import torchvision.transforms as transforms

from PIL import Image

abspath = os.path.abspath(__file__)

print(abspath)

print(os.getcwd())

os.chdir('C:/Users/recykla')

print(os.getcwd())

img_dir = "images/train/"

csv_file = "products.csv"

products = pd.read_csv("products.csv")

# label list

classes = np.unique(products['GS1 Form'])

# Define relevant variables for the ML task

batch_size = 64

num_classes = len(classes)

learning_rate = 0.01

num_epochs = 10

# normalizing data ...

transform = transforms.Compose([transforms.Resize((32,32)),

transforms.ToTensor(),

transforms.Normalize(mean=[0.4914, 0.4822, 0.4465],

std=[0.2023, 0.1994, 0.2010])

])

# Device will determine whether to run the training on GPU or CPU.

# device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

"""Loading data with PyTorch"""

from torch.utils.data import DataLoader, Dataset

from torchvision.datasets import ImageFolder

import random

import os

def one_hot_encode(labels, num_classes):

one_hot = np.zeros((len(labels), num_classes))

one_hot[np.arange(len(labels)), labels] = 1

return one_hot

class MultiLabelDataset(Dataset):

def __init__(self, img_dir, csv_file, transform=None):

self.dataframe = pd.read_csv(csv_file)

self.img_dir = img_dir

self.img_paths = [str(barcode) + '.jpg' for barcode in self.dataframe['Barcode'].values]

self.labels = {'GS1 Form':self.dataframe['GS1 Form'].values, 'Material':self.dataframe['Material'].values, 'Colour':self.dataframe['Colour'].values}

# dictionary for each class

self.gs1_form = { val:i for i, val in enumerate(list(set(self.labels['GS1 Form'])))}

self.material = { val:i for i, val in enumerate(list(set(self.labels['Material'])))}

self.colour = { val:i for i, val in enumerate(list(set(self.labels['Colour'])))}

self.transform = transform

def __len__(self):

return len(self.img_paths)

def __getitem__(self, idx):

img_path = os.path.join(self.img_dir, self.img_paths[idx])

img = Image.open(img_path).convert('RGB')

label1 = self.gs1_form[self.labels['GS1 Form'][idx]]

label2 = self.material[self.labels['Material'][idx]]

label3 = self.colour[self.labels['Colour'][idx]]

#

label = torch.tensor([label1, label2, label3])

num_classes = len(self.gs1_form) + len(self.material) + len(self.colour)

label = torch.nn.functional.one_hot(label, num_classes = num_classes)

if self.transform:

img = self.transform(img)

return img, label

class MultiLabelDataLoader(DataLoader):

def __init__(self, dataset, batch_size=batch_size, shuffle=False, num_workers=0, pin_memory = False):

super(MultiLabelDataLoader, self).__init__(dataset, batch_size=batch_size, shuffle=shuffle, num_workers=num_workers, pin_memory=pin_memory)

def __iter__(self):

for i, (data, label) in enumerate(super().__iter__()):

yield (data, label)

def __len__(self):

return len(self.dataset)

# Create an instance of the custom dataset class

train_dataset = MultiLabelDataset(img_dir, csv_file, transform=transform)

print(train_dataset[1])

# Create an instance of the custom data loader class

train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True, pin_memory = False)

data_iter = iter(train_loader)

images, labels = next(data_iter)

print(labels)

len(train_loader)

import torch

import torch.nn as nn

import torch.optim as optim

import torch.nn.functional as F

from tqdm import tqdm

# Define the CNN architecture

class Net(nn.Module):

def __init__(self):

super(Net, self).__init__()

self.conv1 = nn.Conv2d(3, 32, 3)

self.conv2 = nn.Conv2d(32, 64, 3)

self.fc1 = nn.Linear(50176, 128)

self.fc2 = nn.Linear(128, 21)

def forward(self, x):

x = self.conv1(x)

x = F.relu(x)

x = self.conv2(x)

x = F.relu(x)

x = x.view(x.size(0), -1)

x = self.fc1(x)

x = F.relu(x)

x = self.fc2(x)

return x

# Initialize the CNN

print(f'Do I have GPU? : {torch.cuda.is_available()}') # if True you have gpu which can be used, otherwise not!

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

net = Net()

# Define the loss function and optimizer

criterion = nn.MultiLabelSoftMarginLoss()

optimizer = optim.Adamax(net.parameters(), lr=learning_rate)

# data_iter = iter(train_loader)

# images, labels = next(data_iter)

# Train the CNN

for epoch in range(num_epochs):

running_loss = 0

print(running_loss)

for i, (images, labels) in enumerate(tqdm(train_loader, desc=(f'Epoch {epoch + 1}'))):

inputs, labels = images, labels

labels = torch.argmax(labels, dim=1) # Extract class index from one-hot encoded label tensor

optimizer.zero_grad()

outputs = net(inputs)

loss = criterion(outputs, labels)

loss.backward()

optimizer.step()

running_loss += loss.item()

print('Epoch %d loss: %.3f ' % (epoch + 1, running_loss / len(train_loader)))

print('Finished Training')