As the title says, I have been going crazy over my simple network not working well and I finally managed to identify the culprit: the num_workers in my DataLoader.

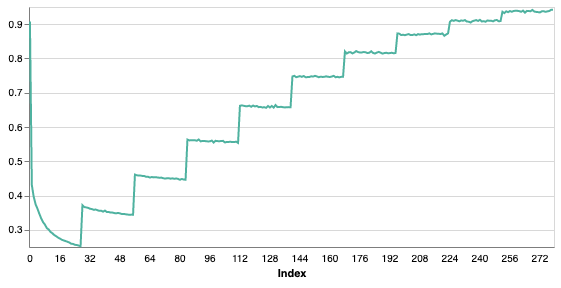

A bit more detail: it’s a simple fully connected network that takes 1D inputs and regresses a set of 1D outputs, trained with an MSE loss. In my initial experiments, copying code from my other networks, I used num_workers=4, trained, and got decent results. One week later I tried to replicate those results with num_workers=0, since my dataset fits entirely in RAM and there is no intensive loading or data augmentation for now, so multiple workers aren’t really needed. After that change, training didn’t work anymore and the loss behaved as in the plot above (edit: every spike in the plot is a new epoch).

With num_workers=4, on the other hand, the MSE loss behaves much more like you would expect: it goes down and converges to a low value (I can’t attach a second screenshot because my account is new).

Furthermore, the average error on my test set after 10 epochs is ~17 (high) for the training with num_workers=0, and ~8 (what I would expect) for num_workers=4. I ran multiple training runs with exactly the same hyperparameters, including the numpy and torch random seeds, with num_workers as the only change, and the results are consistent across runs.
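One way to narrow this down is to check whether the two settings even feed the model the same batches. Below is a minimal sketch, not the actual training code: the synthetic `TensorDataset`, the sizes, and the helper names `make_dataset`, `collect_batches`, and `same_batches` are all assumptions made up for illustration. It seeds the `DataLoader` through its `generator` argument and compares one shuffled epoch under two `num_workers` values.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset


def make_dataset(n_samples=64, n_features=8, seed=0):
    """Build a small synthetic in-memory regression dataset (placeholder data)."""
    g = torch.Generator().manual_seed(seed)
    x = torch.randn(n_samples, n_features, generator=g)
    y = torch.randn(n_samples, 1, generator=g)
    return TensorDataset(x, y)


def collect_batches(dataset, num_workers, seed=0, batch_size=16):
    """Return the input batches of one shuffled epoch, in the order yielded."""
    # A dedicated, seeded generator drives the shuffling in the main process.
    g = torch.Generator().manual_seed(seed)
    loader = DataLoader(
        dataset,
        batch_size=batch_size,
        shuffle=True,
        num_workers=num_workers,
        generator=g,
    )
    return [x.clone() for x, _ in loader]


def same_batches(a, b):
    """True if both epochs contain the same batches in the same order."""
    return len(a) == len(b) and all(torch.equal(p, q) for p, q in zip(a, b))


if __name__ == "__main__":
    ds = make_dataset()
    single = collect_batches(ds, num_workers=0)
    multi = collect_batches(ds, num_workers=4)
    print("identical batch order:", same_batches(single, multi))
```

If the batches match, the data order is probably not what differs between the two runs, and it is worth looking at anything in the dataset or training loop that draws random numbers or mutates shared state, since that can behave differently inside worker processes.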

The only thing I can think of right now is some kind of bug somewhere in PyTorch. Do you guys have any clue about what’s going on? Thank you!

Could you post an executable code snippet so that we could try to reproduce it?