Greetings,

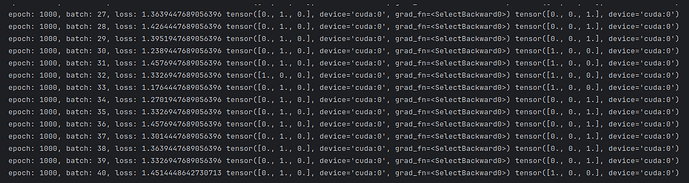

I have built a neural network for a classification problem from Kaggle (horse health classification), but the model is barely learning anything. I have tried training for 20k epochs, but the model still predicts roughly 50-60% of the samples incorrectly. I have preprocessed the data and used oversampling, and my sklearn models performed well on the same data.

Here is my neural network code:

```python
import torch
import torch.nn as nn

class NNModel(nn.Module):
    def __init__(self, inp_size, out_size, hidden):
        super(NNModel, self).__init__()
        self.input_layer = nn.Linear(in_features=inp_size, out_features=hidden)
        self.act1 = nn.LeakyReLU()
        self.hidden_layer = nn.Linear(in_features=hidden, out_features=hidden)
        self.act2 = nn.LeakyReLU()
        self.output_layer = nn.Linear(in_features=hidden, out_features=out_size)
        self.dropout = nn.Dropout(0.1)
        self.softmax = nn.Softmax(dim=1)  # normalize over the class dimension

    def forward(self, input_tensor):
        x = self.input_layer(input_tensor)
        x = self.act1(x)
        x = self.hidden_layer(x)
        x = self.act2(x)
        x = self.output_layer(x)
        x = self.dropout(x)  # dropout applied to the output layer's values
        x = self.softmax(x)  # returns class probabilities
        return x
```
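For comparison, here is a minimal sketch of the same layer layout that returns raw logits instead of probabilities, assuming the loss stays `nn.CrossEntropyLoss` (which applies log-softmax internally). It also moves dropout onto the hidden activations rather than the final outputs. The class name `LogitsNNModel`, the `p_drop` parameter, and the input size of 20 in the usage lines are hypothetical.

```python
import torch
import torch.nn as nn

class LogitsNNModel(nn.Module):
    """Hypothetical variant of NNModel whose forward() returns raw logits."""

    def __init__(self, inp_size, out_size, hidden, p_drop=0.1):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(inp_size, hidden),
            nn.LeakyReLU(),
            nn.Dropout(p_drop),           # dropout on hidden activations
            nn.Linear(hidden, hidden),
            nn.LeakyReLU(),
            nn.Dropout(p_drop),
            nn.Linear(hidden, out_size),  # no softmax: CrossEntropyLoss expects logits
        )

    def forward(self, x):
        return self.net(x)

# Usage with a made-up input size of 20 features and 3 classes
model = LogitsNNModel(inp_size=20, out_size=3, hidden=64)
logits = model(torch.randn(8, 20))
probs = torch.softmax(logits, dim=1)  # only when probabilities are actually needed
preds = logits.argmax(dim=1)          # same classes as probs.argmax(dim=1)
```

Because softmax is monotonic, taking `argmax` over the logits gives the same predicted class as taking it over the probabilities, so the softmax is only needed when you want calibrated-looking scores at inference time.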

Here is the model setup, loss function, and optimizer:

```python
model = NNModel(train_2.shape[1]-1, 3, 512).to('cuda')  # input size, 3 classes, 512 hidden units
optim = torch.optim.Adagrad(model.parameters(), lr=0.01)
criterion = nn.CrossEntropyLoss()
```
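A small sketch of how `nn.CrossEntropyLoss` treats its inputs: it expects unnormalized logits and `int64` class-index targets, and applies log-softmax itself. Feeding it softmax probabilities therefore applies softmax twice, so even a perfectly confident prediction cannot push the loss toward zero. The random logits and targets below are made-up example data.

```python
import math
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(4, 3)            # made-up batch: 4 samples, 3 classes
targets = torch.tensor([0, 2, 1, 2])  # class indices in [0, 3), dtype torch.int64

criterion = nn.CrossEntropyLoss()

# CrossEntropyLoss is log_softmax followed by negative log-likelihood
ce = criterion(logits, targets)
manual = F.nll_loss(F.log_softmax(logits, dim=1), targets)
print(torch.allclose(ce, manual))     # True

# Passing probabilities instead of logits: a "perfect" one-hot prediction
perfect_probs = torch.tensor([[1.0, 0.0, 0.0]])
floor = criterion(perfect_probs, torch.tensor([0]))
expected_floor = math.log(1 + 2 / math.e)  # -log(e / (e + 2)) for 3 classes
print(floor.item(), expected_floor)   # both about 0.551
```

With a 3-class softmax output the loss is bounded below by about 0.551, and the gradients that flow back through the extra softmax can be much smaller, which tends to slow learning.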

Here is the training function:

```python
def trainning(dataLoader, epochs, optimizer, criterion):
    model.train()
    loss_h = []  # loss history, one entry per batch
    for i in range(epochs):
        for batch, (X, y) in enumerate(dataLoader):
            y_hat = model(X)
            loss = criterion(y_hat, y)
            loss_h.append(loss.item())
            loss.backward()
            optimizer.step()
            optimizer.zero_grad()
            if i % 100 == 0:
                print(f'epoch: {i}, batch: {batch}, loss: {loss}', y_hat[0], y[0])
    return loss_h
```
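A minimal sketch of a training loop plus an accuracy check, assuming a model that returns logits. It moves each batch to the model's device, switches between `model.train()` and `model.eval()` so dropout is only active during training, and measures accuracy with `argmax`. The synthetic data, the helper names `train_one_epoch` and `accuracy`, and the swap from Adagrad to Adam are illustrative assumptions, not part of the original code.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset

device = "cuda" if torch.cuda.is_available() else "cpu"

def train_one_epoch(model, loader, optimizer, criterion):
    model.train()                          # dropout active
    total = 0.0
    for X, y in loader:
        X, y = X.to(device), y.to(device)  # batch must be on the model's device
        optimizer.zero_grad()
        logits = model(X)                  # raw logits, no softmax
        loss = criterion(logits, y)        # y: int64 class indices, shape (batch,)
        loss.backward()
        optimizer.step()
        total += loss.item() * X.size(0)
    return total / len(loader.dataset)     # mean loss over the epoch

@torch.no_grad()
def accuracy(model, loader):
    model.eval()                           # dropout disabled
    correct = 0
    for X, y in loader:
        X, y = X.to(device), y.to(device)
        correct += (model(X).argmax(dim=1) == y).sum().item()
    return correct / len(loader.dataset)

# Hypothetical stand-in data: 300 samples, 10 features, 3 linearly separable classes
torch.manual_seed(0)
X = torch.randn(300, 10)
W_true = torch.randn(10, 3)
y = (X @ W_true).argmax(dim=1)             # int64 labels in {0, 1, 2}
loader = DataLoader(TensorDataset(X, y), batch_size=32, shuffle=True)

model = nn.Sequential(
    nn.Linear(10, 64), nn.LeakyReLU(), nn.Dropout(0.1),
    nn.Linear(64, 3),                      # outputs logits
).to(device)
optimizer = torch.optim.Adam(model.parameters(), lr=1e-2)
criterion = nn.CrossEntropyLoss()

losses = [train_one_epoch(model, loader, optimizer, criterion) for _ in range(30)]
acc = accuracy(model, loader)              # training-set accuracy only
print(f"first epoch loss {losses[0]:.3f}, last {losses[-1]:.3f}, accuracy {acc:.2%}")
```

On the real data, the horse-health `DataLoader` would take the place of `loader`, and accuracy is better measured on a held-out split than on the (oversampled) training set.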

Can anyone please help me out? I am very new to PyTorch.