Im training a RAVE model and having some questions.

Its my first time using the library and ml training ever, so forgive my ignorance.

Got a colab pro plan just to avoid disconnections but still getting quite some.

Googling around about batch size & num_workers yield few results, but still I dont get it fully.

-

- The first warning Im worried about is this one. Apparently colab complains about the number of workers. Note that Im running the python script via !python train_rave.py:

UserWarning: This DataLoader will create 8 worker processes in total. Our suggested max number of worker in current system is 2, which is smaller than what this DataLoader is going to create. Please be aware that excessive worker creation might get DataLoader running slow or even freeze, lower the worker number to avoid potential slowness/freeze if necessary.

-

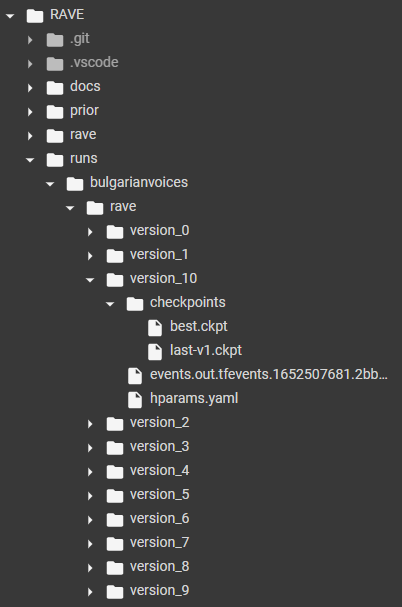

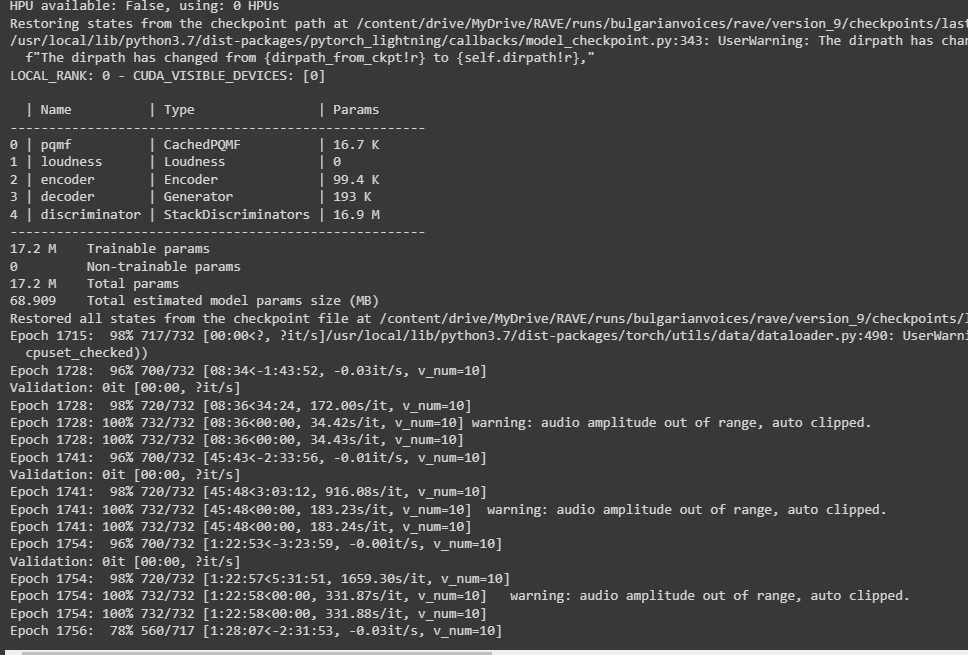

- Something odd is happening with my ckpt’s. They seem to load fine but I get the following warning once I resume a training after a disconnect:

model_checkpoint.py:343: UserWarning: The dirpath has changed from 'runs/bulgarianvoices/rave/version_0/checkpoints' to 'runs/bulgarianvoices/rave/version_1/checkpoints', therefore `best_model_score`, `kth_best_model_path`, `kth_value`, `last_model_path` and `best_k_models` won't be reloaded. Only `best_model_path` will be reloaded.

f"The dirpath has changed from {dirpath_from_ckpt!r} to {self.dirpath!r},

Just want to check with experienced users if this is just normal or something I should worry about. RAVE trains on audio with 8 Batches and 8 workers.

Regarding google drive I also have some doubts.

-

- Are previous ckpt’s at all necessary ?

could I just remove previous runs and just keep the last.ckpt and corresponding run ? My google drive is filling up quite fast otherwise

- Are previous ckpt’s at all necessary ?

After training for a while I also get a “not available” message on the connect colab button , where normally displays RAM and DISK usage. The script still keeps working but is just interesting to me, with a pro account still don’t have much reliability on that sense.

I would like to still use gdrive to keep the ckpt’s , otherwise on every disconnect I would loose all the work. But I guess is slowing the process to have all running from a gdrive instance.

Any tips or references to docs in this realms will be very appretiated. I dont have experience with torch but Im confortable with python/system etc.

This is the project I run in colab https://github.com/acids-ircam/RAVE

tqdm==4.62.3

effortless-config==0.7.0

einops==0.4.0

librosa==0.8.1

matplotlib==3.5.1

numpy==1.20.3

pytorch-lightning==1.6.1

scikit_learn==1.0.2

scipy==1.7.3

soundfile==0.10.3.post1

termcolor==1.1.0

torch==1.11.0

tensorboard==2.8.0

GPUtil==1.4.0

git+https://github.com/caillonantoine/cached_conv.git@v2.3.5#egg=cached_conv

git+https://github.com/caillonantoine/UDLS.git#egg=udls

Its really hard to see the effects on changes made to the code since the waiting period is really long , so I would love to optimize it with colab specific practices. Thinking on running the code in cells instead of from !python