Hi

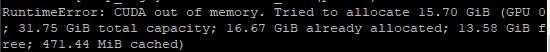

I'm dealing with a memory issue caused by a very large `nn.Embedding` layer, for example:

`self.layer = nn.Embedding(huge_dimension, emb_dim)`

Some context first: I have 2 GPUs, each with 32 GB of VRAM. My model (essentially just the embedding layer) uses about 17 to 18 GB of memory, and I'm using the Adam optimizer.
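A rough back-of-the-envelope estimate may help explain the OOM. Assuming float32 weights (4 bytes per element) and plain dense `torch.optim.Adam`, training keeps the weight, a gradient of the same size, and Adam's two moment buffers (`exp_avg`, `exp_avg_sq`), i.e. roughly 4× the table. The function name and the sizes below are hypothetical, picked only to give an ~18 GB table:

```python
def estimate_adam_training_gb(num_embeddings, embedding_dim, bytes_per_elem=4):
    """Rough memory (GB) to train an nn.Embedding with dense torch.optim.Adam.

    Counts only the weight, its dense gradient and Adam's two moment buffers;
    activations, the CUDA context and allocator overhead are ignored.
    """
    table_bytes = num_embeddings * embedding_dim * bytes_per_elem
    breakdown = {
        "weight": table_bytes,
        "grad": table_bytes,             # dense .grad has the weight's shape
        "adam_exp_avg": table_bytes,     # first-moment buffer
        "adam_exp_avg_sq": table_bytes,  # second-moment buffer
    }
    gb = {name: size / 1e9 for name, size in breakdown.items()}
    gb["total"] = sum(gb.values())
    return gb


# Hypothetical sizes giving an ~18 GB float32 table.
est = estimate_adam_training_gb(num_embeddings=45_000_000, embedding_dim=100)
print(est["weight"], est["total"])  # 18.0 72.0
```

On paper that is about 72 GB before any activations, which is more than one 32 GB GPU and also more than the 64 GB of both GPUs combined.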

Okay uhh…

I expected one 32 GB GPU to be enough for a 17 to 18 GB model, but I get a CUDA out-of-memory error during `loss.backward()`, before the Adam optimizer step even runs.
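A likely reason `loss.backward()` is where it fails: with the default `sparse=False`, the gradient of an `nn.Embedding` is a dense tensor with the same shape as the whole weight, even when a batch only looks up a handful of rows. So backward allocates a second table-sized tensor. A small CPU sketch (the sizes are made up for illustration):

```python
import torch
import torch.nn as nn

# Small stand-in for the real table (the real one has huge_dimension rows).
emb = nn.Embedding(num_embeddings=10_000, embedding_dim=8)  # sparse=False by default
idx = torch.tensor([3, 42, 42, 9_999])  # this batch touches only 3 distinct rows

emb(idx).sum().backward()

grad = emb.weight.grad
print(grad.is_sparse)  # False: a dense tensor
print(grad.shape)      # torch.Size([10000, 8]), same shape as the weight
print((grad.abs().sum(dim=1) > 0).sum().item())  # 3: only 3 rows are non-zero
```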

So far I've tried:

- `nn.Embedding(..., sparse=True)` with `SparseAdam`
- `nn.DataParallel`

For the first one: the CUDA OOM happened in `loss.backward()`, the backpropagation step that computes the gradients before `optimizer.step()`. I thought a sparse embedding layer with `SparseAdam` could help, because I'd heard that SparseAdam only needs the “used part” of the embedding matrix (a sparse gradient holding only the rows that were looked up).

That did fix `loss.backward()`, but now `optimizer.step()` runs out of CUDA memory.
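This seems consistent with how `torch.optim.SparseAdam` allocates its state, as far as I can tell from its implementation: the gradient is sparse, but the two moment buffers `exp_avg` and `exp_avg_sq` are created on the first `step()` as dense tensors the size of the full weight. With an ~18 GB table the step would then need roughly weight + 2 × table ≈ 54 GB. A small CPU check (sizes are arbitrary):

```python
import torch
import torch.nn as nn

emb = nn.Embedding(10_000, 8, sparse=True)
opt = torch.optim.SparseAdam(emb.parameters(), lr=1e-3)

idx = torch.tensor([3, 42, 9_999])
emb(idx).sum().backward()
print(emb.weight.grad.is_sparse)  # True: only the looked-up rows are stored

opt.step()  # the first step lazily creates the optimizer state
state = opt.state[emb.weight]
# Both moment buffers are dense and have the full weight's shape.
print(state["exp_avg"].is_sparse, state["exp_avg"].shape)
print(state["exp_avg_sq"].is_sparse, state["exp_avg_sq"].shape)
```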

For the second one: since I have a second GPU with the same spec, I thought `nn.DataParallel` could solve the problem. However, when `nn.DataParallel` calls `nn.parallel.replicate()`, which copies the model to each GPU, I get a CUDA out-of-memory error.

I'm not sure how `nn.parallel.replicate` works, but it seems to need extra memory to copy my 17 to 18 GB model.
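`nn.DataParallel` splits the batch, not the model: on each forward it replicates the whole module onto every GPU, so each GPU still has to hold the full table, and it does not reduce per-GPU memory. One alternative, close to the idea of copying from the CPU, is to keep the big table in host RAM and send only the looked-up rows to the GPU. This is a minimal sketch, assuming there is enough CPU RAM for the table plus SparseAdam's dense state (roughly 3× the table) and accepting that CPU lookups and updates are slower; `CpuTableModel` and the sizes are hypothetical:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")


class CpuTableModel(nn.Module):
    """Hypothetical model: the big table stays in host RAM, the small head on `device`."""

    def __init__(self, num_embeddings, embedding_dim, device):
        super().__init__()
        self.device = device
        # Large table on the CPU; sparse=True so its gradient holds only looked-up rows.
        self.emb = nn.Embedding(num_embeddings, embedding_dim, sparse=True)
        # Small dense layer on the GPU (or the CPU if no GPU is available).
        self.head = nn.Linear(embedding_dim, 1).to(device)

    def forward(self, idx_cpu):
        rows = self.emb(idx_cpu)     # lookup happens on the CPU
        rows = rows.to(self.device)  # only the gathered rows are copied to the device
        return self.head(rows).squeeze(-1)


model = CpuTableModel(10_000, 8, device)  # no model.to(device): that would move the table
sparse_opt = torch.optim.SparseAdam(model.emb.parameters(), lr=1e-3)  # state stays on CPU
dense_opt = torch.optim.Adam(model.head.parameters(), lr=1e-3)

idx = torch.randint(0, 10_000, (32,))
target = torch.randn(32, device=device)

sparse_opt.zero_grad(set_to_none=True)
dense_opt.zero_grad(set_to_none=True)
loss = F.mse_loss(model(idx), target)
loss.backward()  # gradients flow back through .to() into the CPU table
sparse_opt.step()
dense_opt.step()
```

Two optimizers are used because `SparseAdam` only accepts sparse gradients, while the dense head needs a regular optimizer.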

So, could anyone tell me any of the following?

- How can I deal with a huge embedding layer?
- Why does `optimizer.step()` run out of CUDA memory?
- Why does `nn.parallel.replicate` need extra memory?
- `nn.parallel.replicate` seems to copy the model from GPU to GPU, but I think copying it from the CPU to each GPU would be enough. I just don't know how to do that.
- How can I split my `nn.Embedding` layer across 2 GPUs?
- Can I use one GPU for the forward pass and the other for the backward pass?

Any other comments that might help are welcome too.
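For splitting the embedding over 2 GPUs, one common approach is row-wise model parallelism: keep the first half of the rows on one GPU and the second half on the other, and route each index to its shard. This is a minimal sketch; `ShardedEmbedding` is a hypothetical name, not a PyTorch API, and it falls back to two CPU "devices" so it runs anywhere:

```python
import torch
import torch.nn as nn


class ShardedEmbedding(nn.Module):
    """Hypothetical row-sharded embedding (not a PyTorch API).

    Rows [0, split) live on devices[0], rows [split, num_embeddings) on devices[1];
    the output is gathered on devices[0].
    """

    def __init__(self, num_embeddings, embedding_dim, devices, sparse=False):
        super().__init__()
        self.split = num_embeddings // 2
        self.devices = devices
        self.shards = nn.ModuleList([
            nn.Embedding(self.split, embedding_dim, sparse=sparse).to(devices[0]),
            nn.Embedding(num_embeddings - self.split, embedding_dim, sparse=sparse).to(devices[1]),
        ])

    def forward(self, idx):
        idx = idx.to(self.devices[0])
        low = idx < self.split  # which indices belong to the first shard
        low_rows = self.shards[0](idx[low])
        high_idx = (idx[~low] - self.split).to(self.devices[1])
        high_rows = self.shards[1](high_idx).to(self.devices[0])
        # Scatter both results back into the original index order.
        out = low_rows.new_zeros((*idx.shape, low_rows.shape[-1]))
        out[low] = low_rows
        out[~low] = high_rows
        return out


# Use two GPUs if available, otherwise two CPU "devices" so the sketch still runs.
if torch.cuda.device_count() >= 2:
    devices = [torch.device("cuda:0"), torch.device("cuda:1")]
else:
    devices = [torch.device("cpu"), torch.device("cpu")]

emb = ShardedEmbedding(10, 4, devices, sparse=True)
opt = torch.optim.SparseAdam(emb.parameters(), lr=1e-3)

idx = torch.tensor([[0, 4, 5], [9, 2, 7]])
out = emb(idx)
out.sum().backward()
opt.step()
```

By the same rough arithmetic, each half of an ~18 GB table with dense Adam would still need about 4 × 9 GB ≈ 36 GB per GPU, so splitting alone may not be enough; with `sparse=True` and `SparseAdam` it is closer to 3 × 9 GB ≈ 27 GB per GPU before activations.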

Thanks!