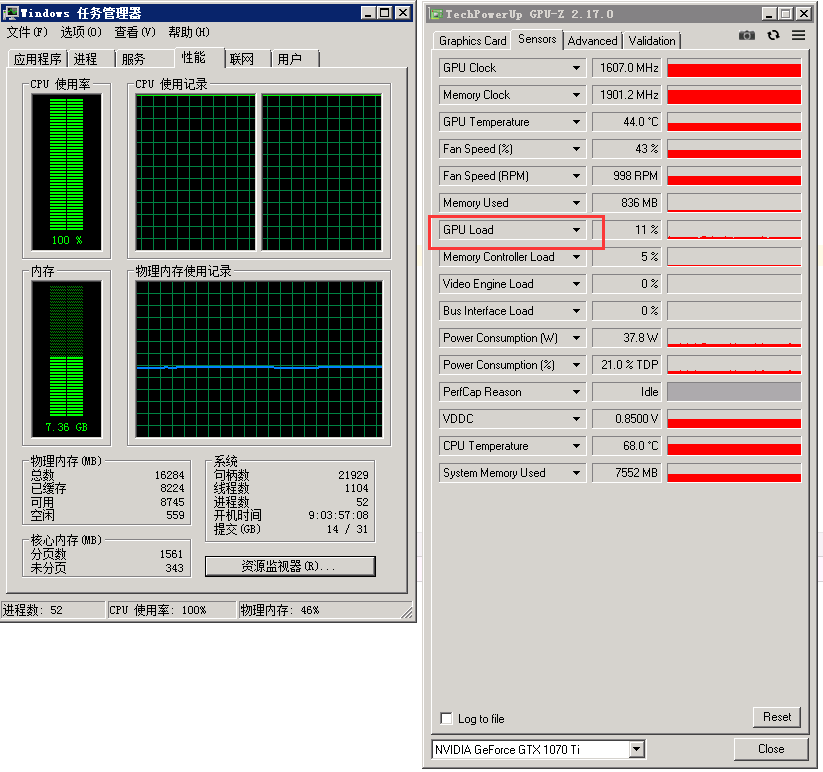

It seems that nn.functional.interpolate is running on the CPU instead of the GPU.

CPU utilization is at 100%, while GPU utilization is only 11%.

Here is the relevant part of my model's forward method, which calls nn.functional.interpolate.

Can nn.functional.interpolate run on CUDA?

    def forward(self, x):
        x = nn.functional.interpolate(x, scale_factor=self.scale, mode='bicubic', align_corners=True)
        return x

Has anyone else encountered this problem?


I cannot reproduce this issue.

Are you sure F.interpolate is using the CPUs?
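F.interpolate takes no device argument. Like other PyTorch tensor ops, it runs on the device of its input tensor, so one quick check is to compare the input and output devices. The helper name `upsample` and the tensor shapes below are illustrative assumptions, not from the original post. If the tensor passed to `forward` was never moved with `.to('cuda')`, the interpolation would run on the CPU, which is one plausible explanation for high CPU and low GPU utilization.

```python
import torch
import torch.nn.functional as F


def upsample(x: torch.Tensor) -> torch.Tensor:
    # F.interpolate has no device argument; it dispatches on x's device
    out = F.interpolate(x, scale_factor=2, mode="bicubic", align_corners=True)
    # The result is allocated on the same device as the input
    assert out.device == x.device
    return out


# Arbitrary example shape; a tensor created without device= lives on the CPU
x = torch.randn(2, 3, 16, 16)
out = upsample(x)
print(out.device, tuple(out.shape))  # cpu (2, 3, 32, 32)

if torch.cuda.is_available():
    # Moving the input is what moves the computation to the GPU
    out_gpu = upsample(x.to("cuda"))
    print(out_gpu.device)
```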

This code snippet keeps the GPU at 100% utilization and uses a single CPU core, which seems fine:

    import time

    import torch
    import torch.nn as nn
    import torch.nn.functional as F


    class MyModel(nn.Module):
        def __init__(self, scale_factor=2):
            super(MyModel, self).__init__()
            self.scale_factor = scale_factor

        def forward(self, x):
            x = F.interpolate(x, scale_factor=self.scale_factor, mode='bicubic', align_corners=True)
            return x


    model = MyModel()  # no parameters, so the model itself needs no .to('cuda')

    b, c, h, w = 64, 3, 128, 128
    x = torch.randn(b, c, h, w, device='cuda')  # input is created directly on the GPU

    nb_iters = 100000

    # CUDA kernels run asynchronously, so synchronize before reading each timestamp
    torch.cuda.synchronize()
    t0 = time.perf_counter()
    for _ in range(nb_iters):
        output = model(x)
    torch.cuda.synchronize()
    t1 = time.perf_counter()

    print('Took {}s, {}s per iter'.format((t1 - t0), (t1 - t0) / nb_iters))

Could you try this code?
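The snippet above times the loop with `time.perf_counter()` between `torch.cuda.synchronize()` calls. A sketch of an alternative, assuming you want to compare CPU and GPU timings for the same input, uses `torch.cuda.Event` for GPU tensors and falls back to wall-clock timing on the CPU. The helper name `time_interpolate`, the warm-up call, and the iteration count are illustrative choices, not part of the original code.

```python
import time

import torch
import torch.nn.functional as F


def time_interpolate(x: torch.Tensor, iters: int = 100) -> float:
    """Return the average seconds per bicubic F.interpolate call on x's device."""

    def step() -> torch.Tensor:
        return F.interpolate(x, scale_factor=2, mode="bicubic", align_corners=True)

    step()  # warm-up: the first call may include one-time setup cost
    if x.is_cuda:
        # CUDA events are recorded on the GPU stream, so they time the kernels
        start = torch.cuda.Event(enable_timing=True)
        end = torch.cuda.Event(enable_timing=True)
        start.record()
        for _ in range(iters):
            step()
        end.record()
        end.synchronize()  # block until the GPU has reached the `end` event
        return start.elapsed_time(end) / 1000 / iters  # elapsed_time is in ms
    t0 = time.perf_counter()
    for _ in range(iters):
        step()
    return (time.perf_counter() - t0) / iters


x = torch.randn(8, 3, 64, 64)
print(f"CPU: {time_interpolate(x):.6f}s per iter")
if torch.cuda.is_available():
    print(f"GPU: {time_interpolate(x.to('cuda')):.6f}s per iter")
```

Timing a CPU tensor and a GPU copy of the same tensor side by side should make it clear which device is doing the work in a given setup.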

It was all my fault.
