Example

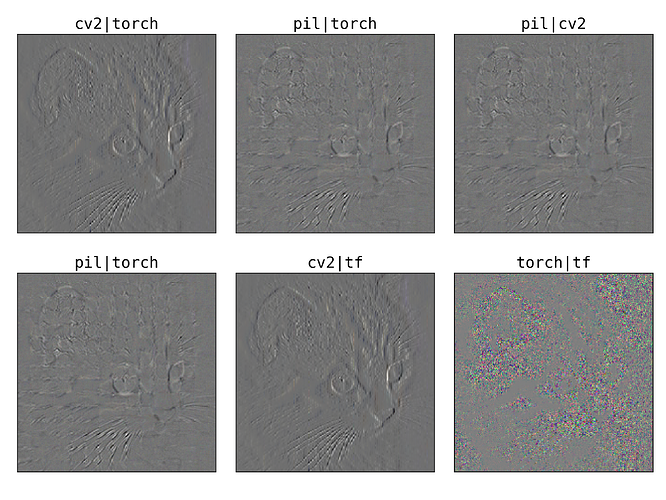

The differences between `interpolate()` and `Image.resize()` appear minor, but they change benchmarking results.

import io

import requests

import numpy as np

import PIL

import matplotlib.pyplot as plt

import torch

from torch.nn.functional import interpolate

def test_dif(dtype="float64", mode="bilinear", show=False):

sig = lambda x, msg="": print("%s\tmin: %.4f, max: %.4f mean: %.4f, std: %.4f \tshape: %s, dtype: %s"%(msg, x.min(), x.max(), x.mean(), x.std(), str(tuple(x.shape)), str(x.dtype)))

url = ("https://ichef.bbci.co.uk/news/976/cpsprodpb/10207/production/_116155066_campercats.png")

pimg = PIL.Image.open(io.BytesIO(requests.get(url).content))

tensor = torch.from_numpy((np.array(pimg)/255).astype(dtype)).permute(2, 0, 1).contiguous()

tensor = tensor.view(1, *tensor.shape)

print(dtype, mode, "Resize test vs interpolate, ", pimg.size)

new_size = [512, (512*pimg.size[0])//pimg.size[1]]

resample = {"bilinear":PIL.Image.BILINEAR, "bicubic":PIL.Image.BICUBIC}

pimg_sz = (np.array(pimg.resize(size=new_size[::-1], resample=resample[mode]))/255).astype(dtype)

ptensor = interpolate(tensor, size=new_size, mode=mode, align_corners=False)

sig(ptensor, msg=" interpolate() ")

sig(pimg_sz, msg=" Image.resize()")

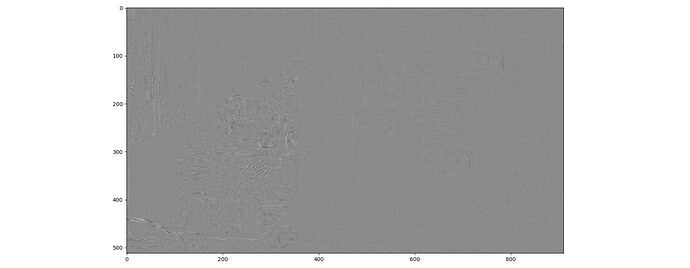

if show:

ntensor = ptensor[0].numpy().transpose(1, 2, 0)

diff = ntensor - pimg_sz

diff = (diff - diff.min())/(diff.max() - diff.min())

plt.figure(figsize=(18, 7))

# plt.subplot(131)

# plt.imshow(pimg_sz)

# plt.subplot(132)

# plt.imshow(ntensor)

# plt.subplot(133)

plt.imshow(diff)

plt.tight_layout()

plt.show()

if __name__ == "__main__":

test_dif("float64", "bicubic")

test_dif("float32", "bicubic")

test_dif("float64", "bilinear")

test_dif("float32", "bilinear", show=True)
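One likely contributor to the gap, stated here as an assumption rather than something the script proves: Pillow's `resize()` widens its filter when shrinking an image, while `interpolate()` by default looks only at the nearest source samples (newer PyTorch releases expose an `antialias` flag to change this). The pure-Python 1D sketch below is a hypothetical illustration of that idea, not either library's implementation; `resize_linear_1d` and its border handling are made up for the demo.

```python
import math


def triangle(x):
    """Linear (tent) filter with support [-1, 1]."""
    x = abs(x)
    return 1.0 - x if x < 1.0 else 0.0


def resize_linear_1d(src, out_len, antialias):
    """Resample a 1D signal with a tent filter and half-pixel centres.

    With antialias=True the filter is stretched by the shrink factor, so
    every source sample contributes. Borders are replicated (a simplification).
    """
    scale = len(src) / out_len
    width = max(scale, 1.0) if antialias else 1.0
    out = []
    for i in range(out_len):
        center = (i + 0.5) * scale - 0.5  # same mapping as align_corners=False
        lo, hi = math.floor(center - width), math.ceil(center + width)
        acc = wsum = 0.0
        for k in range(lo, hi + 1):
            w = triangle((k - center) / width)
            idx = min(max(k, 0), len(src) - 1)  # replicate the border sample
            acc += w * src[idx]
            wsum += w
        out.append(acc / wsum)
    return out


# One bright sample out of every four; the true average is 0.25.
src = [1.0, 0.0, 0.0, 0.0] * 4
plain = resize_linear_1d(src, 4, antialias=False)
smooth = resize_linear_1d(src, 4, antialias=True)
print("no antialias:", plain)   # misses every bright sample
print("antialias:   ", smooth)  # interior outputs recover 0.25
```

In the script above the shrink factor is only about 1.07 (549 to 512 rows), so any effect of this kind would be small, which is at least consistent with the tiny gaps in the reported statistics.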

Result

    float64 bicubic Resize test vs interpolate, (976, 549)
     interpolate()  min: -0.0877, max: 1.0536 mean: 0.3292, std: 0.2270  shape: (1, 3, 512, 910), dtype: torch.float64
     Image.resize() min: 0.0000, max: 1.0000 mean: 0.3292, std: 0.2266  shape: (512, 910, 3), dtype: float64

    float32 bicubic Resize test vs interpolate, (976, 549)
     interpolate()  min: -0.0877, max: 1.0536 mean: 0.3292, std: 0.2270  shape: (1, 3, 512, 910), dtype: torch.float32
     Image.resize() min: 0.0000, max: 1.0000 mean: 0.3292, std: 0.2266  shape: (512, 910, 3), dtype: float32

    float64 bilinear Resize test vs interpolate, (976, 549)
     interpolate()  min: 0.0000, max: 1.0000 mean: 0.3292, std: 0.2261  shape: (1, 3, 512, 910), dtype: torch.float64
     Image.resize() min: 0.0000, max: 1.0000 mean: 0.3292, std: 0.2260  shape: (512, 910, 3), dtype: float64

    float32 bilinear Resize test vs interpolate, (976, 549)
     interpolate()  min: 0.0000, max: 1.0000 mean: 0.3292, std: 0.2261  shape: (1, 3, 512, 910), dtype: torch.float32
     Image.resize() min: 0.0000, max: 1.0000 mean: 0.3292, std: 0.2260  shape: (512, 910, 3), dtype: float32
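The bicubic rows show `interpolate()` leaving the [0, 1] range (min -0.0877, max 1.0536) while `Image.resize()` stays inside it. A plausible explanation: cubic kernels have negative lobes and overshoot at sharp edges, and Pillow returns an 8-bit image here, so its values are clipped before the division by 255. The sketch below reproduces the overshoot on a 1D step edge. The kernel parameter values (a = -0.75 for PyTorch, a = -0.5 for Pillow) are my understanding of the two libraries and should be treated as assumptions; `cubic_kernel` and `sample` are hypothetical helpers, not library code.

```python
import math


def cubic_kernel(x, a):
    """Cubic convolution kernel with free parameter a."""
    x = abs(x)
    if x <= 1.0:
        return (a + 2.0) * x**3 - (a + 3.0) * x**2 + 1.0
    if x < 2.0:
        return a * x**3 - 5.0 * a * x**2 + 8.0 * a * x - 4.0 * a
    return 0.0


def sample(signal, pos, a):
    """Interpolate `signal` at fractional index `pos` from 4 neighbours."""
    base = math.floor(pos)
    total = 0.0
    for k in range(base - 1, base + 3):
        idx = min(max(k, 0), len(signal) - 1)  # replicate the border sample
        total += signal[idx] * cubic_kernel(pos - k, a)
    return total


# Step edge from 0 to 1, sampled at quarter-pixel positions.
step = [0.0] * 4 + [1.0] * 4
positions = [i / 4 for i in range(4 * (len(step) - 1) + 1)]

raw = [sample(step, p, a=-0.75) for p in positions]       # assumed PyTorch value
raw_half = [sample(step, p, a=-0.5) for p in positions]   # assumed Pillow value
clamped = [min(max(v, 0.0), 1.0) for v in raw]            # mimics 8-bit clipping

print("a=-0.75 range:", min(raw), max(raw))
print("a=-0.5  range:", min(raw_half), max(raw_half))
print("clamped range:", min(clamped), max(clamped))
```

If that explanation holds, calling `.clamp(0, 1)` on the output of `interpolate()` would remove the range mismatch, though not the remaining difference in kernel shape.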