Hi everyone!

I am wondering why these two outputs are different:

import torch

from torch import nn

my_data = torch.tensor([1,2,3], dtype=torch.float32).repeat(1000, 1)

weights = torch.rand((3,2))

out = torch.matmul(my_data, weights)

print(out)

linear = nn.Linear(3, 2)

output_linear = linear(my_data)

print(output_linear)

out is not close to output_linear…

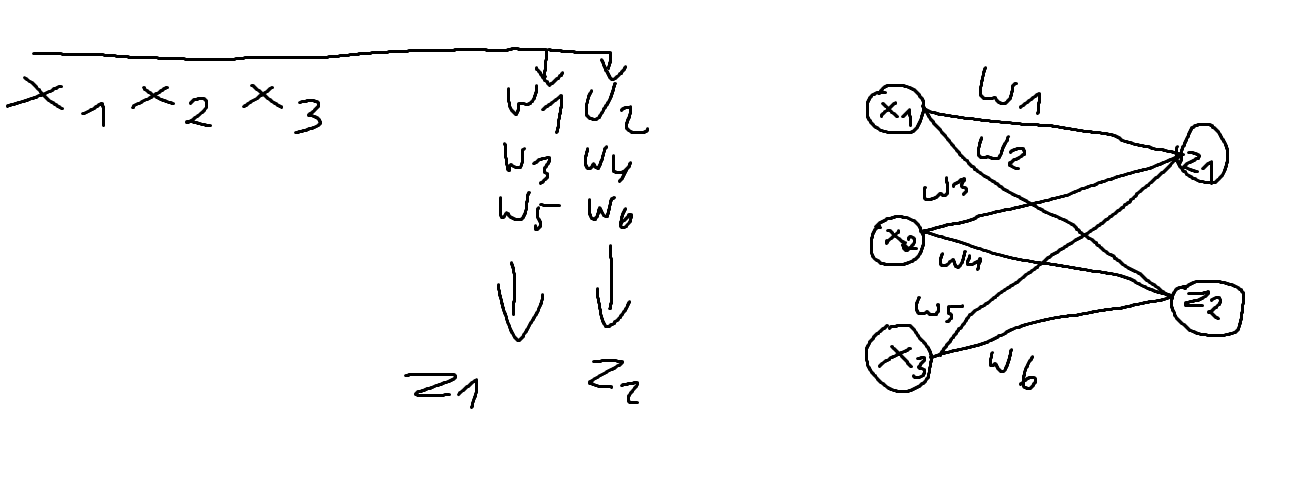

I would like to recreate the schema above, and I think my torch.matmul multiplies it correctly, but I don't know why nn.Linear gives me different results.

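Obviously, you have to compare the module with something like torch.matmul(my_data, linear.weight.T) + linear.bias, since nn.Linear uses its own randomly initialized weight and bias rather than your weights tensor.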

hmmm,

out = torch.matmul(my_data, weights) + 1.

linear = nn.Linear(3, 2)

this way?

Like this, @Damian_Andrysiak:

>>> linear = torch.nn.Linear(3, 3)

>>> inputs = torch.rand(3, 3)

>>>

>>> linear(inputs)

tensor([[-0.4848, 0.4174, -0.1633],

[-0.4428, 0.5404, -0.1470],

[-0.7272, 0.0770, 0.0321]], grad_fn=<AddmmBackward0>)

>>> torch.mm(inputs, linear.weight.T).add(linear.bias)

tensor([[-0.4848, 0.4174, -0.1633],

[-0.4428, 0.5404, -0.1470],

[-0.7272, 0.0770, 0.0321]], grad_fn=<AddBackward0>)

>>>

You were using your own random weights and a constant 1 instead of the layer's parameters (linear.weight and linear.bias).
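
Applied to the shapes from the original question, the same check might look like the sketch below. It assumes a fixed seed purely for reproducibility, and the names manual, custom, matches_layer and matches_custom are illustrative, not from the posts above. The second half shows one possible way to go in the other direction: copying a hand-made weight matrix into an nn.Linear so that the layer reproduces the plain matmul.

```python
import torch
from torch import nn

torch.manual_seed(0)  # assumption: seed chosen only so the run is repeatable

# Same input as in the question: 1000 rows of [1, 2, 3], shape (1000, 3).
my_data = torch.tensor([1, 2, 3], dtype=torch.float32).repeat(1000, 1)
linear = nn.Linear(3, 2)

# nn.Linear stores weight as (out_features, in_features), hence the transpose.
manual = torch.matmul(my_data, linear.weight.T) + linear.bias
matches_layer = torch.allclose(manual, linear(my_data), atol=1e-5)

# Other direction: make a bias-free layer reproduce a hand-made (3, 2) matrix.
weights = torch.rand((3, 2))
custom = nn.Linear(3, 2, bias=False)
with torch.no_grad():
    custom.weight.copy_(weights.T)  # layer expects shape (2, 3)

matches_custom = torch.allclose(
    custom(my_data), torch.matmul(my_data, weights), atol=1e-5
)
print(matches_layer, matches_custom)
```

torch.allclose is used instead of == because the fused and unfused code paths may differ in the last few floating-point bits.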

OK, that works for me. Could you please tell me whether I am doing it the right way? I mean the weight multiplication, as in my drawing:

output = torch.matmul(x, self.weights_ih) + self.bias_ih

where:

self.weights_ih = torch.rand((input_features, input_features), device=device)

self.bias_ih = torch.rand((input_features,), device=device)
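
One way to sanity-check this formula is to compare it against torch.nn.functional.linear, which expects the weight in (out_features, in_features) layout. The sketch below is only an illustration under stated assumptions: input_features = 3, a batch of 4 rows, and the CPU device are made-up values, and the tensors are plain variables rather than attributes of a class.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)           # assumption: seed only for repeatability
device = torch.device("cpu")   # assumption: the post does not say which device
input_features = 3             # assumption: illustrative size

x = torch.rand(4, input_features, device=device)  # hypothetical batch of 4 rows

weights_ih = torch.rand((input_features, input_features), device=device)
bias_ih = torch.rand((input_features,), device=device)

# Formula from the post: weight laid out as (in_features, out_features).
output = torch.matmul(x, weights_ih) + bias_ih

# F.linear computes x @ weight.T + bias, so pass the transpose.
reference = F.linear(x, weights_ih.T, bias_ih)

same = torch.allclose(output, reference, atol=1e-5)
print(output.shape, same)
```

If these tensors are meant to be trained inside an nn.Module, they would typically be wrapped in nn.Parameter so the optimizer can see them; the post does not say whether that is the goal.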