Hi,

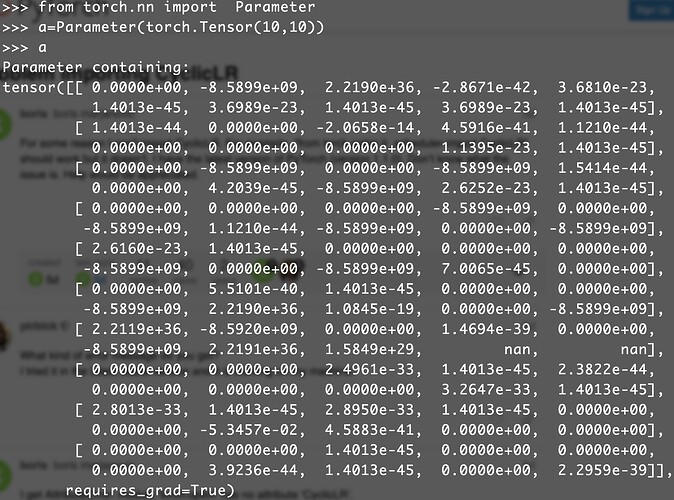

When I define a Parameter like this:

import torch
from torch.nn import Parameter

a = Parameter(torch.Tensor(10, 10))

I found that the parameter contains NaN values.

Does a Parameter have to be initialized manually to avoid NaN? Or is my way of defining the Parameter wrong?

nutszebra (Ikki Kishida)

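The NaN comes from torch.Tensor, not from Parameter. torch.Tensor(10, 10) only allocates memory and does not initialize it, so the values are whatever happened to be in that memory, which can include NaN.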
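If you want to see this for yourself, here is a minimal sketch (the variable names are just for illustration). It checks an uninitialized tensor for NaN with torch.isnan and compares it against one created with explicit values:

```python
import torch

# torch.Tensor(10, 10) allocates memory without initializing it,
# so the contents are arbitrary leftover values.
raw = torch.Tensor(10, 10)
has_nan = bool(torch.isnan(raw).any())  # may differ from run to run

# A tensor created with explicit values is deterministic.
zeros = torch.zeros(10, 10)
zeros_has_nan = bool(torch.isnan(zeros).any())

print("uninitialized tensor contains NaN:", has_nan)
print("zero tensor contains NaN:", zeros_has_nan)
```

Whether the first check reports NaN depends on what was in memory, so you may not reproduce it every time.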

This topic is relevant and should be helpful: Nan in torch.Tensor.

If you would like to initialize with random values, you can use torch.rand instead of torch.Tensor.
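Something like the following should work. It is only a sketch: the second variant, which fills an empty tensor using torch.nn.init.xavier_uniform_, is my own suggestion as one possible alternative and not something your code requires.

```python
import torch
from torch.nn import Parameter

# torch.rand samples uniformly from [0, 1), so every element is a valid number.
a = Parameter(torch.rand(10, 10))

# Alternative: allocate first, then fill in place with an initializer.
b = Parameter(torch.empty(10, 10))
torch.nn.init.xavier_uniform_(b)

print(torch.isnan(a).any().item(), torch.isnan(b).any().item())
```

Either way, the parameter is explicitly initialized before use, so it no longer depends on leftover memory contents.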

Thank you! That’s what I want!