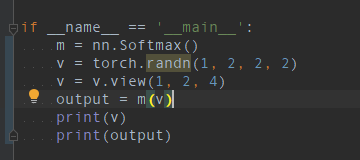

Could anyone help me explain this result? And how can I obtain the correct one?

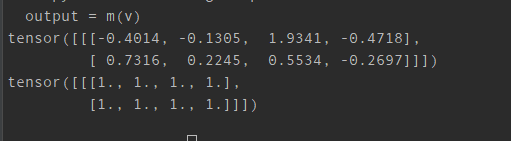

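As the following experiment shows, when `dim` is omitted, softmax implicitly uses dim=0 for the 3-D tensor view `w` but dim=1 for the original 4-D tensor `v`. Because the first dimension of the view has size 1, softmax over it returns all ones. To get the result you intend, pass `dim` explicitly, as the deprecation warning suggests. A discussion about `dim` on Stack Overflow might also help you.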
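
The implicit choice seems to depend only on the number of dimensions. As far as I know, the legacy rule in `torch.nn.functional` picks 0 for 0-D, 1-D and 3-D inputs and 1 for everything else. Here is a minimal sketch of that rule under that assumption; `implicit_softmax_dim` is a hypothetical helper name of mine, not a PyTorch function, and the check simply compares the implicit call against the explicit one.

```python
import warnings

import torch
import torch.nn.functional as F


def implicit_softmax_dim(ndim: int) -> int:
    """Hypothetical helper: the dim softmax appears to use when none is given."""
    return 0 if ndim in (0, 1, 3) else 1


torch.manual_seed(0)
v = torch.randn(1, 2, 2, 2)  # 4-D tensor, implicit dim expected to be 1
w = v.view(1, 2, 4)          # 3-D view, implicit dim expected to be 0

results = {}
for name, t in (("v", v), ("w", w)):
    with warnings.catch_warnings():
        warnings.simplefilter("ignore")  # silence the deprecation warning
        implicit = F.softmax(t)          # dim left out on purpose
    explicit = F.softmax(t, dim=implicit_softmax_dim(t.dim()))
    # True when the implicit call matches the dim predicted by the helper
    results[name] = torch.allclose(implicit, explicit)
    print(name, t.dim(), implicit_softmax_dim(t.dim()), results[name])
```

If both entries come out as `True`, the different results for `v` and `w` are explained by the tensor rank alone, and always passing `dim` avoids the surprise.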

    >>> import torch
    >>> import torch.nn.functional as F
    >>> v = torch.randn(1, 2, 2, 2)
    >>> F.softmax(v)
    __main__:1: UserWarning: Implicit dimension choice for softmax has been deprecated. Change the call to include dim=X as an argument.
    tensor([[[[0.1006, 0.1361],
              [0.2072, 0.8304]],

             [[0.8994, 0.8639],
              [0.7928, 0.1696]]]])
    >>> F.softmax(v, dim=0)
    tensor([[[[1., 1.],
              [1., 1.]],

             [[1., 1.],
              [1., 1.]]]])
    >>> F.softmax(v, dim=1)
    tensor([[[[0.1006, 0.1361],
              [0.2072, 0.8304]],

             [[0.8994, 0.8639],
              [0.7928, 0.1696]]]])
    >>> F.softmax(v, dim=2)
    tensor([[[[0.0313, 0.2495],
              [0.9687, 0.7505]],

             [[0.0701, 0.9117],
              [0.9299, 0.0883]]]])
    >>> F.softmax(v, dim=3)
    tensor([[[[0.0993, 0.9007],
              [0.5318, 0.4682]],

             [[0.1345, 0.8655],
              [0.9551, 0.0449]]]])
    >>> w = v.view(1,2,4)
    >>> F.softmax(w)
    tensor([[[1., 1., 1., 1.],
             [1., 1., 1., 1.]]])
    >>> F.softmax(w, dim=0)
    tensor([[[1., 1., 1., 1.],
             [1., 1., 1., 1.]]])
    >>> F.softmax(w, dim=1)
    tensor([[[0.1006, 0.1361, 0.2072, 0.8304],
             [0.8994, 0.8639, 0.7928, 0.1696]]])
    >>> F.softmax(w, dim=2)
    tensor([[[0.0146, 0.1327, 0.4535, 0.3992],
             [0.0469, 0.3019, 0.6220, 0.0292]]])
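
A quick way to confirm which axis was normalised is to sum the output along that axis and check for ones. The sketch below reuses the shapes from the transcript above (the seed and the variable names `ones`, `row_sums` and `same` are my own choices) and also checks that `dim=1` on the view agrees with `dim=1` on the original tensor once it is reshaped.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
v = torch.randn(1, 2, 2, 2)
w = v.view(1, 2, 4)

# Softmax over a size-1 dimension has a single entry per slice,
# so every output value is 1.
ones = F.softmax(w, dim=0)

# dim=-1 normalises over the last axis; each row then sums to 1.
p = F.softmax(w, dim=-1)
row_sums = p.sum(dim=-1)

# dim=1 on the view matches dim=1 on the original tensor, reshaped.
same = torch.allclose(F.softmax(w, dim=1), F.softmax(v, dim=1).view(1, 2, 4))

print(ones)
print(row_sums)
print(same)
```

An output of all ones is therefore a hint that softmax ran over a dimension of size 1, which is what happens when `dim` is left out for the 3-D view.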