I was trying to use autograd.grad, but torch said that there is no such module. I didn't find it in the source either (there is only a backward module), but I have seen other people use this function. How can I fix it?

This is only available on master right now and not in the releases (because the feature is very recent).

If you want to use it right now, you will need to install from source.
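To check whether the build you currently have installed already exposes the function, something like the sketch below should work. The helper name `has_autograd_grad` is just made up for this example; it only looks for a `grad` attribute on `torch.autograd` and does not tell you anything else about the build.

```python
import importlib
import importlib.util


def has_autograd_grad():
    """Return True if an importable torch exposes torch.autograd.grad."""
    # find_spec returns None when the top-level package is not installed.
    if importlib.util.find_spec("torch") is None:
        return False
    autograd = importlib.import_module("torch.autograd")
    # Builds that predate the feature have no `grad` attribute here.
    return hasattr(autograd, "grad")


print("torch.autograd.grad available:", has_autograd_grad())
```

If this prints False after a source install, Python is probably still picking up an older installed copy of torch rather than the fresh build.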

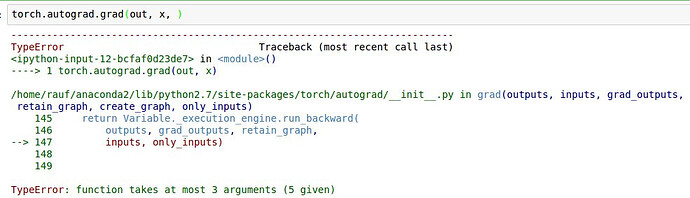

Thanks for your reply. I have installed it from source, but run_backward is now called with 5 arguments, while the function in my copy takes only 3 (def run_backward(self, variable, grad, retain_variables)). Do you know where I can get the source of the updated run_backward?

Hi albanD, is this function available in the releases now?

No, there are no new releases yet. You can check here: https://github.com/pytorch/pytorch/releases