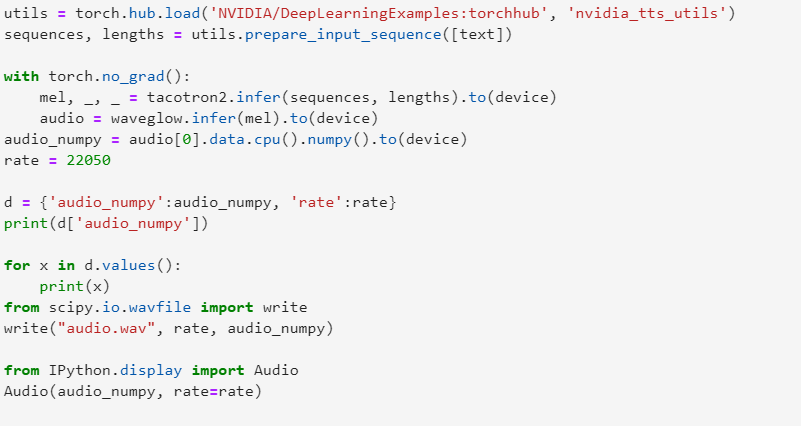

I am trying to run inference with my trained Text-to-Speech Tacotron2 model on the CPU instead of the GPU. The model is successfully mapped to the CPU, and I have changed the CUDA tensors used during inference to CPU tensors, but I am still getting this error.
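For context, a common way to load a GPU-trained checkpoint on a CPU-only machine is `torch.load(..., map_location="cpu")`. The sketch below is illustrative only: it uses a tiny hypothetical stand-in (`nn.Linear`, not Tacotron2) and an in-memory buffer instead of a real checkpoint file. It shows that the model parameters *and* every input tensor have to end up on the same device.

```python
import io

import torch
import torch.nn as nn

# Hypothetical stand-in for a trained model; Tacotron2 itself is not loaded here.
model = nn.Linear(4, 2)

# Simulate a saved checkpoint with an in-memory buffer instead of a file path.
buffer = io.BytesIO()
torch.save(model.state_dict(), buffer)
buffer.seek(0)

# map_location="cpu" remaps any CUDA tensors stored in the checkpoint onto the CPU.
state_dict = torch.load(buffer, map_location="cpu")

cpu_model = nn.Linear(4, 2)
cpu_model.load_state_dict(state_dict)
cpu_model.eval()

# Inputs must live on the same device as the model parameters.
device = next(cpu_model.parameters()).device
inputs = torch.randn(3, 4, device=device)

with torch.no_grad():
    outputs = cpu_model(inputs)

print(device, outputs.shape)
```

Mapping the weights alone is not enough: any helper that creates input tensors with `.cuda()` will still fail on a CPU-only machine.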

Please help me resolve this issue. Thanks!!

@ptrblck please acknowledge

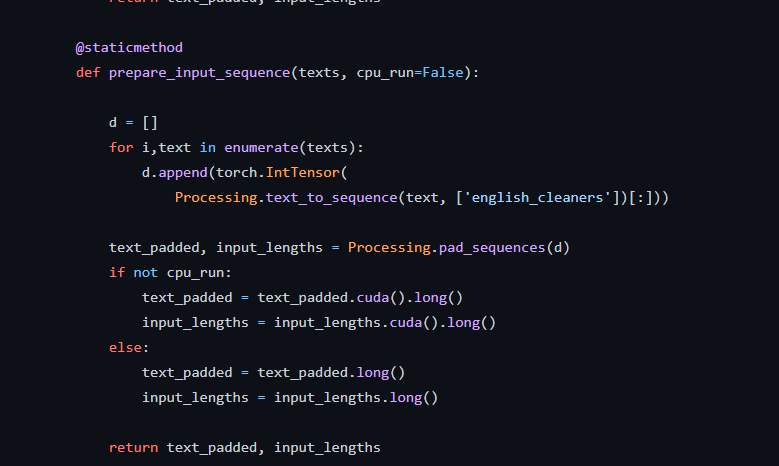

Based on the error message, my guess is that this example/model/util uses the GPU by default. You could check whether the `prepare_input_sequence` method accepts a device argument; otherwise, check the Tacotron2 source code to see where and how `cpu_run` is defined.
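One way to check from inside a notebook whether a function accepts a device-related argument, without opening its source file, is `inspect.signature` from the standard library (`inspect.getsource` can also print a function's source when it is available). The sketch below uses a hypothetical stand-in named `prepare_input_sequence_standin`; in a real script you would pass the actual `prepare_input_sequence` function object instead.

```python
import inspect


def prepare_input_sequence_standin(texts, cpu_run=False):
    """Hypothetical stand-in; only its signature matters for this example."""
    return texts, cpu_run


def device_related_params(func):
    """Return the names and defaults of parameters that look device-related."""
    keywords = ("cpu", "cuda", "device", "gpu")
    found = {}
    for name, param in inspect.signature(func).parameters.items():
        if any(k in name.lower() for k in keywords):
            found[name] = param.default
    return found


params = device_related_params(prepare_input_sequence_standin)
print(params)  # {'cpu_run': False}
```

A default of `False` for a flag like `cpu_run` suggests the function takes the GPU path unless told otherwise.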

Here is a screenshot of the file where `cpu_run` is defined. I found it in the source code on GitHub, but I cannot access that file in my SageMaker notebook, so I can only make changes to the code provided above. Please suggest whether there is any way to get around this.

`cpu_run` is an argument of `prepare_input_sequence`, so set it to `True` in your main script and try to rerun your code.
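To illustrate what such a flag typically controls, here is a hypothetical re-implementation, not the actual Tacotron2 code: `encode_text` is a toy character encoder standing in for the real text processing, and padding uses `torch.nn.utils.rnn.pad_sequence`. The only point is that the `.cuda()` calls are skipped when `cpu_run=True`. In your own script the fix is just to pass `cpu_run=True` in the existing `prepare_input_sequence` call.

```python
import torch
from torch.nn.utils.rnn import pad_sequence


def encode_text(text):
    # Hypothetical toy encoder: one integer ID per character.
    return torch.tensor([ord(c) for c in text], dtype=torch.long)


def prepare_input_sequence_sketch(texts, cpu_run=False):
    """Pad encoded texts and place them on CPU or GPU depending on cpu_run."""
    encoded = [encode_text(t) for t in texts]
    lengths = torch.tensor([len(e) for e in encoded], dtype=torch.long)
    padded = pad_sequence(encoded, batch_first=True)  # pads shorter rows with 0
    if not cpu_run:
        # On a CPU-only machine, .cuda() raises an error here.
        padded, lengths = padded.cuda(), lengths.cuda()
    return padded, lengths


sequences, lengths = prepare_input_sequence_sketch(["hello", "hi"], cpu_run=True)
print(sequences.device, sequences.shape, lengths.tolist())
```

The model itself must also be on the CPU; otherwise the same device-mismatch problem simply moves to the forward pass.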