Hi, I’m trying to run a project inside a conda environment. My machine has an RTX 3070 Ti installed, and the CUDA initialization call (torch.cuda.set_device) fails when the program starts.

Error:

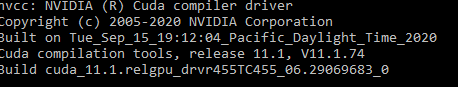

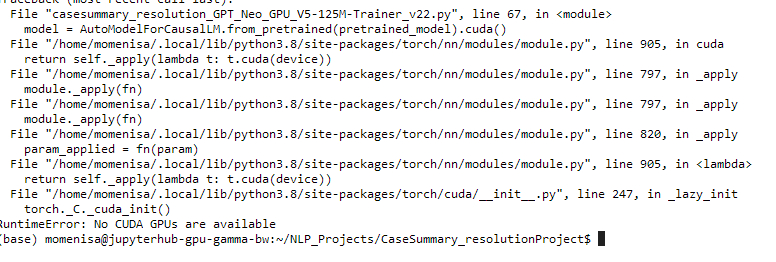

  File "sTrain.py", line 37, in <module>
    torch.cuda.set_device(gpuid)
  File "/home/user/miniconda3/envs/deeplearningenv/lib/python3.6/site-packages/torch/cuda/__init__.py", line 263, in set_device
    torch._C._cuda_setDevice(device)
  File "/home/user/miniconda3/envs/deeplearningenv/lib/python3.6/site-packages/torch/cuda/__init__.py", line 172, in _lazy_init
    torch._C._cuda_init()
RuntimeError: No CUDA GPUs are available

Any guidance would be very helpful. I tried uninstalling cudatoolkit, pytorch, and torchvision and reinstalling them with conda install pytorch torchvision cudatoolkit=10.1, but I get the same error. The GPU also appears in the Device Manager.

I’m not sure whether this helps in finding a solution, but torch.cuda.is_available() returns False.
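
In case it helps narrow things down, here is a minimal sketch of a check that can be run inside the same conda environment. It only gathers what PyTorch itself reports: the installed version, the CUDA version the build was compiled against, and how many devices it sees. The helper name `cuda_report` is made up for illustration; the `torch` attributes it reads are standard ones.

```python
import os


def cuda_report():
    """Collect basic facts about the PyTorch/CUDA setup into a dict.

    `cuda_report` is a hypothetical helper name used only for this post.
    """
    # If this variable is set to an empty or invalid value, it can hide GPUs.
    report = {"CUDA_VISIBLE_DEVICES": os.environ.get("CUDA_VISIBLE_DEVICES")}

    try:
        import torch
    except ImportError:
        # PyTorch is not importable in this environment at all.
        report["torch_installed"] = False
        return report

    report["torch_installed"] = True
    report["torch_version"] = torch.__version__
    # None here means a CPU-only build of PyTorch was installed.
    report["built_with_cuda"] = torch.version.cuda
    report["cuda_available"] = torch.cuda.is_available()
    report["device_count"] = torch.cuda.device_count()
    return report


if __name__ == "__main__":
    for key, value in cuda_report().items():
        print(f"{key}: {value}")
```

The `built_with_cuda` value can then be compared against the `cudatoolkit` version requested at install time, and against what the installed GPU driver supports.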

Thank you in advance.