Thanks for the update!

Your method works for me and calculates the gradients for the outputs which are not clipped as expected:

model = nn.Linear(10, 10)

data = torch.randn(1, 10)

target = torch.rand(1, 10)

output = model(data)

print(output)

# tensor([[ 0.9157, -0.3162, -0.6357, 0.5272, -0.9480, 1.1473, 0.3734, 0.1775,

# 0.4148, -0.9100]], grad_fn=<AddmmBackward0>)

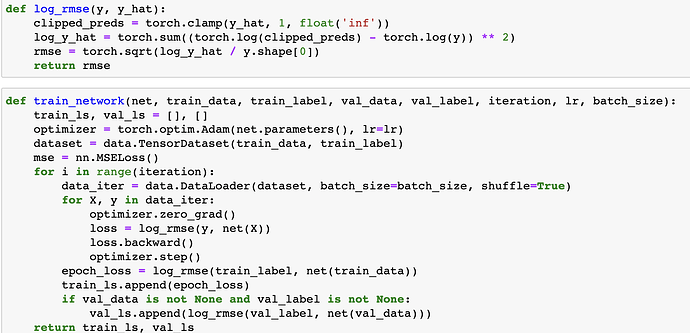

loss = log_rmse(target, output)

print(loss)

# tensor(2.3577, grad_fn=<SqrtBackward0>)

loss.backward()

print(model.weight.grad)

# tensor([[-0.0000, 0.0000, -0.0000, 0.0000, 0.0000, 0.0000, -0.0000, 0.0000,

# -0.0000, 0.0000],

# [-0.0000, 0.0000, -0.0000, 0.0000, 0.0000, 0.0000, -0.0000, 0.0000,

# -0.0000, 0.0000],

# [-0.0000, 0.0000, -0.0000, 0.0000, 0.0000, 0.0000, -0.0000, 0.0000,

# -0.0000, 0.0000],

# [-0.0000, 0.0000, -0.0000, 0.0000, 0.0000, 0.0000, -0.0000, 0.0000,

# -0.0000, 0.0000],

# [-0.0000, 0.0000, -0.0000, 0.0000, 0.0000, 0.0000, -0.0000, 0.0000,

# -0.0000, 0.0000],

# [-0.2285, 0.1268, -0.1046, 0.0679, 0.2792, 0.0948, -0.3489, 0.1557,

# -0.0147, 0.0082],

# [-0.0000, 0.0000, -0.0000, 0.0000, 0.0000, 0.0000, -0.0000, 0.0000,

# -0.0000, 0.0000],

# [-0.0000, 0.0000, -0.0000, 0.0000, 0.0000, 0.0000, -0.0000, 0.0000,

# -0.0000, 0.0000],

# [-0.0000, 0.0000, -0.0000, 0.0000, 0.0000, 0.0000, -0.0000, 0.0000,

# -0.0000, 0.0000],

# [-0.0000, 0.0000, -0.0000, 0.0000, 0.0000, 0.0000, -0.0000, 0.0000,

# -0.0000, 0.0000]])