So, the problem is “simple” a model for semantic segmentation I can readily train on my personal windows PC, albeit slow, does not converge on an obviously much more powerful DGX(GTX 970 vs v100) station running linux. By this I mean, while within one epoch on my PC I get an IoU of about 0.4 on the dgx the IoU is about 0.06 and contiues to stay low in further epochs. This is not only an issue in my iou calculation as the loss (standard CE), which is what i’m actually optimizing, gets stuck aswell on the dgx.

Both are running within a clean anaconda env installed with the exact same commands, the only (major) difference is the cudatoolkit versions 10.1 for the PC 10.0 for the dgx, and correspondingly pytorch 1.5 for the PC and 1.4 for the dgx. However this should not matter as I also tried it in environments with pytroch=1.3 and cudatoolkit=10.0. Furthermore, the issue persists when training on cpu instead of gpu, so neither the old drivers on the dgx station nor the cuda version should matter.

The random seeds are fixed aswell. What is more even when using no custom code, the problem persists. Dataloaders seem to behave properly on both platforms aswell

TL;DR exact same code doesn’t converge on different platform.

For reference the inner training loop which is pretty much the only “custom” code I run when using standard CE and torchvision.models.segmentation.fcn_resnet50(pretrained=False, progress=True, num_classes=34):

for i, data in enumerate(dataloader_train):

optim.zero_grad()

input = data['input'].to(device)

target = data['target'].to(device)

output = model(input)['out']

loss = loss_fn(output, target.long()) #unmodified CE

loss.backward()

losses.append(loss.item())

_, pred = output.detach().max(1)

optim.step()

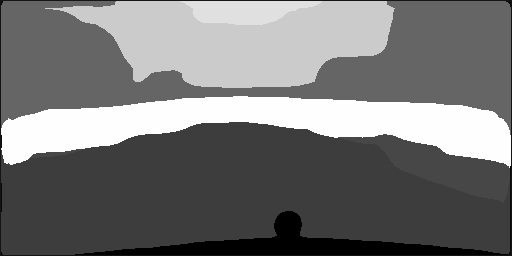

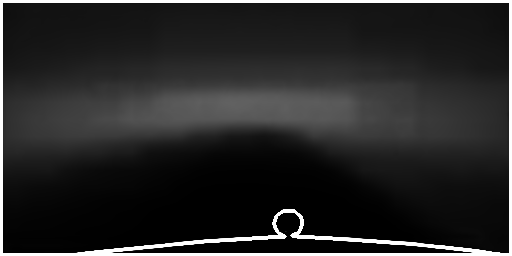

EDIT: The only thing properly learned is the ever present hood of the car the pictures are taken from. Which means something is indeed being leared. Lower lr and different optim don’t affect the problem. When considering the output i’d hazard a guess and say it’s for some reason sort of averaging over all the data, which of course would make sense if some of the hyperparameters or the model itself were badly chosen, but since this exact setup works on my PC that is verifiably not the case. This image should be the actual segmentation