Hi all!

I’m wondering whether it is possible to perform a convolution during the training of a neural network when the convolution kernel is detached from the gradient tree.

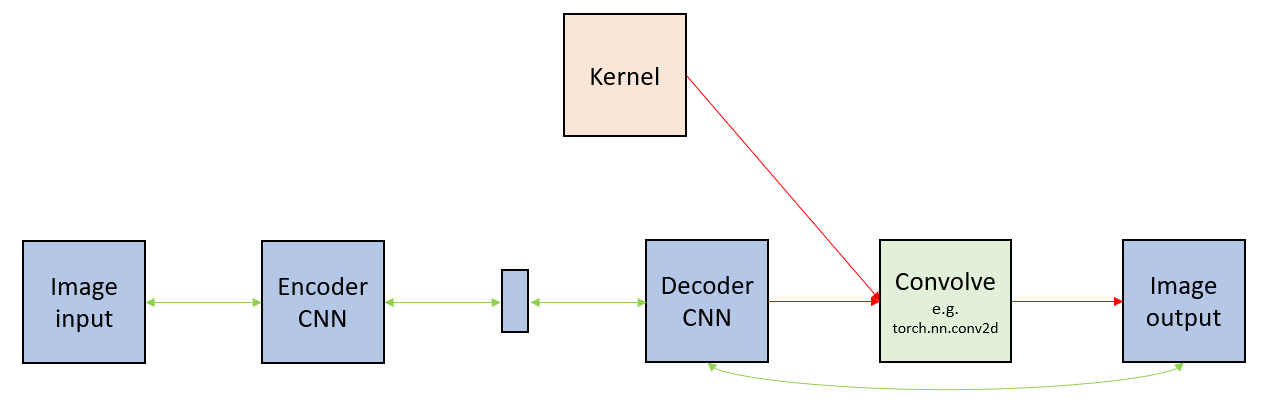

An example would be the autoencoder shown below:

In the example above, a convolution of the reconstructed image (exiting the decoder CNN subnet) is performed using a 2D kernel which is detached from the gradient tree.

In this way, the encodings (exiting the encoder CNN subnet) would be learned parameters/embeddings necessary to reconstruct an unconvolved image prior to the untracked convolution.
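To make the setup concrete, here is a minimal sketch of what I have in mind. Everything in it is an illustrative assumption rather than my real model: a single `Conv2d` layer stands in for the decoder, random tensors stand in for the encodings and the target image, and the fixed kernel is a hypothetical 3x3 box blur applied through `torch.nn.functional.conv2d`.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)

# Stand-in for the decoder CNN subnet (hypothetical; one layer with learnable weights).
decoder = torch.nn.Conv2d(1, 1, kernel_size=3, padding=1)

# Fixed 3x3 box-blur kernel, shaped (out_channels, in_channels, kH, kW).
# A plain tensor like this has requires_grad=False, so autograd does not track it.
kernel = torch.full((1, 1, 3, 3), 1.0 / 9.0)

encoding = torch.randn(4, 1, 8, 8)  # stand-in for the encoder output
target = torch.randn(4, 1, 8, 8)    # stand-in for the observed (convolved) image

recon = decoder(encoding)                     # unconvolved reconstruction
blurred = F.conv2d(recon, kernel, padding=1)  # convolution with the untracked kernel
loss = F.mse_loss(blurred, target)
loss.backward()

# Inspect where gradients ended up after the backward pass.
print(decoder.weight.grad is not None)
print(kernel.grad)
```

If `decoder.weight.grad` is populated while `kernel.grad` stays `None`, the fixed kernel was treated as a constant and the gradient still reached the layers upstream of the convolution.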

Setting aside use cases and why you might want to do this, my question is: does the API allow a torch.nn.ConvNd operation (Conv1d/Conv2d/Conv3d) that uses a kernel detached from the gradient tree, without breaking the gradient tree for everything upstream of it?

I know this is possible for simple operations such as multiplying, summing, subtracting, or dividing parameters/weights by untracked tensors, but I wonder whether it still holds for more complex operations built into the torch API, such as convolutions.

Many thanks in advance for all your help!