I recently discovered some nondeterministic behaviour when using a TorchScript (@torch.jit.script) function that is supposed to make torch.sigmoid and the surrounding pointwise ops more memory efficient by fusing them, as described in the Performance Tuning Guide (PyTorch Tutorials 2.2.0+cu121 documentation).

When executed on CPU, both torch.tanh and torch.sigmoid return different results for the same input on the first and second invocation. From the second invocation on, every call returns the same result. The mean absolute error (MAE) between the first and second result is around 1e-11, but in a simple convolutional network with only 4 layers it quickly grows to 1e-8.
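To reproduce the first-vs-second invocation comparison in isolation, a minimal sketch along these lines could be used. It assumes float32 inputs of shape (batch, 2 * hidden, time) and a hypothetical channel count of 64; `compare_invocations` is a hypothetical helper, not part of the original code. It reports the MAE of each call relative to the first call on identical inputs, so a nonzero value for call 1, with later calls showing the same value as call 1, would match the behaviour described above.

```python
import torch


# The function from the post, with the missing `return acts` added.
@torch.jit.script
def fused_add_tanh_sigmoid_multiply(input_a, input_b, n_channels, debug_name: str = "None"):
    n_channels_int = n_channels[0]
    in_act = input_a + input_b
    t_act = torch.tanh(in_act[:, :n_channels_int, :])
    s_act = torch.sigmoid(in_act[:, n_channels_int:, :])
    acts = t_act * s_act
    return acts


def compare_invocations(num_calls: int = 4, seed: int = 0):
    """Call the scripted function repeatedly on identical CPU inputs and return
    the mean absolute error of each call relative to the first call."""
    torch.manual_seed(seed)
    hidden = 64  # hypothetical channel count, chosen only for illustration
    # Assumed layout (batch, 2 * hidden, time), as in a WaveNet-style WN block.
    a = torch.randn(2, 2 * hidden, 100)
    b = torch.randn(2, 2 * hidden, 100)
    n_channels = torch.tensor([hidden], dtype=torch.int32)

    outputs = [fused_add_tanh_sigmoid_multiply(a, b, n_channels) for _ in range(num_calls)]
    return [(out - outputs[0]).abs().mean().item() for out in outputs]


if __name__ == "__main__":
    for i, mae in enumerate(compare_invocations()):
        print(f"call {i}: MAE vs. first call = {mae:.3e}")
```

Run it in a fresh process: the scripted function keeps its JIT state, so a second `compare_invocations()` call in the same process would start from the already-warmed-up function.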

This does not happen on GPU.

The fact that the result stays the same from the second invocation on makes me think it’s some sort of caching issue, but then I wonder why the first result isn’t cached as well.
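One way to probe the caching idea: TorchScript's default (profiling) executor runs the first call(s) through an instrumented, unoptimized graph and only then builds an optimized graph, which may contain a fused kernel. That would be consistent with call 1 differing and later calls agreeing, but nothing in the post confirms it. The sketch below uses a hypothetical stand-in function `tanh_sigmoid` and a hypothetical helper `fusion_flags_per_call`, and checks after each call whether the graph returned by `torch.jit.last_executed_optimized_graph()` contains a fusion-group node. Whether fusion happens on CPU at all may depend on the PyTorch version, the fuser settings and the number of intra-op threads.

```python
import torch


@torch.jit.script
def tanh_sigmoid(x):
    # Hypothetical stand-in for the gate in the post: two pointwise ops that
    # a TorchScript fuser may merge into a single kernel.
    return torch.tanh(x) * torch.sigmoid(x)


def fusion_flags_per_call(num_calls: int = 3):
    """For each call, report whether the graph the JIT just executed contains a
    fusion group (NNC uses prim::TensorExprGroup, the legacy fuser prim::FusionGroup)."""
    torch.manual_seed(0)
    x = torch.randn(4, 128, 50)
    flags = []
    for _ in range(num_calls):
        tanh_sigmoid(x)
        graph_text = str(torch.jit.last_executed_optimized_graph())
        flags.append("TensorExprGroup" in graph_text or "FusionGroup" in graph_text)
    return flags


if __name__ == "__main__":
    print("intra-op threads:", torch.get_num_threads())
    for i, fused in enumerate(fusion_flags_per_call()):
        print(f"call {i}: fusion group in executed graph = {fused}")
    # For the full graph text: print(torch.jit.last_executed_optimized_graph())
```

If call 0 shows no fusion group and later calls do, the numerical difference most likely comes from the fused kernel rather than from caching.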

Has anyone experienced something similar? Is there a way to fix this? Would setting torch.use_deterministic_algorithms affect it? I haven’t tried that yet…
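To check the torch.use_deterministic_algorithms question directly, and to separate it from JIT optimizations, a sketch like the following compares scripted calls against eager PyTorch under two settings: with `torch.jit.optimized_execution(False)`, which switches off the graph executor's optimizations, and with the deterministic-algorithms flag enabled but default JIT settings. `tanh_sigmoid`, `diffs_vs_eager` and `run_experiments` are hypothetical names. Since the deterministic flag targets operators that have nondeterministic kernels, it is not obvious that it would change anything here; this is untested. Run it in a fresh process, because once a function has an optimized plan it may be reused even after optimizations are switched off.

```python
import torch


@torch.jit.script
def tanh_sigmoid(x):
    # Hypothetical minimal stand-in for the fused gate in the post.
    return torch.tanh(x) * torch.sigmoid(x)


def diffs_vs_eager(x: torch.Tensor, num_calls: int = 3):
    """Max absolute difference between each scripted call and plain eager PyTorch."""
    eager = torch.tanh(x) * torch.sigmoid(x)
    return [(tanh_sigmoid(x) - eager).abs().max().item() for _ in range(num_calls)]


def run_experiments():
    torch.manual_seed(0)
    x = torch.randn(4, 128, 50)
    results = {}

    # A) JIT graph optimizations (including fusion) switched off. I would expect
    #    every call to run the same unfused ATen kernels as eager mode.
    with torch.jit.optimized_execution(False):
        results["no_jit_opt"] = diffs_vs_eager(x)

    # B) Default JIT settings, with the deterministic-algorithms flag enabled.
    torch.use_deterministic_algorithms(True)
    try:
        results["deterministic_flag"] = diffs_vs_eager(x)
    finally:
        torch.use_deterministic_algorithms(False)  # restore the global default
    return results


if __name__ == "__main__":
    for name, diffs in run_experiments().items():
        print(name, [f"{d:.3e}" for d in diffs])
```

If setting A shows zero difference on every call while setting B still shows call 0 differing from later calls, that would point at the JIT's optimized graph rather than at any nondeterministic kernel.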

Here is the jit script I invoke:

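```python
import torch


@torch.jit.script
def fused_add_tanh_sigmoid_multiply(input_a, input_b, n_channels, debug_name: str = "None"):
    # n_channels is a Tensor (unannotated TorchScript args default to Tensor);
    # its first element is the channel index where the input is split.
    n_channels_int = n_channels[0]
    in_act = input_a + input_b
    # First half of the channels goes through tanh, second half through sigmoid.
    t_act = torch.tanh(in_act[:, :n_channels_int, :])
    s_act = torch.sigmoid(in_act[:, n_channels_int:, :])
    acts = t_act * s_act
    return acts
```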

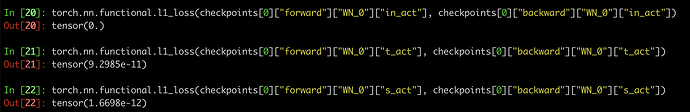

And this is the MAE between the first and second invocation for the same input (in_act) in the same layer (0) of the WN network (the labels "forward" and "backward" have no meaning here, except that "backward" holds the second invocation):