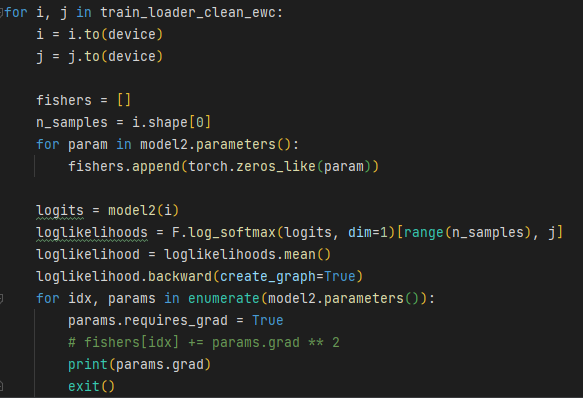

I am trying to calculate gradients with respect to the model’s parameters, but the gradients are `None`. What should I do?

Thanks

Hi @bsg_ssg

`None` is returned because the `.grad` attribute isn’t populated during the backward call when `requires_grad` is `False` for those tensors (the model’s parameters, in your case).
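A minimal sketch of this behaviour, assuming a toy `nn.Linear` model in place of your actual one: only the weight is frozen, so the bias still receives a gradient and the loss can be backpropagated, while the frozen weight's `.grad` stays `None`.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
model = nn.Linear(3, 1)  # toy stand-in for the real model

# Freeze only the weight; the bias keeps requires_grad=True
model.weight.requires_grad = False

x = torch.randn(4, 3)
loss = model(x).pow(2).mean()
loss.backward()

print(model.weight.grad)        # None: autograd did not track this tensor
print(model.bias.grad is None)  # False: the bias was tracked
```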

Going by your code, the `for` loop where you set `params.requires_grad = True` should be placed before any loss calculation or backward pass is done.
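The ordering matters because autograd records the graph during the forward pass. A sketch under the same toy-model assumption (the bias stays trainable so that `backward()` does not raise): enabling `requires_grad` after the loss is computed leaves the weight's gradient `None`, while enabling it before the forward pass works.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
model = nn.Linear(3, 1)              # toy stand-in for the real model
model.weight.requires_grad = False   # start with the weight frozen
x = torch.randn(4, 3)

# Wrong order: forward pass first, then enable requires_grad
loss = model(x).pow(2).mean()
model.weight.requires_grad = True    # too late: the graph is already recorded
loss.backward()
late_grad = model.weight.grad        # still None

# Right order: requires_grad is already True when the forward pass runs
model.zero_grad()
loss = model(x).pow(2).mean()
loss.backward()
early_grad = model.weight.grad       # now populated
```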

It’d be best to place the whole `for` loop that sets `params.requires_grad = True` outside the outermost `for` loop, which is:

`for i, j in train_loader_clean_ewc:`
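The suggested structure might look roughly like this sketch. The model, the loss, and the contents of `train_loader_clean_ewc` are all hypothetical stand-ins (a list of random `(input, target)` batches), and the initial freeze only simulates whatever earlier step in the real script disabled gradients.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
model = nn.Sequential(nn.Linear(3, 4), nn.ReLU(), nn.Linear(4, 1))  # hypothetical model
criterion = nn.MSELoss()

# Hypothetical stand-in for the real loader: a list of (input, target) batches
train_loader_clean_ewc = [(torch.randn(8, 3), torch.randn(8, 1)) for _ in range(3)]

# Simulate an earlier step that froze the parameters
for params in model.parameters():
    params.requires_grad = False

# Enable gradients for every parameter once, before the outermost loop
for params in model.parameters():
    params.requires_grad = True

grads_per_batch = []
for i, j in train_loader_clean_ewc:
    model.zero_grad()                  # clear gradients from the previous batch
    loss = criterion(model(i), j)
    loss.backward()
    # Copy each parameter's gradient; .grad is populated for all of them
    grads_per_batch.append([p.grad.clone() for p in model.parameters()])
```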

Let me know if you still face any errors after doing this.

Hi @srishti-git1110

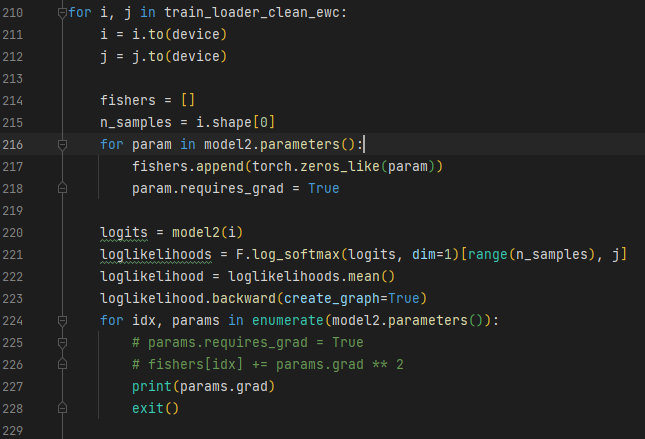

If I place `params.requires_grad = True` before the outermost loop, I still get `None`. However, if I place it inside the second loop, where `params` is defined, it works. Is this the right way? Please let me know if I am doing anything wrong.

Thanks

Your current code looks fine to me.

This is actually what I meant in my previous reply: I asked you to move the entire loop containing the `requires_grad = True` statement, not just that one statement.

Now, since you’ve placed it before any loss-related calculations, it should be fine.

We need to set the `requires_grad` attribute to `True` for all the parameters, which is why a `for` loop over them is needed.
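As a possible alternative (not something used in this thread), `nn.Module.requires_grad_` sets the flag on every parameter of a module in one call. A small sketch, assuming a toy two-layer model, comparing it with the explicit loop:

```python
import torch.nn as nn

model = nn.Sequential(nn.Linear(3, 4), nn.Linear(4, 1))  # toy model
model.requires_grad_(False)  # freeze everything first

# Explicit loop over parameters, as discussed in the thread
for params in model.parameters():
    params.requires_grad = True
loop_flags = [p.requires_grad for p in model.parameters()]

# Built-in equivalent: Module.requires_grad_ sets the flag on every parameter
model.requires_grad_(False)
model.requires_grad_(True)
builtin_flags = [p.requires_grad for p in model.parameters()]
```

Both approaches end with every parameter tracked; the one-liner simply avoids writing the loop by hand.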

[As a side note, it’d be best to place “the whole loop” that sets `requires_grad = True` for all the params outside the outer `for` loop.]

Hi @srishti-git1110 ,

Sorry for misreading. It works fine now. Thanks for your help.