I am new to PyTorch and making a transition from TF/keras to PyTorch so I apologize if my question is very basic.

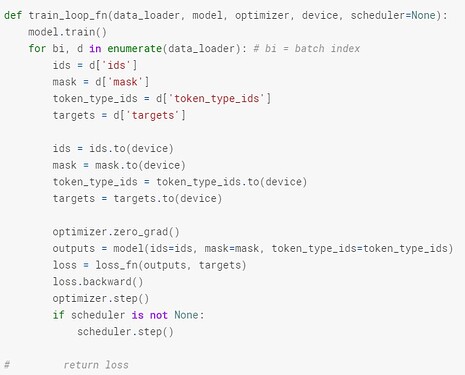

I have started with some tutorials and in one of them I have the following training loop function where it iterates over the dataset, trains the model for 1 epoch computes the loss and updates the weights.

So far so good. The problem I have is when I try to return the loss value from this function and print its value after each epoch (see below) the model seems to be not working anymore.

for epoch in range(EPOCHS):

train_loop_fn(train_data_loader, model, optimizer, device, scheduler)

# loss = train_loop_fn(train_data_loader, model, optimizer, device, scheduler)

outputs, targets = eval_loop_fn(valid_data_loader, model, device)

spear = []

for jj in range(targets.shape[1]):

p1 = list(targets[:, jj])

p2 = list(outputs[:, jj])

coef, _ = np.nan_to_num(stats.spearmanr(p1, p2))

spear.append(coef)

spear_mean = np.mean(spear)

#print(f'spear={spear_mean}\tLoss:{loss.item()}')

print(f'spear={spear_mean}')

When I don’t return the loss function, I can clearly see that the model is training because the spear_mean keeps increasing epoch by epoch but after I uncomment the lines in images and try to print loss.item() the code no longer follows a general pattern and I get random values of spear_mean and loss.item() in every epoch. I don’t really see what is going wrong here and why would returning the loss value mess things up here. Any help is greatly appreciated. Thank you in advance.