I am trying to normalize my targets (here, landmark coordinates), but to_tensor does not scale the values to between 0 and 1. How should that be enforced?

Also, I am looking for only one value for the mean and one value for the std, right? Each image has 4 landmarks, and each landmark has x and y coordinates.

to_tensor = transforms.ToTensor()

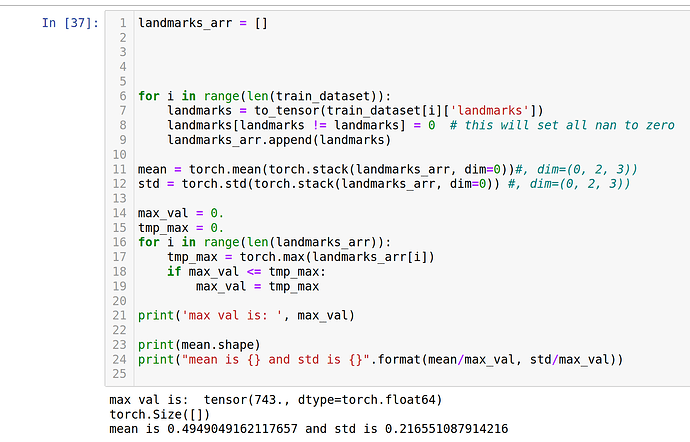

landmarks_arr = []
for i in range(len(train_dataset)):
    landmarks = to_tensor(train_dataset[i]['landmarks'])
    landmarks[landmarks != landmarks] = 0 # this will set all nan to zero
    landmarks_arr.append(landmarks)

mean = torch.mean(torch.stack(landmarks_arr, dim=0))#, dim=(0, 2, 3))
std = torch.std(torch.stack(landmarks_arr, dim=0)) #, dim=(0, 2, 3))
print(mean.shape)
print("mean is {} and std is {}".format(mean, std))

The result is:

torch.Size([])
mean is 367.7143527453419 and std is 160.8974583202625

This is what the landmarks look like:

landmarks_arr[0]

tensor([[[502.2869, 240.4949],
         [688.0000, 293.0000],
         [346.0000, 317.0000],
         [560.8283, 322.6830]]], dtype=torch.float64)
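For context, ToTensor only rescales to [0, 1] when it is given a PIL image or a uint8 ndarray; a float64 array like the landmarks is converted to a tensor with its values unchanged (plus a leading channel dimension, hence the shape (1, 4, 2) above). Below is a rough sketch of two ways the landmarks might be normalized instead. The sample values for the second and third images, the name image_size, and the 800x600 image size are all made up for illustration. It also skips NaN entries rather than setting them to zero, since zeros would pull the mean down.

```python
import torch

# Hypothetical stand-in for the real dataset: 3 images, 4 landmarks each, (x, y) in pixels.
nan = float("nan")
landmarks = torch.tensor([
    [[502.2869, 240.4949], [688.0, 293.0], [346.0, 317.0], [560.8283, 322.6830]],
    [[480.0, 250.0], [700.0, 300.0], [nan, nan], [550.0, 330.0]],
    [[510.0, 230.0], [690.0, 280.0], [350.0, 310.0], [565.0, 325.0]],
], dtype=torch.float64)  # shape (N, 4, 2)

valid = ~torch.isnan(landmarks)  # True where a coordinate is present
zeros = torch.zeros_like(landmarks)

# Option A: standardize with one mean/std for x and one for y,
# computed over the valid entries only.
count = valid.sum(dim=(0, 1))                                   # shape (2,)
mean = torch.where(valid, landmarks, zeros).sum(dim=(0, 1)) / count
sq_dev = torch.where(valid, (landmarks - mean) ** 2, zeros)
std = torch.sqrt(sq_dev.sum(dim=(0, 1)) / (count - 1))          # unbiased, like torch.std
standardized = (landmarks - mean) / std                         # NaN entries stay NaN

# Option B: scale into [0, 1] by dividing by the image size.
# (width, height) is an assumption here; use the real image dimensions.
image_size = torch.tensor([800.0, 600.0], dtype=torch.float64)
scaled = landmarks / image_size

print(mean, std)
```

Option A gives zero-mean, unit-variance targets per coordinate but does not bound them to [0, 1]; Option B bounds them to [0, 1] as long as every landmark lies inside the image.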

On a side note, is this line even correct?

mean = torch.mean(torch.stack(landmarks_arr, dim=0))#, dim=(0, 2, 3))
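As a small check on that line, here is a sketch of how the dim argument changes the result, using random data in the same stacked shape (N, 1, 4, 2). The name stacked and N = 5 are placeholders, not values from the real dataset.

```python
import torch

# Random stand-in for torch.stack(landmarks_arr, dim=0): shape (N, 1, 4, 2).
stacked = torch.rand(5, 1, 4, 2, dtype=torch.float64)

all_mean = torch.mean(stacked)                    # no dim: reduces over every element
per_channel = torch.mean(stacked, dim=(0, 2, 3))  # keeps the size-1 channel dimension
per_coord = torch.mean(stacked, dim=(0, 1, 2))    # keeps the last dimension (x, y)

print(all_mean.shape)     # torch.Size([])
print(per_channel.shape)  # torch.Size([1])
print(per_coord.shape)    # torch.Size([2])
```

So the line as written does run and returns a single scalar over all x and y values together, which is why mean.shape prints torch.Size([]). If separate statistics for x and y are wanted, reducing over dim=(0, 1, 2) seems to be the closer fit.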