```python
import torch.nn as nn

class DebertaModel(nn.Module):
    def __init__(self):
        super().__init__()
        # The rest of __init__ (which defines self.deberta, self.dropout
        # and self.classified) was not included in the post.

    def forward(self, ids, mask, token_type_ids):
        _, pooled_output = self.deberta(
            input_ids=ids,
            attention_mask=mask,
            token_type_ids=token_type_ids)

        pooled_output = self.dropout(pooled_output)
        outputs = self.classified(pooled_output)
        return outputs
```

```python
import torch
import torch.nn as nn
from tqdm import tqdm

def loss_fn(outputs, target):
    # The body was not included in the post; cross-entropy is assumed here
    # because the targets are cast to torch.long below.
    return nn.CrossEntropyLoss()(outputs, target)

def train_fx(data_loader, model, optimizer, scheduler, device):
    train_loss = 0

    for bi, d in tqdm(enumerate(data_loader)):
        ids = d['ids']
        mask = d['mask']
        token_type_ids = d['token_type_ids']
        target = d['targets']

        # putting them into the device
        ids = ids.to(device, dtype=torch.long)
        mask = mask.to(device, dtype=torch.long)
        token_type_ids = token_type_ids.to(device, dtype=torch.long)
        target = target.to(device, dtype=torch.long)

        optimizer.zero_grad()
        outputs = model(
            ids=ids,
            mask=mask,
            token_type_ids=token_type_ids
        )

        loss = loss_fn(outputs, target)
        loss.backward()
        optimizer.step()
        scheduler.step()

        train_loss += loss.item()

    # Average over the number of batches
    train_loss = train_loss / len(data_loader)
    return train_loss
```
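`train_fx` adds up `loss.item()` for every batch and divides by `len(data_loader)`, which is the number of batches, so the returned value is the mean of the per-batch mean losses (assuming `loss_fn` averages over the batch, as `nn.CrossEntropyLoss` does by default). That equals the per-sample mean only when every batch has the same size. A small stdlib sketch of both calculations; the function names and numbers are hypothetical:

```python
from typing import Sequence

def mean_of_batch_means(batch_losses: Sequence[float]) -> float:
    # What train_fx computes: sum of per-batch losses / number of batches.
    return sum(batch_losses) / len(batch_losses)

def sample_weighted_mean(batch_losses: Sequence[float],
                         batch_sizes: Sequence[int]) -> float:
    # Weights each batch's mean loss by how many samples it contained.
    total = sum(loss * size for loss, size in zip(batch_losses, batch_sizes))
    return total / sum(batch_sizes)

losses = [1.0, 2.0, 4.0]
sizes = [8, 8, 4]  # the last batch is smaller
print(mean_of_batch_means(losses))          # 2.3333333333333335
print(sample_weighted_mean(losses, sizes))  # 2.0
```

The two only diverge when the final batch is short, for example when the `DataLoader` keeps a partial last batch.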

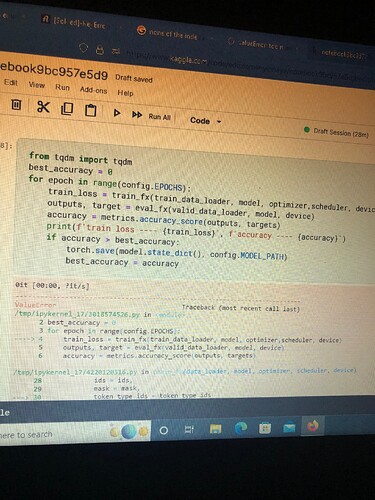

Is this issue coming from my model?

It’s unclear which line of code causes the issue, but based on your screenshots it should be somewhere in `train_fx`.
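A Python traceback lists frames from outermost to innermost ("most recent call last"), and the innermost frame is the one that actually raised. To pinpoint the exact line rather than only the function, the standard-library `traceback` module can report that frame's function name, file and line number. A minimal sketch; `forward_like` and `run_one_step` are hypothetical stand-ins for the model's `forward` and a call into `train_fx`:

```python
import traceback

def forward_like():
    # Stand-in for the failing line: unpacking one value into two names.
    _, pooled = ["only one value"]
    return pooled

def run_one_step():
    return forward_like()

innermost = None
try:
    run_one_step()
except ValueError as err:
    # extract_tb returns frames outermost-first, so [-1] is where it raised.
    innermost = traceback.extract_tb(err.__traceback__)[-1]
    print(f"{innermost.filename}:{innermost.lineno} in {innermost.name}")
    print(f"    {innermost.line}")
```

In a notebook, calling `traceback.print_exc()` inside the `except` block prints the full traceback, including these line numbers, as text that can be pasted instead of a screenshot.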

Rexedoziem

October 5, 2022, 10:27am


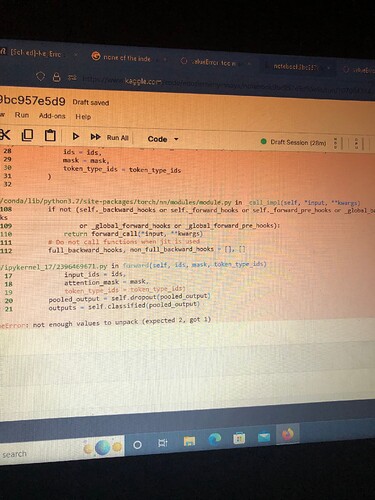

```
ValueError Traceback (most recent call last)
/tmp/ipykernel_17/4220120316.py in train_fx(data_loader, model, optimizer, scheduler, device)

/opt/conda/lib/python3.7/site-packages/torch/nn/modules/module.py in _call_impl(self, *input, **kwargs)

/tmp/ipykernel_17/2396469671.py in forward(self, ids, mask, token_type_ids)

ValueError: not enough values to unpack (expected 2, got 1)
```
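A likely reason the unpacking in `forward` fails (an explanation under stated assumptions, not something confirmed in the thread): in recent versions of Hugging Face `transformers`, models return a `ModelOutput`, which subclasses `OrderedDict` and keeps only the fields that are not `None`. The DeBERTa base model has no pooler, so its output carries a single `last_hidden_state` entry. Unpacking a mapping iterates over its keys, so `_, pooled_output = ...` sees one value instead of two. The stdlib sketch below reproduces the exact message with a plain `OrderedDict` standing in for the model output; `fake_output`, `unpack_like_the_post` and `bert_like_output` are hypothetical names:

```python
from collections import OrderedDict

# Stand-in for a transformers ModelOutput (assumption: it behaves like an
# OrderedDict holding only non-None fields). A DeBERTa base model has no
# pooler, so only last_hidden_state is present.
fake_output = OrderedDict(last_hidden_state="hidden states tensor")

def unpack_like_the_post(output):
    # Same pattern as `_, pooled_output = self.deberta(...)` in forward().
    # Unpacking iterates the mapping, which yields its keys: here just one.
    _, pooled_output = output
    return pooled_output

message = None
try:
    unpack_like_the_post(fake_output)
except ValueError as err:
    message = str(err)
print(message)  # not enough values to unpack (expected 2, got 1)

# A BERT-style output that also has a pooler has two keys, so the same line
# does not raise, but it binds the key *name*, not the tensor.
bert_like_output = OrderedDict(last_hidden_state="hidden states tensor",
                               pooler_output="pooled tensor")
_, second = bert_like_output
print(second)  # pooler_output
```

This is why the `_, pooled_output = ...` pattern, common in older BERT tutorials, does not carry over to DeBERTa.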

Rexedoziem

October 5, 2022, 10:34am


I guess the error came from the model.
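If the model is the culprit, one option (a sketch, not a fix confirmed in this thread) is to stop unpacking and pool the encoder output directly. For a PyTorch tensor of shape `(batch, seq_len, hidden)` that would be `self.deberta(...).last_hidden_state[:, 0]`, which takes the first-token vector of each sequence. To keep the example self-contained, the snippet below performs the same selection on nested Python lists; `pool_first_token` is a hypothetical helper, not part of any library:

```python
from typing import List, Sequence

Vector = List[float]

def pool_first_token(last_hidden_state: Sequence[Sequence[Vector]]) -> List[Vector]:
    """Keep the first token's vector from every sequence in the batch.

    Input shape (batch, seq_len, hidden) -> output shape (batch, hidden),
    the list equivalent of `last_hidden_state[:, 0]` on a PyTorch tensor.
    """
    return [list(sequence[0]) for sequence in last_hidden_state]

# Toy batch: 2 sequences, 3 tokens each, hidden size 2.
hidden = [
    [[1, 2], [3, 4], [5, 6]],
    [[7, 8], [9, 10], [11, 12]],
]
pooled = pool_first_token(hidden)
print(pooled)  # [[1, 2], [7, 8]]
```

In the model's `forward`, the pooled vectors would then go through `self.dropout` and `self.classified` exactly as `pooled_output` did before.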