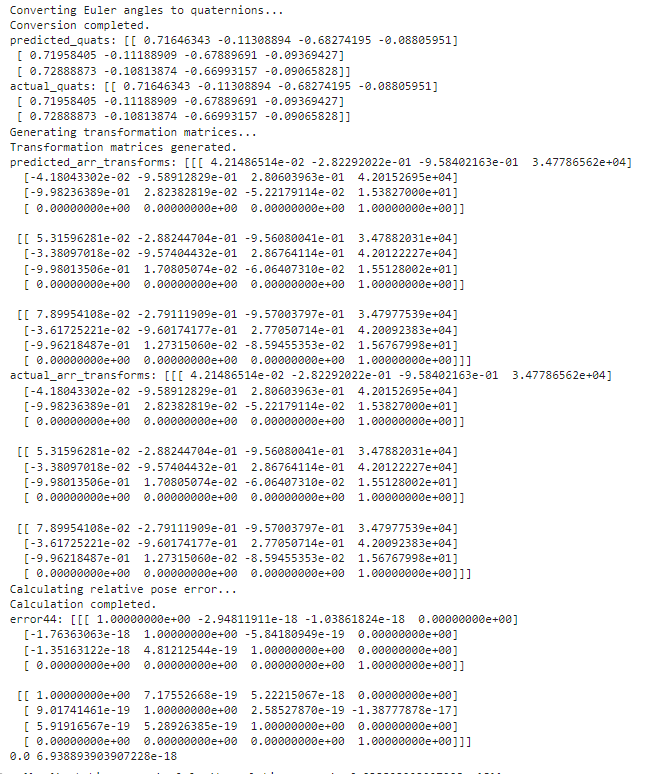

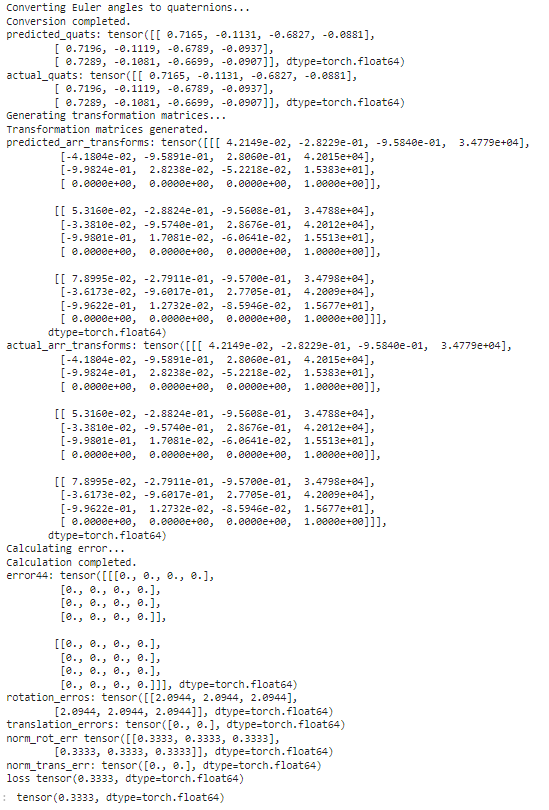

I am trying to implement a loss function, but the NumPy implementation gives me exactly zero, whereas the PyTorch one gives 0.33333…. I've rechecked both implementations, they are the same, and I am using float64 in both.

Is this normal or is there something I should be aware of?
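A quick way to check whether the two versions really agree is to feed both the same float64 arrays and compare the results directly. The real loss is only shown in the screenshots, so this is a minimal sketch that assumes a hypothetical stand-in loss (plain mean squared error, `mse_numpy` / `mse_torch` are illustrative names). For a loss like this, identical predictions and targets give exactly 0.0 in both libraries. If the actual loss gives about 1/3 in PyTorch on identical inputs, comparing intermediate values step by step should show where the two versions diverge.

```python
import numpy as np
import torch

# Hypothetical stand-in loss (mean squared error); the real loss is in the screenshots.
def mse_numpy(pred: np.ndarray, target: np.ndarray) -> float:
    return float(np.mean((pred - target) ** 2))

def mse_torch(pred: torch.Tensor, target: torch.Tensor) -> float:
    return float(torch.mean((pred - target) ** 2))

def compare(pred_np: np.ndarray, target_np: np.ndarray) -> tuple:
    # torch.from_numpy keeps the NumPy dtype, so float64 stays float64
    pred_t = torch.from_numpy(pred_np)
    target_t = torch.from_numpy(target_np)
    assert pred_t.dtype == torch.float64 and target_t.dtype == torch.float64
    return mse_numpy(pred_np, target_np), mse_torch(pred_t, target_t)

pred = np.array([[0.2, 0.3, 0.5], [0.1, 0.8, 0.1]], dtype=np.float64)
target = pred.copy()  # predicted == actual
np_loss, torch_loss = compare(pred, target)
print(np_loss, torch_loss)

# Pitfall: tensors built from Python floats use the default dtype
# (float32 unless torch.set_default_dtype was called), not float64.
print(torch.tensor([0.1, 0.2]).dtype)
```

If both numbers print as 0.0 here but the real loss still differs, the two implementations are probably not doing the same computation (for example a different reduction, an extra softmax, or a smoothing term).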

I also wonder what impact this has on my model: even though predicted == actual, the loss is never zero.

Please help me fix this.