Hello,

I am learning from this guide:

INSTALLING C++ DISTRIBUTIONS OF PYTORCH

My goal is to create an application that uses LibTorch to run inference with a model I trained through the PyTorch Python API.

I created the application following the guide's instructions, and it builds and runs successfully.

Next, I added a *.cu file because I want to apply some custom CUDA logic to the input tensor before passing it to the model's forward() call.

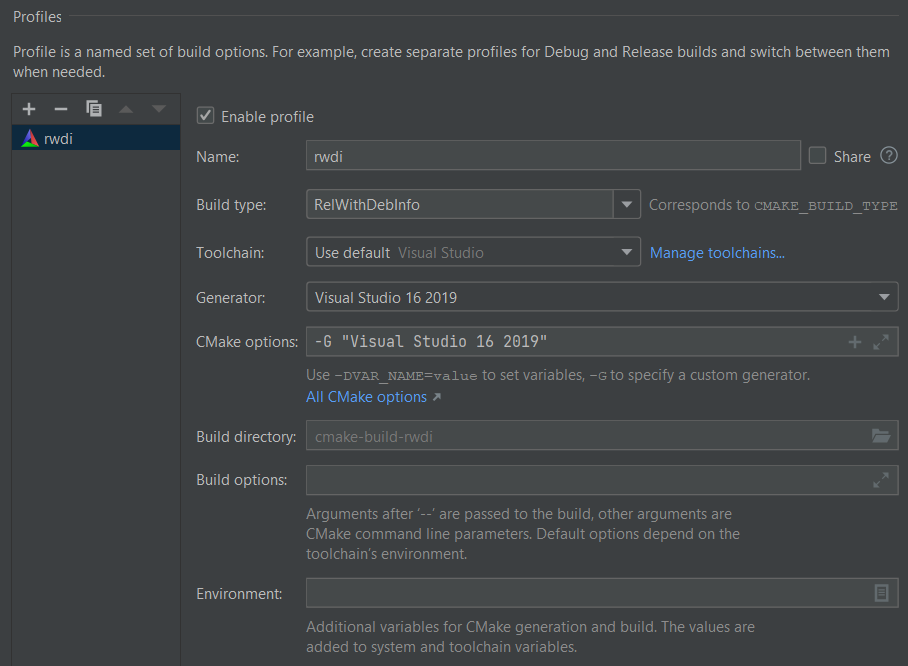

I then updated my CMake file with the CUDA-related commands so that the CMake configuration step finds all the required CUDA include directories and library files.

The CMake cache is successfully generated.
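For illustration, a minimal sketch of this kind of setup (LibTorch plus a CUDA source in one executable) might look like the following. It assumes CMake 3.18 or newer; the project name `example-app` and the file names `example-app.cpp` and `preprocess.cu` are placeholders, not the exact files from this project, and `CUDA_ARCHITECTURES 50` assumes compute capability 5.0 for the Quadro M2000M.

```cmake
# Sketch only: project and file names are placeholders.
cmake_minimum_required(VERSION 3.18 FATAL_ERROR)

# Enable CUDA as a first-class language so CMake compiles .cu files with nvcc.
project(example-app LANGUAGES CXX CUDA)

# Locate LibTorch, e.g. configure with -DCMAKE_PREFIX_PATH=C:/libtorch
find_package(Torch REQUIRED)
set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} ${TORCH_CXX_FLAGS}")

# The C++ entry point from the install guide plus the new CUDA source file.
add_executable(${PROJECT_NAME} example-app.cpp preprocess.cu)

# Linking LibTorch also propagates the usage requirements of its imported
# targets (include directories, compile definitions, compile options) to the
# sources of this target, including the .cu file compiled by nvcc.
target_link_libraries(${PROJECT_NAME} PUBLIC "${TORCH_LIBRARIES}")

set_property(TARGET ${PROJECT_NAME} PROPERTY CXX_STANDARD 14)
# Assumption: compute capability 5.0 for the Quadro M2000M.
set_property(TARGET ${PROJECT_NAME} PROPERTY CUDA_ARCHITECTURES 50)
```

Because `CMAKE_CXX_FLAGS` only applies to C++ sources, anything that reaches nvcc from LibTorch would come through the `target_link_libraries` line rather than through `TORCH_CXX_FLAGS`.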

However, as soon as I start the build, I get the following error:

```
nvcc fatal : A single input file is required for a non-link phase when an outputfile is specified
```

I found that if I comment out this CMake command:

```cmake
target_link_libraries(${PROJECT_NAME} PUBLIC "${TORCH_LIBRARIES}")
```

the nvcc error no longer appears, but then all Torch symbols are unresolved, so the link step fails and no *.exe is generated.

This is the value of ${TORCH_LIBRARIES}:

```
TORCH_LIBRARIES = torch;torch_library;C:/libtorch/lib/c10.lib;C:/libtorch/lib/kineto.lib;C:\Program Files\NVIDIA Corporation\NvToolsExt/lib/x64/nvToolsExt64_1.lib;C:/Program Files/NVIDIA GPU Computing Toolkit/CUDA/v11.4/lib/x64/cudart_static.lib;C:/libtorch/lib/caffe2_nvrtc.lib;C:/libtorch/lib/c10_cuda.lib
```
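Since commenting out `target_link_libraries` makes the nvcc error disappear, one way to narrow it down is to print the compile options that the imported targets in `${TORCH_LIBRARIES}` (here `torch` and `torch_library`), and the targets they depend on, would pass to the executable. This is a diagnostic sketch assuming CMake 3.18 or newer; `dump_interface_options` and the global property `DUMP_SEEN` are hypothetical helper names, not part of LibTorch or CMake.

```cmake
# Diagnostic sketch: print the INTERFACE_COMPILE_OPTIONS that each imported
# target (and every target it depends on) would add when it is linked.
# dump_interface_options and DUMP_SEEN are hypothetical helper names.
function(dump_interface_options tgt)
  get_property(_seen GLOBAL PROPERTY DUMP_SEEN)
  # Skip plain .lib paths, generator expressions, and targets already printed.
  if(NOT TARGET "${tgt}" OR "${tgt}" IN_LIST _seen)
    return()
  endif()
  set_property(GLOBAL APPEND PROPERTY DUMP_SEEN "${tgt}")

  # Evaluates to "_opts-NOTFOUND" when the target sets no compile options.
  get_target_property(_opts "${tgt}" INTERFACE_COMPILE_OPTIONS)
  message(STATUS "${tgt}: INTERFACE_COMPILE_OPTIONS = ${_opts}")

  # Recurse into the dependencies this target passes on to its consumers.
  get_target_property(_deps "${tgt}" INTERFACE_LINK_LIBRARIES)
  if(_deps)
    foreach(_dep IN LISTS _deps)
      dump_interface_options("${_dep}")
    endforeach()
  endif()
endfunction()

# Usage: call after find_package(Torch REQUIRED).
foreach(_lib IN LISTS TORCH_LIBRARIES)
  dump_interface_options("${_lib}")
endforeach()
```

The output appears during the configure step, one line per target. Compile options that are not restricted with a `$<COMPILE_LANGUAGE:CXX>` generator expression are applied to CUDA sources too, so any MSVC-only flag in this list would also land on the nvcc command line; whether that is the actual cause here is an assumption worth checking, not something confirmed.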

My setup is:

- OS: Microsoft Windows 10 Enterprise 2016 LTSB
- GPU: Quadro M2000M
- CUDA version: 11.4
- Visual Studio: 2019
- Python: 3.6.8
- LibTorch: 1.10.1

I can share my entire CMake file and source code if needed, but as described above, my source code is exactly as given in the installation guide.

Please advise.