C:\Users\USER\Desktop\RVC1006Nvidia>runtime\python.exe infer-web.py --pycmd runtime\python.exe --port 7897

C:\Users\USER\Desktop\RVC1006Nvidia\runtime\lib\site-packages\torch\cuda\__init__.py:173: UserWarning:

NVIDIA GeForce RTX 5090 with CUDA capability sm_120 is not compatible with the current PyTorch installation.

The current PyTorch install supports CUDA capabilities sm_37 sm_50 sm_60 sm_61 sm_70 sm_75 sm_80 sm_86 sm_90 compute_37.

If you want to use the NVIDIA GeForce RTX 5090 GPU with PyTorch, please check the instructions at Start Locally | PyTorch

warnings.warn(incompatible_device_warn.format(device_name, capability, " ".join(arch_list), device_name))

2025-04-10 18:55:22 | INFO | configs.config | Found GPU NVIDIA GeForce RTX 5090

is_half:True, device:cuda:0

Install the latest nightly binaries with CUDA 12.8 and it will work as the Blackwell support requires CUDA >= 12.8.
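One way to confirm whether the binary that actually gets loaded contains Blackwell kernels is to compare the GPU's compute capability with the architectures the build was compiled for. A minimal sketch, assuming it is run with the same interpreter that launches the application (`runtime\python.exe` in the log above); the helper name `build_supports` is hypothetical and only checks for an exact `sm_XY` entry:

```python
def build_supports(arch_list, capability):
    """Return True if the exact 'sm_XY' entry for (major, minor) is in arch_list.

    Hypothetical helper: it ignores PTX ('compute_XY') entries and only
    looks for a matching binary architecture.
    """
    major, minor = capability
    return f"sm_{major}{minor}" in arch_list


def report():
    # Skip gracefully when torch is not importable from this interpreter.
    try:
        import torch
    except ImportError:
        print("torch is not installed for this interpreter")
        return

    print("torch version:", torch.__version__)
    print("built with CUDA:", torch.version.cuda)
    if not torch.cuda.is_available():
        print("CUDA is not available")
        return

    arch_list = torch.cuda.get_arch_list()
    capability = torch.cuda.get_device_capability(0)
    print("compiled architectures:", arch_list)
    print("device capability:", capability)
    print("binary kernels for this GPU:", build_supports(arch_list, capability))


if __name__ == "__main__":
    report()
```

If the printed architecture list stops at `sm_90`, as in the warning above, the loaded build predates Blackwell support regardless of what is installed elsewhere.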

Python 3.12.8 (tags/v3.12.8:2dc476b, Dec 3 2024, 19:30:04) [MSC v.1942 64 bit (AMD64)] on win32

Type "help", "copyright", "credits" or "license" for more information.

import torch

print(torch.__version__)

2.8.0.dev20250408+cu128

It doesn’t work

Same error

The nightly binary itself works as confirmed e.g. here so I don’t know what the issue in your setup is.

The user in the linked post also claimed to have installed the right binary and later claimed:

Upgrading to the latest nightly (with sm_120 support) resolved that

so I also don’t fully understand what happened before.

C:\Users\USER\Desktop\RVC1006Nvidia>runtime\python.exe infer-web.py --pycmd runtime\python.exe --port 7897

C:\Users\USER\Desktop\RVC1006Nvidia\runtime\lib\site-packages\torch\cuda\__init__.py:173: UserWarning:

NVIDIA GeForce RTX 5090 with CUDA capability sm_120 is not compatible with the current PyTorch installation.

The current PyTorch install supports CUDA capabilities sm_37 sm_50 sm_60 sm_61 sm_70 sm_75 sm_80 sm_86 sm_90 compute_37.

If you want to use the NVIDIA GeForce RTX 5090 GPU with PyTorch, please check the instructions at Start Locally | PyTorch

warnings.warn(incompatible_device_warn.format(device_name, capability, " ".join(arch_list), device_name))

2025-04-10 20:05:22 | INFO | configs.config | Found GPU NVIDIA GeForce RTX 5090

is_half:True, device:cuda:0

2025-04-10 20:05:24 | INFO | __main__ | Use Language: en_US

Running on local URL: http://0.0.0.0:7897

2025-04-10 20:05:33 | INFO | infer.modules.vc.modules | Get sid: kikiV1.pth

2025-04-10 20:05:33 | INFO | infer.modules.vc.modules | Loading: assets/weights/kikiV1.pth

2025-04-10 20:05:34 | INFO | infer.modules.vc.modules | Select index: logs\kikiV1.index

2025-04-10 20:05:44 | INFO | infer.modules.vc.pipeline | Loading rmvpe model,assets/rmvpe/rmvpe.pt

C:\Users\USER\Desktop\RVC1006Nvidia>pause

Press any key to continue . . .

The problem could be with the software or the 5090 card.

Because when I change the card to a 4090, it works.

Is there a solution? I’ve tried everything.

The architecture list in your warning (sm_37 through sm_90, with no sm_120) shows that an older PyTorch build is still being loaded, so make sure a single binary built with CUDA 12.8 is installed.
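To see which build a given interpreter picks up, it can help to print the interpreter path next to the location PyTorch would be imported from. A minimal sketch using only the standard library; `locate_package` is a hypothetical helper name, and the thread does not say whether the interactive Python 3.12.8 session and `runtime\python.exe` are the same interpreter, so that is left as an open assumption:

```python
import importlib.util
import sys


def locate_package(name):
    """Return the file the current interpreter would import `name` from, or None."""
    spec = importlib.util.find_spec(name)
    return None if spec is None else spec.origin


if __name__ == "__main__":
    # Shows which python.exe is running and where its torch package lives.
    print("interpreter:", sys.executable)
    print("torch would be imported from:", locate_package("torch"))
```

Saving this as a script and running it once with `runtime\python.exe` and once with the `python` used for the version check would show whether both resolve to the same `site-packages` directory.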

Same issue

UserWarning:

NVIDIA GeForce RTX 5090 Laptop GPU with CUDA capability sm_120 is not compatible with the current PyTorch installation.

The current PyTorch install supports CUDA capabilities sm_50 sm_60 sm_61 sm_70 sm_75 sm_80 sm_86 sm_90.

If you want to use the NVIDIA GeForce RTX 5090 Laptop GPU GPU with PyTorch, please check the instructions at https://pytorch.org/get-started/locally/

pip3 list

Package Version

einops 0.8.1

filelock 3.18.0

flash_attn 2.7.4.post1

fsspec 2025.5.1

Jinja2 3.1.6

MarkupSafe 3.0.2

mpmath 1.3.0

networkx 3.5

numpy 2.1.2

pillow 11.0.0

pip 25.0.1

setuptools 80.9.0

sympy 1.14.0

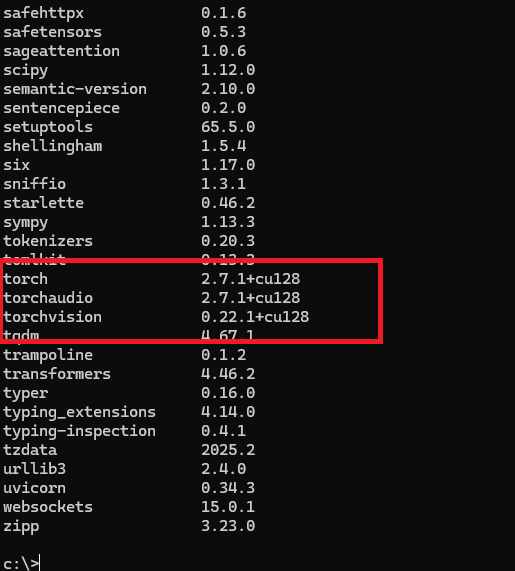

torch 2.7.1+cu128

torchaudio 2.7.1+cu128

torchvision 0.22.1+cu128

typing_extensions 4.14.0

python --version

Python 3.12.10

Same as before: make sure a single, correct PyTorch binary is used: NVIDIA GeForce RTX 5090 - #7 by ptrblck

How to do this? I already showed you that I have Python and Torch installed. Well, okay, I will attach a screenshot.

Yes, pip list shows the right version but does not account for other binaries found in e.g. the base env.
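A rough way to look for more than one copy is to list every installed distribution named `torch` that the running interpreter can see, together with the directory it lives in. This is a sketch under assumptions: `find_distributions` is a hypothetical helper, and it only inspects the current interpreter's `sys.path`, so it has to be run separately with each interpreter or environment in question:

```python
from importlib import metadata


def find_distributions(name):
    """List (version, location) for each installed distribution called `name`."""
    wanted = name.lower()
    found = []
    for dist in metadata.distributions():
        # Distributions with broken metadata may lack a Name; treat as empty.
        dist_name = dist.metadata.get("Name") or ""
        if dist_name.lower() == wanted:
            found.append((dist.version, str(dist.locate_file(""))))
    return found


if __name__ == "__main__":
    for version, location in find_distributions("torch"):
        print(version, "in", location)
```

More than one line of output, or a version other than the expected `+cu128` build, would suggest that a different binary is shadowing the one shown by pip list.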