I am trying to find the best API for measuring inference time. I set up a simple matrix multiplication and timed it with several different APIs. However, each API reports a different result, and I cannot figure out the exact reason. Can someone please help me with this?

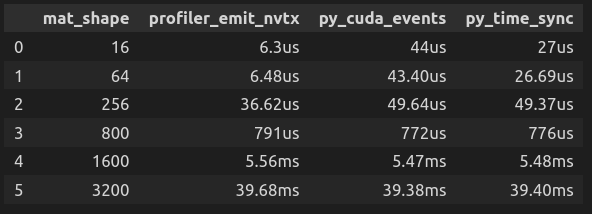

mat_shape : Shape of the matrices used for each run

profiler_emit_nvtx : Results from NVIDIA's nvprof profiling tool

py_cuda_events : Results from the torch.cuda.Event API

py_time_sync : Results from the Python time API with CUDA synchronization
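
For reference, the two in-script methods (py_time_sync and py_cuda_events) can be sketched roughly as follows. This is a minimal sketch, not the code from the linked script: the function names `time_with_sync` and `time_with_events`, the warm-up count, and the iteration count are assumptions made for illustration.

```python
import time
import torch


def time_with_sync(fn, warmup=10, iters=100):
    """Average wall-clock time per call in ms (host timer + CUDA sync)."""
    use_cuda = torch.cuda.is_available()
    for _ in range(warmup):          # warm-up runs are excluded from timing
        fn()
    if use_cuda:
        torch.cuda.synchronize()     # drain pending GPU work before starting
    start = time.perf_counter()
    for _ in range(iters):
        fn()
    if use_cuda:
        torch.cuda.synchronize()     # wait for the timed kernels to finish
    return (time.perf_counter() - start) * 1e3 / iters


def time_with_events(fn, warmup=10, iters=100):
    """Average time per call in ms using torch.cuda.Event (requires CUDA)."""
    start = torch.cuda.Event(enable_timing=True)
    end = torch.cuda.Event(enable_timing=True)
    for _ in range(warmup):
        fn()
    torch.cuda.synchronize()
    start.record()                   # timestamp recorded on the GPU stream
    for _ in range(iters):
        fn()
    end.record()
    torch.cuda.synchronize()         # make sure `end` has actually been reached
    return start.elapsed_time(end) / iters   # elapsed_time returns milliseconds


if __name__ == "__main__":
    device = "cuda" if torch.cuda.is_available() else "cpu"
    a = torch.randn(512, 512, device=device)
    b = torch.randn(512, 512, device=device)
    print("time + sync (ms):", time_with_sync(lambda: torch.mm(a, b)))
    if device == "cuda":
        print("cuda events (ms):", time_with_events(lambda: torch.mm(a, b)))
```

Note that the host-timer version includes Python and kernel-launch overhead, while the event version measures time between two points on the GPU stream, so some difference between the two is expected, especially for small matrices.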

I have shared my code here: tvm-explore/pytorch_benchmark_explore_v2.py at master · manojec054/tvm-explore · GitHub

How to run : nvprof --profile-from-start off --print-gpu-summary python pytorch_benchmark_explore_v2.py
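
With `--profile-from-start off`, nvprof only records between explicit profiler start and stop calls made by the script. A minimal sketch of how such a region is typically marked in PyTorch is shown here; the helper name `run_profiled` and the iteration count are assumptions for illustration, and the linked script may do this differently.

```python
import torch


def run_profiled(fn, iters=10):
    """Run fn inside a profiler window with NVTX ranges; returns iterations run."""
    if not torch.cuda.is_available():
        # No GPU available: just run the workload so the sketch stays runnable.
        for _ in range(iters):
            fn()
        return iters

    torch.cuda.synchronize()
    torch.cuda.profiler.start()                  # nvprof starts recording here
    with torch.autograd.profiler.emit_nvtx():    # wrap each op in an NVTX range
        for _ in range(iters):
            fn()
    torch.cuda.synchronize()                     # let the kernels finish first
    torch.cuda.profiler.stop()                   # nvprof stops recording here
    return iters
```

The GPU summary printed by nvprof reports time spent in the kernels themselves, so it would not include launch overhead or host-side Python time.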