Hello,

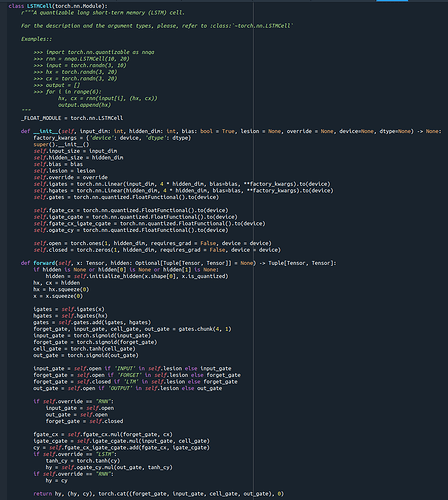

I am doing research on LSTMs and have modified the LSTMCell in quantizable/modules/rnn.py.

I have added ‘if’ conditions that should functionally turn the LSTM into an RNN. Visualising the network activity shows everything being clamped to 1 or 0 as intended.

However, this ‘handcrafted RNN’ consistently learns an RL task that the official PyTorch RNN consistently fails on.

I am very confused as to what could be different between my ‘handcrafted RNN’ and the official PyTorch RNN. I use the tanh activation function and the same number of neurons for both. Clamping the forget gate activity to 1 does prevent learning, so I know my modifications are having an effect.
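
To make the comparison concrete, here is a minimal framework-free sketch of one update step for a single scalar unit. It follows the standard LSTM equations and assumes one possible clamping (input and output gates fixed to 1, forget gate fixed to 0). The `clamp` argument, the `RNN_LIKE` setting, and the weight values are hypothetical stand-ins for illustration and may not match the exact ‘if’ conditions in the screenshot.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def rnn_step(x, h, w_x, w_h, b):
    # Vanilla tanh RNN update: h' = tanh(w_x*x + w_h*h + b)
    return math.tanh(w_x * x + w_h * h + b)

def lstm_step(x, h, c, params, clamp=None):
    # params: gate name -> (w_x, w_h, b) for gates "i", "f", "g", "o"
    # clamp:  gate name -> fixed value (hypothetical stand-in for the 'if' conditions)
    clamp = clamp or {}
    pre = {name: w_x * x + w_h * h + b for name, (w_x, w_h, b) in params.items()}
    i = clamp.get("i", sigmoid(pre["i"]))  # input gate
    f = clamp.get("f", sigmoid(pre["f"]))  # forget gate
    o = clamp.get("o", sigmoid(pre["o"]))  # output gate
    g = math.tanh(pre["g"])                # candidate cell value
    c_next = f * c + i * g
    h_next = o * math.tanh(c_next)
    return h_next, c_next

# Arbitrary illustrative weights (assumed, not taken from any trained model).
params = {
    "i": (0.3, -0.2, 0.0),
    "f": (0.1, 0.4, 1.0),
    "g": (0.5, -0.7, 0.1),
    "o": (-0.6, 0.2, 0.0),
}
RNN_LIKE = {"i": 1.0, "f": 0.0, "o": 1.0}  # assumed clamping

x, h_prev, c_prev = 0.8, -0.3, 0.5
h_rnn = rnn_step(x, h_prev, *params["g"])
h_lstm, c_lstm = lstm_step(x, h_prev, c_prev, params, clamp=RNN_LIKE)
print("RNN hidden:", h_rnn)
print("clamped LSTM cell:", c_lstm, "hidden:", h_lstm)
```

Under these assumptions the clamped cell state equals the vanilla RNN hidden state for the same inputs, but the hidden state the cell emits passes through a second tanh, so the two are not step-for-step identical.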

Any help understanding what could be going on under the hood would be greatly appreciated.