When we use dropout, the input to the dropout layer is typically the output of some layer such as Conv2d or MaxPool2d, and a certain fraction of its elements is zeroed out. Instead of zeroing out elements of the input tensor, why do we not zero out weight elements, to indicate that we do not want certain weights to get updated? That is, only a few weights would get updated and the rest would either stay as they were or be zeroed out. Something like "drop weights".

This technique is called DropConnect and is described in this paper.

An implementation is discussed here.

I haven’t seen a wide adoption of this technique, but I cannot claim one is superior over the other.
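To make the difference concrete, here is a minimal sketch that contrasts masking activations (dropout) with masking weights (a DropConnect-style layer). It is a simplification under stated assumptions and not the exact procedure of the paper: a single mask is shared by the whole batch, the surviving weights are rescaled the same way dropout rescales activations, and the names `keep_mask` and `grad_of_dropped` are made up for this example.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)

p = 0.5                                          # drop probability (hyperparameter)
x = torch.randn(4, 8)                            # batch of 4 inputs with 8 features
weight = torch.randn(3, 8, requires_grad=True)   # weight of a linear layer with 3 outputs

# Dropout: zero random elements of the activation and rescale the rest by 1/(1-p).
dropout_out = F.linear(F.dropout(x, p=p, training=True), weight)

# DropConnect-style sketch (assumption: one mask for the whole batch):
# zero random elements of the weight instead of the activation.
# The stored weight itself is not modified, only the masked copy is used.
keep_mask = (torch.rand_like(weight) > p).to(weight.dtype)
dropconnect_out = F.linear(x, weight * keep_mask / (1 - p))

# Weights that were masked out receive a zero gradient for this step.
dropconnect_out.sum().backward()
grad_of_dropped = weight.grad[keep_mask == 0]
```

In this sketch the dropped weights are simply not updated by plain gradient descent in that step, which is close to the "only a few weights get updated" idea from the question; a new mask would be sampled in the next forward pass.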

Hello, this leads to some confusion. What is the insight behind using a dropout or DropConnect based neural network, or even a pruned/sparse neural network? Does it have to do with several neurons staying inactive when we carry out a particular task? That is, of all the neurons in our brains, only a few are involved in a particular task and the rest are idle, and these different techniques then model the same concept mathematically? Also, is there a technique that does not need a hyperparameter to drop elements? In dropout we need to specify the dropout rate manually; is there a technique that works without any manual hyperparameter input?

I wouldn’t go that far and compare each DL layer to the functionality of the brain.

The general idea of dropout (and probably dropconnect) is that you are seeing a lot of different “smaller” models during training (since you are removing either some activations or parameters, you are reducing the capacity of the model), while you are seeing an ensemble of models during validation and testing. This “ensemble” is not explicitly created by using several different models, but implicitly by disabling dropout during the validation and test phases.
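As a small illustration of the train/eval behaviour described above, the following sketch applies `nn.Dropout` to a tensor of ones in both modes. The tensor size and the drop probability are arbitrary choices for the example.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

drop = nn.Dropout(p=0.5)
x = torch.ones(1000)

# Training mode: roughly half of the elements are zeroed and the
# survivors are scaled by 1 / (1 - p) = 2, so the expected value is kept.
drop.train()
y_train = drop(x)

# Evaluation mode: dropout is disabled and the input passes through unchanged.
drop.eval()
y_eval = drop(x)
```

Each training step therefore sees a different random "smaller" model, while evaluation uses all activations at once.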

Both techniques add some randomness to the training which is usually beneficial.

I’m not sure how to avoid specifying the drop probability. Maybe there are some approaches for making the drop probability trainable? If not, that would certainly be an interesting research area.

Hello, thanks for your reply. When we update weights in the case of images, shouldn’t it be the case that updating one representation does not necessarily update the others? For example, in the case of text, if we have a learnt representation of a word, let's say apple, and we want to change this representation, then we update the weights associated with the word apple and no other word in our vocab. If our vocab had another word, grape, its representation would not be updated. But when we train a neural network on images and want it to change the learnt representation for one image, why do we update all the weights? Shouldn’t only the weights associated with that image get updated, while all the other representations stay as they were? The notion of dropout/pruning would then mean not changing the representation of any other pattern. So if we had images of two different objects, then during training either only the weights associated with the first object would get updated, or only the weights associated with the second, and not all the weights in our neural network.

For example, if I have two 1-channel 5x5 images,

x = torch.randn(2, 1, 5, 5)

then I would represent each of the 25 pixels in each image with, let's say, 10 numbers (weights), something like

pixel1 -> [10 weights]

pixel2 -> [10 weights]

...

pixel25 -> [10 weights]

and then during training I update these. Let's say I take a dot product between neighbouring pixels within each 3x3 kernel and get a learnt representation of each pixel, so after training these pixels would be

pixel1 -> [learnt 10 weights]

pixel2 -> [learnt 10 weights]

...

pixel25 -> [learnt 10 weights]

and then compute the loss using cross-entropy, and only update the representation of the pixels associated with the image that has been predicted incorrectly. So if my neural network predicted one image incorrectly, then I would update the representation of its 25 pixels and nothing else.

Currently, my neural network looks something like this, but it gives me a high loss.

import torch
import torch.nn as nn
import torch.nn.functional as F

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        # self.conv1 = nn.Conv2d(3, 6, 5)
        # self.pool = nn.MaxPool2d(2, 2)
        # self.conv2 = nn.Conv2d(6, 16, 5)
        # self.fc1 = nn.Linear(16 * 5 * 5, 120)
        # self.fc2 = nn.Linear(120, 84)
        self.fc1 = nn.Linear(3072*10, 1000)
        self.fc2 = nn.Linear(1000, 100)
        self.fc3 = nn.Linear(100, 10)
        self.embedding = nn.Embedding(512, 10)  # one 10-dim vector per shifted pixel value

    def forward(self, x):
        # print(x.shape)
        # scale to integers and shift by 256 so every index is positive (batch size 4 is hard-coded)
        x = ((x.reshape(4, 3*32*32))*255 + 256).long()
        # print(x)
        x = self.embedding(x)  # shape (4, 3072, 10)
        # print(x)
        x = x.reshape(4, 3, 32, 32, 10)
        y = x.unfold(2, 1, 1).unfold(3, 1, 1).reshape(4, 3, 32, 32, 10, 1, 1) # extract 1x1 patches
        z = x.unfold(2, 3, 1).unfold(3, 3, 1).reshape(4, 3, 30, 30, 10, 9) # extract 3x3 patches
        # f, g, i, j and k are all summed out here, so each pixel embedding is
        # scaled by the sum over all 3x3 patches of its channel
        out = torch.einsum('abcdefg, abijek -> abcde', y, z) # learnt representation of each pixel
        out = out.reshape(4, 3*32*32*10)
        out = self.fc1(out)
        out = self.fc2(out)
        out = self.fc3(out)
        # print('final output:', out)
        return out

net = Net().cuda()

I run this on the tutorial at https://pytorch.org/tutorials/beginner/blitz/cifar10_tutorial.html

It appears to me that I am doing the unfolding and einsum incorrectly.

That is basically the training procedure of a neural network.

Since the parameters are somehow connected to each other (e.g. via sequential conv layers), all parameters would be updated.

How would you isolate the weights, which are only associated with the current class?

I do not use any external filters as parameters in my neural network. I do not use anything like

nn.Conv2d(3, 10, 3)

because I do not want filters as parameters that update for every image. Instead, I represent each pixel in the image with an embedding.

For example, if I have an image like

x = torch.randn(1, 1, 5, 5)

then I represent each pixel with an embedding, so if these 25 pixels were different, I would do

y = nn.Embedding(25, 10)

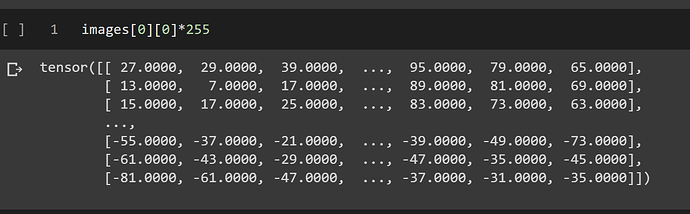

I use the CIFAR-10 dataset, so an image in it looks like this (multiplied by 255 to get integers).

Some of these values are negative, so I add 256 to all of them to make every value positive, and for my embedding I use

y = nn.Embedding(512, 10)

It appears to me that there are fewer than 512 variants of pixels, so one of them would be a white pixel, one of them a black pixel, and so on, each represented by 10 numbers.

So for each image, only the pixels that are part of it would get updated. For example, if there is no white pixel in a dog image, then that embedding would stay as it is, with no update.

During the training phase, the representation of each pixel is randomly initialized, so

white pixel -> [10 random numbers]

black pixel -> [10 random numbers]

If our neural network makes a wrong prediction, then these pixel representations get updated, so after training,

white pixel -> [10 learnt numbers]

black pixel -> [10 learnt numbers]

and these learnt pixel representations are then carried over to testing.

My neural network is learning the representation of each pixel. Just like we use word embeddings for text, I use pixel embeddings for images.

Am I doing something wrong here?

I don’t think this is the case.

If you pass the embedding outputs to e.g. a linear layer, each pixel “location” would be updated, but for each pixel only the current “input word” would be updated.

Basically you are creating a lookup table for each possible pixel value (in [0, 255]), where only the currently used input value embedding will be updated.
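A small sketch can make this visible: after one backward pass, only the embedding rows of the pixel values that actually occur in the input have a non-zero gradient, while the linear layer behind it gets gradients for all of its weights. The 4-pixel "image" and the layer sizes are made up for the example.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

embedding = nn.Embedding(256, 10)   # one 10-dim vector per pixel value in [0, 255]
fc = nn.Linear(4 * 10, 2)           # consumes a tiny 4-pixel "image"

pixels = torch.tensor([[0, 255, 255, 17]])   # one image, 4 pixel values
out = fc(embedding(pixels).reshape(1, -1))
out.sum().backward()

# Rows of the lookup table that received a non-zero gradient.
updated_rows = embedding.weight.grad.abs().sum(dim=1).nonzero().flatten().tolist()

# The linear layer sees every pixel location, so all its weights get a gradient.
all_fc_weights_updated = bool(fc.weight.grad.ne(0).all())
```

Here `updated_rows` contains only the values 0, 17 and 255; the other 253 rows of the table are left untouched by this step.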

Hello, the technique I use does start learning representations of pixels, and it gives me around 30-32% accuracy on the CIFAR-10 dataset. The problem is how to make it learn a combined representation of pixels. For example, it has learnt what a white pixel is and what a black pixel is, but when shown a combination of these pixels, it has no idea what to do. To address this, I increase my embedding layer size to 1000, like

nn.Embedding(1000, 10)

but which additional patterns should I make it learn? For example, I might want it to have a learnt representation for a 2x2 patch like

[BW

BW] -> [10 learnt numbers]

or a 3x3 patch like,

[WBW

BWB

WBW] -> [10 learnt numbers]

But there could be so many of these patches.

For each pixel location you would already have a lookup table for each possible value (256 * number of pixels). If you would like to take into account all combinations as well as patches, this number might grow pretty quickly.
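To give a rough feeling for how quickly this grows, here is a back-of-the-envelope calculation. It assumes 8-bit pixel values (256 levels) and CIFAR-10 sized inputs (3 channels, 32x32 pixels); the helper `distinct_patches` is a hypothetical name introduced only for this example.

```python
levels = 256                 # possible values of an 8-bit pixel
num_locations = 3 * 32 * 32  # pixel locations in a 3-channel 32x32 image

# One table entry per (location, value) pair.
per_location_entries = levels * num_locations

def distinct_patches(k, levels=256):
    """Number of distinct single-channel k x k patches with the given number of levels."""
    return levels ** (k * k)

two_by_two = distinct_patches(2)                 # 8-bit 2x2 patches
binary_three_by_three = distinct_patches(3, 2)   # black/white 3x3 patches
```

Even for 2x2 patches of 8-bit pixels the number of possible patterns is already in the billions, so most table entries would never be seen during training.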

How did you calculate the input dimension of 1000?

Note that even the first approach would update the embeddings very sparsely, and a lot of the inputs most likely won’t be seen at all during training and thus would never be updated.

Hello, is 343200 parameters moderate? For example, if I have a binary image,

[WBW

BWB

WBW]

then the total information in this image is

1x1 -> [B] -> appear at [0, 1], [1, 0], [1, 2], [2, 1]

[W] -> [0, 0], [0, 2], [1, 1], [2, 0], [2, 2]

2x2 -> [BW

WB] -> [0, 1], [1, 0]

[WB

BW] -> [0, 0], [1, 1]

3x3 -> [WBW

BWB

WBW] -> [0, 0]

That is 14 patterns. If each pattern is represented with 10 numbers, that gives 14*10 parameters.

For a 3-channel 32x32 image, it would be

3*(32*32 + 31*31 + ... + 1*1)*10

343200 parameters.
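The arithmetic above can be checked with a few lines. This sketch counts square patch positions only, exactly as in the 3x3 example (9 + 4 + 1 = 14); the function name `num_square_patches` is made up for the illustration.

```python
def num_square_patches(size):
    """Count all k x k patch positions (k = 1..size) in a size x size image."""
    return sum((size - k + 1) ** 2 for k in range(1, size + 1))

embedding_dim = 10
channels = 3

patches_3x3 = num_square_patches(3)      # the binary 3x3 example
patches_32x32 = num_square_patches(32)   # one channel of a 32x32 image
total_params = channels * patches_32x32 * embedding_dim
```

Note that this counts patch positions, not distinct patterns; identical patches at different positions are counted separately.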

Also, is there a way to extract all nxn sized patches together with their indices?

I could also include

1x2 -> [WB] -> [0, 0], [1, 1], [2, 0]

[BW] -> [0, 1], [1, 0], [2, 1]

2x1 -> [W

B] -> [0, 0], [0, 2], [1, 1]

[B

W] -> [0, 1], [1, 0], [1, 2]

1x3 -> [WBW] -> [0, 0], [2, 0]

[BWB] -> [1, 0]

3x1 -> [W

B

W] -> [0, 0], [0, 2]

[B

W

B] -> [0, 1]

which would make even more parameters.

The number 1000 in the previous post would be a hyperparameter; I picked it at random.

Could you please post a dummy code, which shows the usage of your approach?

I am stuck in one place. If I have a tensor like

a = torch.randn(3, 3)

then how do I get the beginning index of each patch? If I extract all 2x2 patches from it, I want [0, 0] for the first patch, [0, 1] for the second, and so on: [1, 0], [1, 1].

In other words, I want to extract the patches along with their locations in the original tensor.

For 1-D patches I am able to extract the location using torch.where, but it does not work for patches with more than one dimension.
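One possible way to get both the patches and their starting indices is to combine `Tensor.unfold` with `torch.cartesian_prod`, since the first two dimensions of the unfolded tensor already enumerate the top-left corners in row-major order. This is a sketch for a single 2-D tensor with stride 1; the function name `extract_patches_with_locations` is hypothetical, and `torch.arange` replaces `torch.randn` only so that the result is easy to read.

```python
import torch

def extract_patches_with_locations(a, k):
    """Return all k x k patches of a 2-D tensor and their top-left [row, col] indices."""
    # After two unfolds: patches[i, j, p, q] == a[i + p, j + q]
    patches = a.unfold(0, k, 1).unfold(1, k, 1)       # (H-k+1, W-k+1, k, k)
    n_rows, n_cols = patches.shape[:2]
    # All (row, col) pairs in the same row-major order as the reshape below.
    locations = torch.cartesian_prod(torch.arange(n_rows), torch.arange(n_cols))
    return patches.reshape(-1, k, k), locations

a = torch.arange(9.).reshape(3, 3)
patches, locations = extract_patches_with_locations(a, 2)
```

`locations[n]` is then the index of the first pixel of `patches[n]`, so the pairing between a patch and its position is kept by sharing the same index `n`.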

Hello, I describe the task of my neural network in these steps:

- Extract as many patches as I can from the image. Currently I only consider square patches. (To make it more complicated, I could also extract rectangular patches; maybe in the future there would be a way to extract non-square/non-rectangular patches as well.)

- For each of these patches, I also extract its location.

- Based on this location, I update the representation of each patch. That is, if I extracted

[X] -> appears at index [0, 0]

[Y] -> appears at index [1, 1]

[XY

YX] -> appears at index [5, 5] (consider the location of first pixel for 2x2 patch)

then the first two patches would contribute to updating each other's representation (I take a dot product between their embeddings for this) because they are near each other. The third patch is far from the first two (how far away a patch may be is a hyperparameter), so it would not contribute to their representation (I would not take the dot product of the third patch with the first or second patch).

- I reduce the representation of the patch that covers the entire image to the number of classes in my training set, and get a loss -> backprop -> optimize.

- After this, all the patches that I extracted from the image have a learnt representation.

- During the test phase, when given a new image, I again extract as many patches as I can, along with their locations.

- Some of these patches have a learnt representation because they were found in training images, so my neural network has some idea what that pattern means.

- I once again apply step 3) to the test image and reduce the representation of the patch that covers the entire image to the number of classes -> get accuracy.

The part where I am stuck is how to extract the location of each patch and represent it in an embedding, that is, something like

[X] -> appears at index [0, 0] -> represented by embedding1 of dim 10 (10 is a hyperparameter)

[XY

YX] -> appears at index [5, 5] -> represented by embedding2 of dim 10

Plus, I need to keep the location in order to do the dot product, and that information would be lost as soon as I represent a patch as an embedding.

I’m still unsure how this method should work and would need to see at least pseudo code for it.

E.g. I don’t know what [X], [XY, YX] stand for and why the indices suddenly change for the latter case.

It would be helpful if you could add at least pseudo code with tensors and their shapes for each step, and what the desired output should look like.

Hello, sorry, my bad. I have updated the locations. They would be patches; for example, if I have an input image,

[XYX

YXY

XYX] -> shape [3, 3]

then I would extract

[X] -> shape [1x1] -> location [0, 0], [0, 2] ...

[Y] -> shape [1x1] -> location [0, 1], [1, 0] ...

[XY

YX] -> shape [2x2] -> location [0, 0], [1, 1]

...

But extracting the patches along with their locations appears to be a memory-intensive task, so I am stuck at the first step itself; all the further steps are only possible after embedding all the patches.

I did some more work on this and share how far I got. Consider our input image to be 3x3, with 3 kinds of pixels: 0, 1, and 2.

image = torch.tensor([[0., 1., 2.],
                      [0., 2., 1.],
                      [0., 0., 0.]])

I extract the location of each pixel; it looks something like this,

location = torch.tensor([[[0., 0., 0., 0.], [0., 0., 0., 1.], [0., 0., 1., 0.]],
                         [[0., 1., 0., 0.], [0., 1., 0., 1.], [0., 1., 1., 0.]],
                         [[1., 0., 0., 0.], [1., 0., 0., 1.], [1., 0., 1., 0.]]])

(the first 2 bits encode the row, the last 2 bits the column)

and get an embedded_image_with_location,

tensor([[[0, 0, 0, 0, 0, 0],
         [0, 1, 0, 0, 0, 1],
         [1, 0, 0, 0, 1, 0]],
        [[0, 0, 0, 1, 0, 0],
         [1, 0, 0, 1, 0, 1],
         [0, 1, 0, 1, 1, 0]],
        [[0, 0, 1, 0, 0, 0],
         [0, 0, 1, 0, 0, 1],
         [0, 0, 1, 0, 1, 0]]], dtype=torch.int32)

The first two bits indicate the pixel value, the last four bits indicate the location.
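For reference, this encoding can be built directly from the image. The following is a sketch under a few assumptions: the pixel values are stored as integers rather than floats, two bits are enough for the pixel value, the row and the column, and the helper `to_bits` is a made-up name for this example.

```python
import torch

def to_bits(values, num_bits):
    """Binary-encode a tensor of non-negative integers, most significant bit first."""
    shifts = torch.arange(num_bits - 1, -1, -1)
    return (values.unsqueeze(-1) >> shifts) & 1

image = torch.tensor([[0, 1, 2],
                      [0, 2, 1],
                      [0, 0, 0]])

# Row and column index of every pixel, each with shape (3, 3).
rows = torch.arange(3).unsqueeze(1).expand(3, 3)
cols = torch.arange(3).unsqueeze(0).expand(3, 3)

# 2 bits pixel value + 2 bits row + 2 bits column -> shape (3, 3, 6)
encoded = torch.cat(
    [to_bits(image, 2), to_bits(rows, 2), to_bits(cols, 2)], dim=-1
).to(torch.int32)
```

With this layout, `encoded[1, 1]` is `[1, 0, 0, 1, 0, 1]`: pixel value 2 at row 1, column 1.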

Next, I create a pattern extractor. Suppose it extracts 5 patterns from our input image.

(How many patterns it extracts, and which ones, is an open question for me. For example, when we see a human face, we extract patterns like eye, nose, ear, hair, and so on.)

[tensor([[0, 0, 0, 0, 0, 0],
         [0, 1, 0, 0, 0, 1],
         [1, 0, 0, 0, 1, 0],
         [1, 0, 0, 1, 0, 1],
         [0, 0, 1, 0, 0, 0],
         [0, 0, 1, 0, 0, 1]], dtype=torch.int32),
 tensor([[0, 0, 0, 1, 0, 0]], dtype=torch.int32),
 tensor([[0, 0, 0, 0, 0, 0],
         [0, 1, 0, 0, 0, 1],
         [0, 0, 1, 0, 0, 0]], dtype=torch.int32),
 tensor([[0, 1, 0, 0, 0, 1]], dtype=torch.int32),
 tensor([[0, 0, 1, 0, 0, 0]], dtype=torch.int32)]

Next, I create a link dictionary that works something like this (for example, an ear is part of a face, a pupil is part of an eye, and so on):

defaultdict(list, {0: [5], 1: [5], 2: [0, 5], 3: [0, 2, 5], 4: [0, 2, 5]})

Here, 5 represents the entire image,

0 is a part of 5,

2 is a part of 0 and 5, and so on.

Now the task of our neural network is to update this link dictionary. If told that this image belongs to class, let's say, 7, then the updated link dictionary looks like this (if the image was of a human face, then 7 represents human face; the image is linked to this class and the class is linked to this image):

defaultdict(list, {0: [5], 1: [5], 2: [0, 5], 3: [0, 2, 5], 4: [0, 2, 5], 5: [7], 7: [5]})

There is a two-way link between 5 and 7.

When shown a variation of the same image, we again extract patterns from the image and search the link dictionary for a pattern that occurs in both the training image and the test image (for example, if the test set contains a variation of a human face, it extracts patterns and searches for them in the link dictionary). The closest class that we reach (that is, the one the minimum number of links away) is the prediction.
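The "minimum number of links away" lookup can be sketched as a breadth-first search over the link dictionary. This is only an illustration of the search step, under the assumption that the set of class nodes (here just `{7}`) is tracked separately; `closest_class` and `class_nodes` are hypothetical names.

```python
from collections import deque

# Link dictionary from the post, after the image (5) was linked to class 7.
links = {0: [5], 1: [5], 2: [0, 5], 3: [0, 2, 5], 4: [0, 2, 5], 5: [7], 7: [5]}
class_nodes = {7}   # assumption: we keep track of which nodes are classes

def closest_class(start, links, class_nodes):
    """Breadth-first search from a pattern; returns (class, number_of_links) or None."""
    queue = deque([(start, 0)])
    seen = {start}
    while queue:
        node, dist = queue.popleft()
        if node in class_nodes:
            return node, dist
        for neighbour in links.get(node, []):
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append((neighbour, dist + 1))
    return None

prediction_from_pattern_3 = closest_class(3, links, class_nodes)
prediction_from_image = closest_class(5, links, class_nodes)
```

Pattern 3 reaches class 7 in two links (3 -> 5 -> 7), while a pattern that never occurred in a training image has no entry in the dictionary and gives no prediction.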

I do not know what the parameters would be in this case, or how to extract patterns from the input image.